Problems with Code Coverage when Assessing Functional Tests

Author: Baubak Gandomi

This is the first post in a two-part series about the importance of measuring the efficiency of functional tests in platform engineering and in complex products. Baubak Gandomi is a Test Architect with Adobe Customer Journey Management.

Code coverage is often used as a KPI to show progress in projects, especially when the quality of a legacy product needs to be brought up to speed. It is expected to reveal holes in our confidence in our products and their associated tests. We think that, although its results seem clear, it is quite misleading when used on its own to represent the efficiency of your tests.

Code coverage is actually a very good measure in itself: it allows us to find lines of code that are not tested. But I feel that it does not give a clear picture of the state of the tests, and that it leads to false assumptions when generalized to tests that are not unit tests.

The main focus of this article is functional testing. We will often use the term “line-level” coverage to designate coverage reports that point to a line of code. This includes, among other things, line and branch coverage.

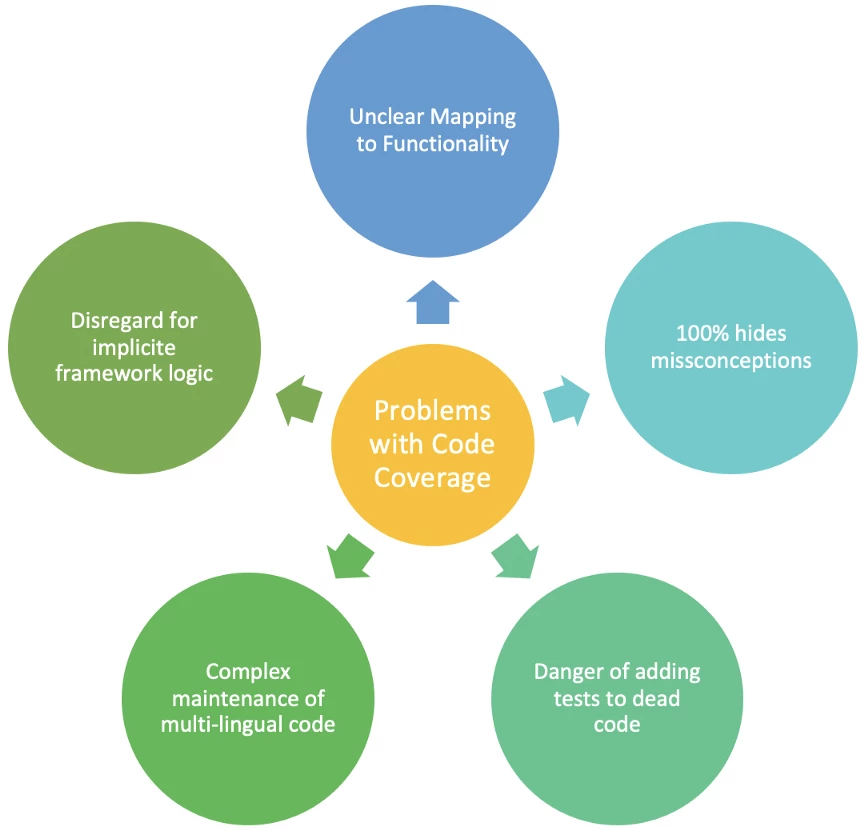

Problems with Line Level Coverage and Functional Tests

Although we think line-level coverage is very well suited to low-deployment tests, such as unit tests and some component tests, we think it has a few drawbacks when applied to functional tests. In this section, we explain why.

Unclear Mapping to Functionality

When you are confronted with a coverage report, the gaps in line coverage will not necessarily point to untested functionality. Usually, the most intuitive course of action is to add a unit test.

Unless large parts of the code are untested, the uncovered lines will not really tell you which functionality is untested. As a result, it is hard to consult the functional experts about line coverage gaps.

For functional tests, the real reference for coverage and gaps is the documentation, along with the product experts or managers.

Incompatible Comparison between Functional and Low-Level Tests

When comparing functional tests with unit tests using code coverage, we often get comments such as:

Why doesn’t adding 10 functional tests raise the coverage as much as adding 10 unit tests?

The funny thing about comments such as this is that they sound great and logical. It is obvious that we should have a lot of unit tests, many more than functional tests. However, the problem is that the efficiency of a functional test is not measured by the lines it touches, but by how much of the business-specified functionality it verifies.

100% Coverage yet Error-Prone

I’ll tell you a secret. I have code with around 100% code coverage. Yet I know it has errors, and that there are use cases where it will fail. I just didn’t have the time to fix it. I am pretty sure I am not alone in this.

A very simple example of this is an “empty” method that is supposed to calculate prime numbers. It does not fit the specification, but a single call covers it 100%. Another example is a method that does not validate its incoming arguments. Adding tests with bad input will not raise coverage, but will definitely crash the method.
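To make these two examples concrete, here is a minimal Python sketch. The functions `is_prime` and `average` are hypothetical, and `run_traced` is a toy line tracer built on `sys.settrace`, standing in for a real coverage tool. It shows that one call fully covers the wrong prime stub, and that a bad-input call crashes `average` without executing a single new line.

```python
from __future__ import annotations

import sys
from types import FrameType
from typing import Any, Callable, Optional


def run_traced(func: Callable[..., Any], *args: Any) -> tuple[set[int], Any, Optional[BaseException]]:
    """Call func(*args) and record which of its own source lines ran.

    A toy stand-in for a real line-coverage tool: only lines inside
    func's code object are recorded.
    """
    target = func.__code__
    executed: set[int] = set()

    def tracer(frame: FrameType, event: str, arg: Any):
        if frame.f_code is not target:
            return None            # do not trace other functions
        if event == "line":
            executed.add(frame.f_lineno)
        return tracer              # keep tracing lines in func's frame

    result: Any = None
    error: Optional[BaseException] = None
    previous = sys.gettrace()      # restore any existing tracer afterwards
    sys.settrace(tracer)
    try:
        result = func(*args)
    except Exception as exc:       # lines run before a crash are still recorded
        error = exc
    finally:
        sys.settrace(previous)
    return executed, result, error


def is_prime(n: int) -> bool:
    # Hypothetical "empty" stub: it ignores the specification,
    # yet any single call executes its only statement.
    return n > 1


def average(values: list[float]) -> float:
    # Hypothetical method that never validates its argument.
    total = sum(values)
    return total / len(values)     # raises ZeroDivisionError for []


lines_2, _, _ = run_traced(is_prime, 2)
lines_9, prime_9, _ = run_traced(is_prime, 9)
print(lines_2 == lines_9, prime_9)    # True True: same lines covered, 9 wrongly "prime"

good_lines, _, _ = run_traced(average, [1.0, 2.0])
bad_lines, _, bad_error = run_traced(average, [])
print(bad_lines <= good_lines, type(bad_error).__name__)  # True ZeroDivisionError
```

In both cases the extra test adds no covered line at all, which is exactly why a coverage report cannot flag the missing behavior.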

In short, line-level coverage does not find faulty assumptions or functional holes. Functional testing would usually find these cases quickly, but coverage will not help us here. If you only focus on line coverage when testing, you will lose sight of the underlying functionality and miss some errors.

Dead Code is the Enemy

We think that unless you really know your code, it is hard to tell whether a lack of coverage is due to dead code. When you are filling the gaps, you need to be extra careful, because you may be adding tests to cover code that is never actually used.

Here again, holding functional tests to the task of covering all the code makes sense only if we are sure that they cover all the functionality. This is especially useful when creating harnesses. Once you are sure about the functional coverage, the code coverage of the functional tests becomes genuinely useful for detecting dead code. But, yet again, this is a specific intervention, and using it as a KPI will not help.
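As a rough sketch of that dead-code check, assuming you can export, per file, the set of executable lines and the set of lines executed by the functional suite (the function name and the data below are hypothetical), the candidates are simply the set difference:

```python
from __future__ import annotations


def dead_code_candidates(
    executable: dict[str, set[int]],
    functional_hits: dict[str, set[int]],
) -> dict[str, list[int]]:
    """List executable lines that no functional test executed.

    Only meaningful once the functional suite is believed to cover all
    specified functionality; otherwise these are just test gaps.
    """
    candidates: dict[str, list[int]] = {}
    for path, lines in executable.items():
        missed = sorted(lines - functional_hits.get(path, set()))
        if missed:
            candidates[path] = missed
    return candidates


# Hypothetical data, e.g. extracted from a coverage tool's report.
executable = {"billing.py": {10, 11, 12, 20, 21}, "export.py": {5, 6}}
functional_hits = {"billing.py": {10, 11, 12}}
print(dead_code_candidates(executable, functional_hits))
# {'billing.py': [20, 21], 'export.py': [5, 6]}
```

Each reported line still needs a human review: the output is a list of candidates, not a verdict.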

Complex & Cumbersome Mixture of Coverage Technologies

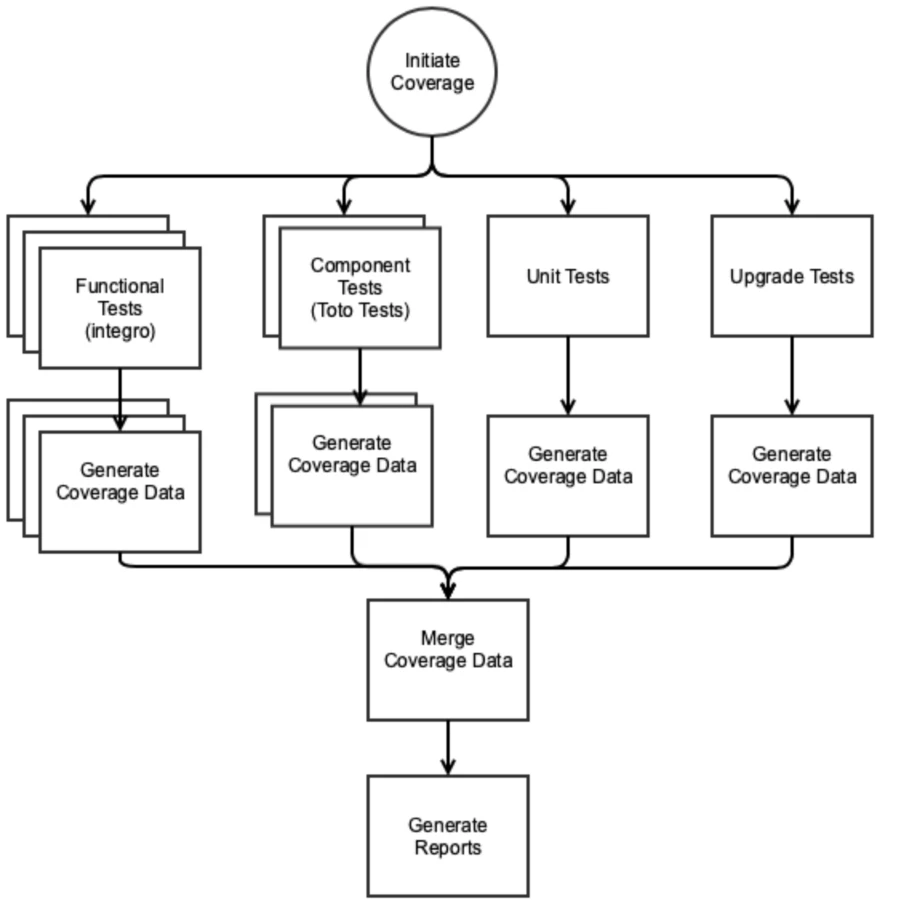

The whole process of activating coverage for different technologies, gathering the data, and merging it into a coherent and useful report is not only extremely slow, but also very complex, and it involves many error-prone steps.

The figure above shows one implementation we put in place for measuring code coverage across a series of different tests. This process involves four different instances, which handle tests, instance deployments, measurements, and reporting.

We also think that gathering coverage on a running application will induce unexpected problems that code coverage tools have trouble handling. It involves large amounts of data being managed in memory by the coverage tools, often in parallel, which puts a lot of stress on them.

Implicit Logic is hard to Capture

Frameworks and SDKs imply a codebase that caters to a large set of use cases. Adding use cases may not even change line coverage, because the logic is defined implicitly in JSON or XML.
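A minimal Python sketch of this effect, under stated assumptions: the rule format, `OPERATORS`, and `actions_for` are hypothetical, standing in for a framework that reads its logic from JSON. One test executes every line of the engine, so a new use case added purely in the JSON changes the behavior without creating any line coverage gap.

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Hypothetical business rules: each entry is a use case, but it lives
# in JSON rather than in code.
RULES: list[dict[str, Any]] = json.loads("""
[
  {"field": "country", "op": "eq", "value": "FR", "action": "apply_vat"},
  {"field": "amount",  "op": "gt", "value": 1000, "action": "manual_review"}
]
""")

OPERATORS: dict[str, Callable[[Any, Any], bool]] = {
    "eq": lambda a, b: a == b,
    "gt": lambda a, b: a > b,
}


def actions_for(order: dict[str, Any], rules: list[dict[str, Any]]) -> list[str]:
    """Return the actions an order triggers.

    Every rule, whatever business case it encodes, runs through these
    same few lines.
    """
    triggered = []
    for rule in rules:
        compare = OPERATORS[rule["op"]]
        if rule["field"] in order and compare(order[rule["field"]], rule["value"]):
            triggered.append(rule["action"])
    return triggered


# One test executes every line of actions_for ...
print(actions_for({"country": "FR", "amount": 5000}, RULES))
# ['apply_vat', 'manual_review']

# ... so a new use case added only in the rules changes behavior
# without adding a single uncovered line.
extended = RULES + [{"field": "country", "op": "eq", "value": "DE", "action": "reverse_charge"}]
print(actions_for({"country": "DE", "amount": 10}, extended))
# ['reverse_charge']
```

Branch coverage would additionally ask for an order where the `if` is false, but one more negative test closes that gap too, and neither metric says whether the new rule matches the business specification.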

Conclusions

I would like to reiterate that we are not suggesting a replacement for line-level coverage, which we think is great for unit tests. What we are saying is that relying too heavily on this metric is counterproductive. This is especially true when using code coverage to measure the efficiency of functional tests. Instead, we need other coverage KPIs to help us estimate how confident we can be in the stability of our releases.

For measuring the efficiency of functional tests, we propose Transactional Coverage. This is covered in more detail in our upcoming article titled “Transactional Coverage — a Functional Approach to Detecting Test Gaps”. Stay tuned!

Follow the Adobe Experience Platform Community Blog for more developer stories and resources, and check out Adobe Developers on Twitter for the latest news and developer products. Sign up here for future Adobe Experience Platform Meetups.

Thanks to Baubak Gandomi.

Originally published: Dec 3, 2020