Second activity (which uses an mbox to capture visitors who converted in the first A/B test activity) shows more unique visitors than converted in the first activity.

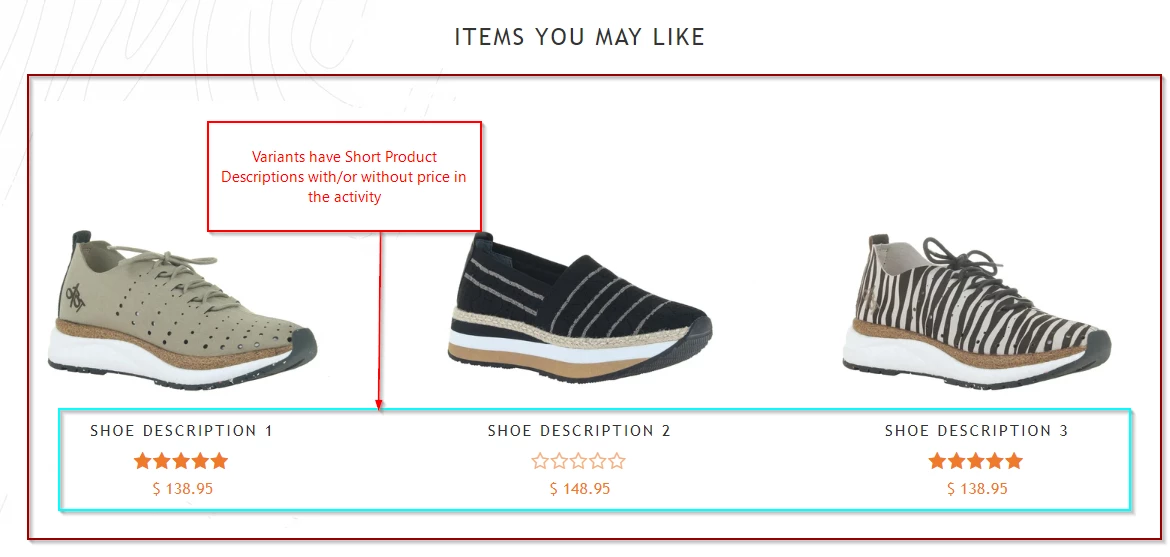

We are currently running an A/B test (carousel_desktop_add_spd_price) to determine whether adding short product descriptions, with or without a price tag, below the products featured in the product page carousel increases the carousel's click-through rate and the percentage of visitors who add to cart (out of the visitors who engaged with the carousel). The experiences are delivered on the product page template.

We have Adobe Analytics tags that capture when a visitor interacts with the carousel. However, because the product page contains two carousels, we are currently not able to distinguish between the Analytics interactions of each carousel. The test only manipulates the topmost carousel component on the page, so we cannot use Analytics alone to accurately capture the visitors who specifically interacted with the top carousel.

To capture that data accurately, we added code to each experience in the VEC that attaches a click handler to the topmost carousel. Whenever a visitor clicks the carousel component, the code calls adobe.target.trackEvent, passing Target the profile parameter profile.abRRcarouselClicked with a value matching the name of the experience the visitor qualified for.

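```javascript
// Attach a click handler to the topmost product carousel. querySelector returns
// the first match in document order, assumed here to be the top carousel.
function fireMboxOnRRClick() {
  var carousel = document.querySelector('.product-carousel .slick-list');
  if (!carousel) {
    return; // carousel not in the DOM yet, so there is nothing to attach to
  }

  carousel.onclick = function () {
    console.log('carousel clicked');

    // Send the experience name to Target as a profile parameter.
    adobe.target.trackEvent({
      "mbox": "customTestMbox",
      "params": {
        "profile.abRRcarouselClicked": "ctrlDesktop" // Can be ctrlDesktop, var1Desktop, or var2Desktop
      }
    });
  };
}
```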
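Because each experience needs its own value for profile.abRRcarouselClicked, one way to keep the three pasted snippets consistent is to build the trackEvent options from a single helper. This is a hypothetical sketch: buildCarouselClickEvent and ALLOWED_EXPERIENCES are illustrative names, not part of at.js; only the mbox name and the parameter key come from the code above.

```javascript
// Hypothetical helper: the three experience values from the snippet's comment.
const ALLOWED_EXPERIENCES = ["ctrlDesktop", "var1Desktop", "var2Desktop"];

// Builds the options object passed to adobe.target.trackEvent, rejecting
// any experience name outside the expected set (e.g. a typo in one variation).
function buildCarouselClickEvent(experienceName) {
  if (!ALLOWED_EXPERIENCES.includes(experienceName)) {
    throw new Error("Unknown experience name: " + experienceName);
  }
  return {
    mbox: "customTestMbox",
    params: {
      "profile.abRRcarouselClicked": experienceName
    }
  };
}

// In the variation 1 experience, the click handler would then call:
// adobe.target.trackEvent(buildCarouselClickEvent("var1Desktop"));
```

Failing loudly on an unknown name helps catch a mistyped value in one experience, which would otherwise leave those visitors out of every helper audience.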

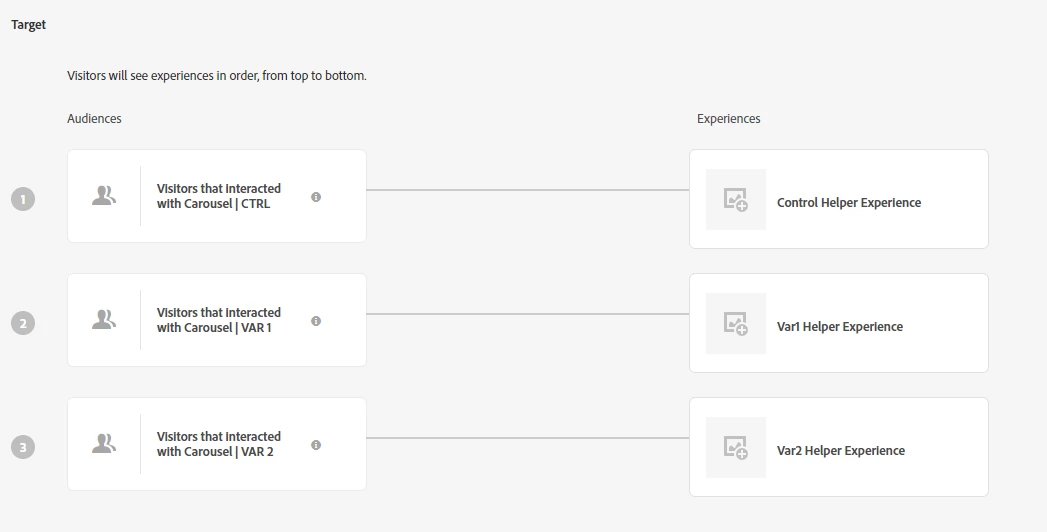

Using that profile parameter, we created a separate Experience Targeting (XT) “helper” activity (labelled desktop_add_spd_price_helper_20200518) in which visitors qualify for the activity audience based on the value set in the profile parameter (abRRcarouselClicked). This helper activity is used to accurately count the unique visitors who interacted with the product carousel in each experience.

The targeting rules for the helper activity.

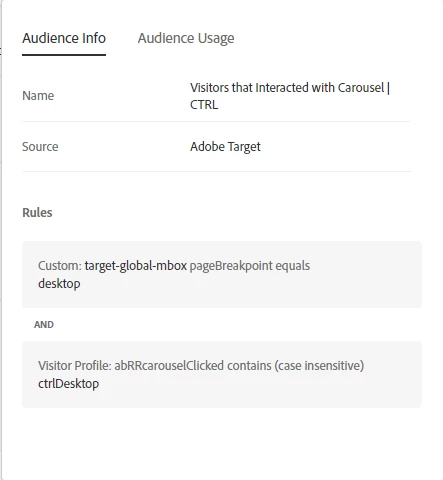

For example, in the screenshot below: visitors who engaged with the carousel in the control experience of the A/B test would have a parameter value of ‘ctrlDesktop’, so they would also qualify for the control experience of the helper activity. The same applies to variations 1 and 2.

The audience rules to qualify for the control experience of the helper activity.

In other words, people who clicked on the carousel should qualify for the helper activity on the next page.
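The audience logic described above amounts to a lookup from the stored profile value to a helper experience. This is a hypothetical model for illustration only: the experience labels ("Control", "Variation 1", "Variation 2") and helperExperienceFor are assumed names, and the real qualification is decided by Target, not by page code.

```javascript
// Hypothetical model of the helper activity's audiences: each helper
// experience matches one value of the abRRcarouselClicked profile parameter.
const HELPER_AUDIENCES = {
  ctrlDesktop: "Control",
  var1Desktop: "Variation 1",
  var2Desktop: "Variation 2"
};

// Returns the helper experience a visitor qualifies for, or null when the
// parameter is unset or unrecognised (the visitor never clicked the top carousel).
function helperExperienceFor(profile) {
  const value = profile["abRRcarouselClicked"];
  return Object.prototype.hasOwnProperty.call(HELPER_AUDIENCES, value)
    ? HELPER_AUDIENCES[value]
    : null;
}

console.log(helperExperienceFor({ abRRcarouselClicked: "var2Desktop" })); // Variation 2
console.log(helperExperienceFor({})); // null
```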

We tested this implementation in our dev environment and successfully qualified for both activities across all browsers.

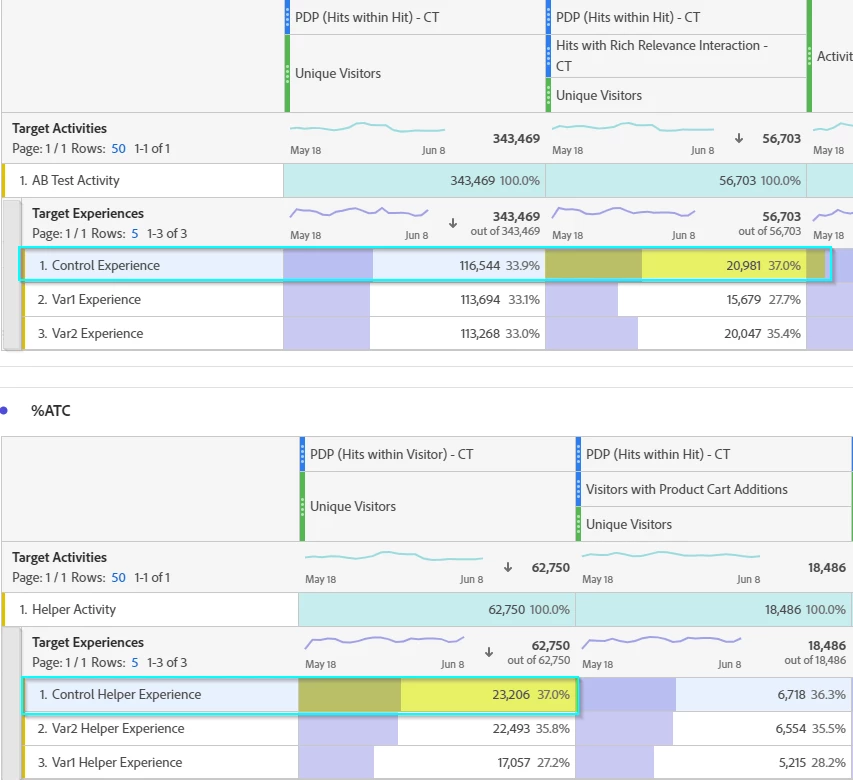

As the test ran over the next 3 weeks, we monitored both activities for discrepancies in the data. We expected to see some drop-off, but instead found that more visitors had qualified for our helper activity than had interacted with the carousel in our A/B test.

For example, in our Analytics workspace, 20,981 visitors interacted with the carousels on the product page template (Analytics counts interactions with both carousels together). The helper activity, however, shows 23,206 visitors, an excess of 2,225 visitors who qualified for the helper activity.
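The size of the discrepancy can be checked directly from the two figures quoted above. This is plain arithmetic on the reported numbers; the variable names are illustrative.

```javascript
// Figures reported in Analytics Workspace and in the Target helper activity.
const analyticsCarouselVisitors = 20981; // visitors who interacted with the carousels (Analytics)
const helperActivityVisitors = 23206;    // unique visitors in the XT helper activity (Target)

const excess = helperActivityVisitors - analyticsCarouselVisitors;
const excessPct = (excess / analyticsCarouselVisitors) * 100;

console.log(`Excess visitors: ${excess}`);                              // Excess visitors: 2225
console.log(`Excess relative to Analytics: ${excessPct.toFixed(1)}%`);  // Excess relative to Analytics: 10.6%
```

The helper activity therefore reports roughly 10.6% more visitors than Analytics, even though the Analytics figure already includes clicks on both carousels.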

First table displays data from the A/B test. Second table displays data from the XT Helper activity.

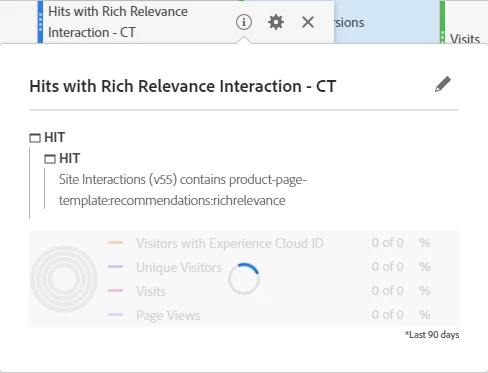

Below is a screenshot of the segment I used to capture the rate of visitors who interacted with the carousel. The segment includes all hits where the site interaction value contains a specific term that appears only when the carousel is clicked. Applying this segment to the Unique Visitors metric gives the number of unique visitors who interacted with the carousel.
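The segment's counting logic (filter hits, then count distinct visitors among the remaining hits) can be sketched as follows. The hit structure, the siteInteraction field, and the "carousel-click" term are hypothetical placeholders for the real Analytics dimension and term, which are not named here.

```javascript
// Hypothetical hit records standing in for Analytics hit data.
const hits = [
  { visitorId: "A", siteInteraction: "pdp:carousel-click:item1" },
  { visitorId: "A", siteInteraction: "pdp:carousel-click:item2" },
  { visitorId: "B", siteInteraction: "pdp:add-to-cart" },
  { visitorId: "C", siteInteraction: "pdp:carousel-click:item3" }
];

// Hit-level filter: keep hits whose site interaction contains the term,
// then count distinct visitors among the kept hits (the Unique Visitors metric).
function uniqueVisitorsMatching(hits, term) {
  const visitors = new Set();
  for (const hit of hits) {
    if (typeof hit.siteInteraction === "string" && hit.siteInteraction.includes(term)) {
      visitors.add(hit.visitorId);
    }
  }
  return visitors.size;
}

console.log(uniqueVisitorsMatching(hits, "carousel-click")); // 2
```

Visitor A clicks twice but is counted once, which matches how Unique Visitors deduplicates repeat interactions.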

We expected fewer visitors to qualify for the helper activity than interacted with the carousel. Why are more unique visitors entering the helper activity than have interacted with the carousel?