Modernizing Data Management: The Transition to Lakehouse Architectures

Introduction

Data management has taken significant strides in recent years, with Lakehouse architecture emerging as a leading-edge solution. For organizations handling complex data ecosystems, the Lakehouse offers a unified approach that combines the best elements of data lakes and data warehouses. This hybrid model promises benefits like streamlined access to data, performance enhancements, and reduced costs.

However, while Lakehouse architecture has the potential to redefine data management, it is still a new and evolving approach. Adopting a Lakehouse may not be necessary or beneficial for every organization, especially those already operating efficient data platforms. For the largest Indian bank, transitioning to a cloud data lake architecture has brought clear value, yet other organizations may find alternative solutions better suited to their needs. Here's a deeper look at the strengths of Lakehouse architecture and the factors to consider before making the leap.

The Journey of Data Management: Traditional vs. Emerging Models

On-Premises Data Warehouses

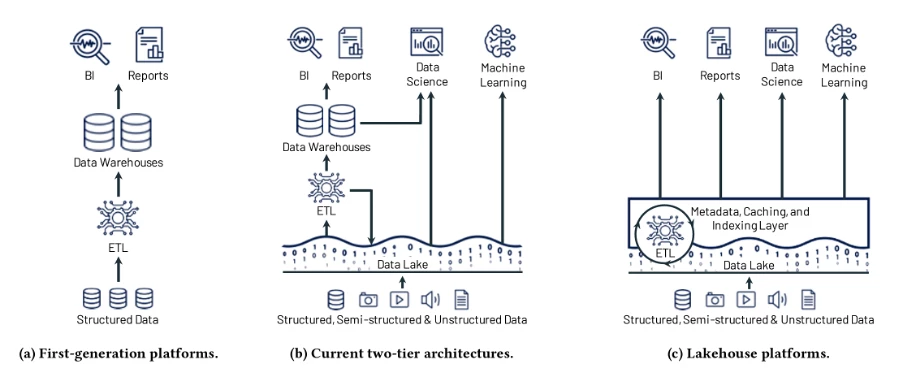

Data warehouses have long served as the backbone of data storage and analytics, optimized for structured data and traditional BI (Business Intelligence) tasks. Yet, they often struggle with scalability and flexibility, especially in managing unstructured data and supporting real-time insights.

Cloud Data Lakes

Cloud data lakes emerged to solve some of these limitations, offering low-cost storage for vast amounts of structured and unstructured data. However, with limited support for data governance and transactional consistency, data lakes introduced complexities of their own, particularly for organizations needing a robust and reliable analytics pipeline.

Lakehouse Architecture: A Hybrid Solution

Source: "Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics" by Michael Armbrust (Databricks), Ali Ghodsi (Databricks, UC Berkeley), Reynold Xin (Databricks), and Matei Zaharia (Databricks, Stanford University).

Lakehouse architecture seeks to address the challenges of both traditional data warehouses and cloud data lakes. By integrating open storage formats with advanced management features, the Lakehouse promises to reduce the complexity of ETL processes, improve data accessibility, and support advanced analytics and machine learning workloads.

Understanding Lakehouse Architecture

At its core, a Lakehouse combines the scalability and flexibility of data lakes with the data management and transactional capabilities of data warehouses. This architecture leverages open file formats like Parquet and ORC, enabling efficient data storage and retrieval. It introduces features such as:

- Schema Enforcement: Ensuring data conforms to predefined structures for consistency.

- ACID Transactions: Providing reliable data operations that are Atomic, Consistent, Isolated, and Durable.

- Metadata Management: Maintaining detailed information about data for easier governance and discovery.

- Indexing and Caching: Enhancing query performance by reducing data retrieval times.
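To make the first two features more concrete, here is a minimal sketch of how schema enforcement and an atomic commit might fit together in a log-based table. It is a toy illustration, not the implementation of any particular Lakehouse product: the `SCHEMA`, the `_log` directory layout, and the `validate`, `commit`, and `read_table` helpers are all hypothetical names chosen for this example, rows are stored inline as JSON rather than in Parquet or ORC, and a single writer is assumed.

```python
import json
import os
import tempfile

# Hypothetical schema for illustration: column name -> required Python type.
SCHEMA = {"account_id": str, "amount": float}


def validate(rows, schema):
    """Schema enforcement: reject the whole batch if any row does not conform."""
    for row in rows:
        if set(row) != set(schema):
            raise ValueError(f"unexpected columns: {sorted(row)}")
        for column, expected_type in schema.items():
            if not isinstance(row[column], expected_type):
                raise ValueError(f"{column!r} must be {expected_type.__name__}")


def commit(table_dir, rows, schema=SCHEMA):
    """Append a batch so that readers see either all of it or none of it."""
    validate(rows, schema)  # nothing is written if validation fails
    log_dir = os.path.join(table_dir, "_log")
    os.makedirs(log_dir, exist_ok=True)
    # Next version number = number of commits already in the log.
    # Assumption: one writer at a time; real systems add conflict detection.
    version = len([n for n in os.listdir(log_dir) if n.endswith(".json")])
    # Write to a temporary file outside the log, then rename it into place.
    # The rename is atomic on the same filesystem, so a partial file is never visible.
    fd, tmp_path = tempfile.mkstemp(dir=table_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        json.dump(rows, tmp)
    os.replace(tmp_path, os.path.join(log_dir, f"{version:08d}.json"))
    return version


def read_table(table_dir):
    """Read the table by replaying every committed log entry in version order."""
    log_dir = os.path.join(table_dir, "_log")
    if not os.path.isdir(log_dir):
        return []
    rows = []
    for name in sorted(os.listdir(log_dir)):
        if name.endswith(".json"):
            with open(os.path.join(log_dir, name)) as entry:
                rows.extend(json.load(entry))
    return rows
```

Calling `commit` with a batch in which one row has a string in the `amount` column raises `ValueError` and leaves the table unchanged, which is the behaviour the schema enforcement and atomicity bullets describe. Production table formats layer concurrency control, data files, and metadata on top of this basic idea.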

Why Lakehouse Architecture Could Be a Game-Changer

The Lakehouse model offers a compelling vision: a single platform that accommodates both analytics and machine learning, without the need for separate systems. For large organizations, particularly those like the largest Indian bank managing vast and varied datasets, a Lakehouse could streamline operations and reduce costs. Here are the primary benefits that make the architecture attractive:

Unified Data Access

Lakehouses centralize structured and unstructured data, reducing silos and ETL overhead for easier, faster data access. This unification allows for more comprehensive analytics and insights, as all data is accessible from a single point.

Real-Time Analytics at Scale

With indexing, caching, and ACID transactions, Lakehouses optimize performance, supporting immediate insights and ML workflows without extensive preparation. This capability is critical for businesses that rely on timely data to make strategic decisions.
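One way indexing can reduce retrieval time on file-based tables is data skipping: keeping minimum and maximum values per data file and reading only the files whose range can overlap the query filter. The sketch below illustrates that idea under simplifying assumptions. The `FileStats` type, the `files_to_scan` helper, and the file names are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Per-file statistics for one column (hypothetical layout)."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(files, lower, upper):
    """Return paths of files whose [min, max] range overlaps [lower, upper]."""
    return [
        f.path
        for f in files
        if f.max_value >= lower and f.min_value <= upper
    ]


# Illustrative statistics for three data files, keyed on a numeric column.
stats = [
    FileStats("part-0.parquet", min_value=1, max_value=100),
    FileStats("part-1.parquet", min_value=101, max_value=200),
    FileStats("part-2.parquet", min_value=201, max_value=300),
]

# A filter on values between 150 and 180 only needs the middle file.
selected = files_to_scan(stats, lower=150, upper=180)
```

The benefit depends on how the data is laid out: if values are spread randomly across files, every file's range overlaps most queries and little is skipped.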

Cost Efficiency and Flexibility

Cloud-based Lakehouses separate storage and compute, keeping storage costs low and scaling compute resources on demand. This model provides flexibility in managing workloads and can lead to significant cost savings compared to traditional data warehouses.
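The cost argument can be sketched as simple arithmetic. The two functions below compare a coupled model, where nodes sized for peak load run all month, with a decoupled model, where storage is billed per terabyte and compute is billed only for the hours used. All parameter names are hypothetical, and any rates passed in are placeholders to be replaced with a provider's actual pricing.

```python
def coupled_monthly_cost(node_count, node_hourly_rate, hours_in_month=730):
    """Coupled model: nodes holding both storage and compute run all month."""
    return node_count * node_hourly_rate * hours_in_month


def decoupled_monthly_cost(stored_tb, storage_rate_per_tb,
                           compute_hours, compute_hourly_rate):
    """Decoupled model: pay for stored data plus the compute hours actually used."""
    return stored_tb * storage_rate_per_tb + compute_hours * compute_hourly_rate
```

The savings come from utilization rather than from the architecture itself: if compute runs continuously, the decoupled model may cost as much as or more than the coupled one, so it is worth plugging in measured workload hours before drawing conclusions.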

Adaptability for Advanced Analytics

Lakehouses are designed for frequent, high-speed analytics, supporting real-time decision-making and adaptive customer insights. They facilitate the integration of new data sources and types, making it easier to evolve with changing business needs.

But Is Lakehouse Architecture the Right Choice for Your Organization?

While Lakehouse architecture is promising, it's important to assess its readiness and relevance for your specific needs. Here are considerations for organizations evaluating a Lakehouse:

Maturity and Stability

Lakehouse architecture is still relatively new, and its long-term stability is unproven in some contexts. For regulated industries, meeting compliance and performance standards is essential. Organizations should evaluate whether the technology has matured enough to meet their reliability requirements.

Necessity vs. Complexity

If your current system meets performance needs, a full Lakehouse migration may add complexity without clear gains. Assess if Lakehouse capabilities like ML integration are truly essential for your business objectives.

Resource Investment

Adopting Lakehouse architecture requires new skills and tools. The added learning curve may outweigh benefits if in-house expertise is limited. Consider the costs associated with training, hiring, and potential disruptions during the transition.

Pilot Testing for Practical Evaluation

A phased approach, such as pilot testing, allows organizations to evaluate Lakehouse benefits with minimal risk. This strategy helps identify the operational adjustments required for full-scale adoption and provides tangible insights into the architecture's impact.

Case Study: The Journey of the Largest Indian Bank

In the case of the largest Indian bank, the decision to modernize its data platform was driven by specific needs. As data volumes and analytics demands increased, the bank's traditional on-premises warehouse struggled with both scalability and cost. By moving to a cloud data lake, the bank achieved:

- Centralized Data Management: Consolidating data sources into a unified storage system.

- Streamlined ETL Processes: Reducing the complexity and time associated with data transformation.

- Enhanced Analytics Capabilities: Enabling real-time analytics and supporting advanced machine learning workflows.

- Operational Cost Reduction: Lowering expenses through scalable cloud services and optimized resource utilization.

However, the decision was carefully evaluated and aligned with the bank's long-term data strategy, ensuring the architecture was truly necessary for its objectives.

Why the Bank Should Focus on Data Lake Solutions—Beyond the Hype

While the appeal of modern solutions like Delta Lake and Lakehouse architecture is strong, it's essential for the largest Indian bank to critically evaluate the practical benefits these platforms bring beyond just staying on trend. As the bank works with vast amounts of sensitive data, any new data architecture should align with long-term objectives for data governance, scalability, and performance.

Stability Over Novelty

Delta Lake's capabilities were promising but relatively untested for large-scale operations. The bank preferred the reliability of a cloud data lake with a proven track record, ensuring that critical financial data remained secure and accessible.

Focus on Core Needs

The bank prioritized scalability and data centralization over advanced features like ACID transactions, opting for a straightforward cloud data lake that fit its current objectives. This approach allowed the bank to address immediate challenges without overcomplicating the infrastructure.

Flexibility for Future Adoption

By selecting a cloud data lake, the bank retained the option to integrate Delta Lake or Lakehouse features as these solutions matured. This strategy provided adaptability without immediate complexity, enabling the bank to evolve its data architecture over time.

Conclusion: Balancing Innovation with Practicality in Data Management

Lakehouse architecture represents a significant advancement in data management, offering a unified platform that can handle diverse data types and workloads. For organizations facing challenges with data silos, scalability, and the integration of analytics and machine learning, the Lakehouse provides a compelling solution.

However, the decision to adopt a Lakehouse should be made after careful consideration of an organization's specific needs, resources, and long-term goals. For the largest Indian bank, focusing on a cloud data lake provided immediate, practical benefits while keeping the door open for future innovation. By balancing the allure of cutting-edge solutions with a pragmatic approach to data management, organizations can ensure they make technology decisions that deliver real value.