Building Smarter Automation with GenAI: How We’re Supercharging Quality and Velocity

Introduction: Fast, Reliable Automation with GenAI—It’s Happening

At Adobe, innovation and customer experience go hand in hand. We’ve already made great strides with Customer Use Case (CUC) Automation, simulating real-world workflows to catch bugs before they reach our users. Now, we’re taking that even further—by integrating Generative AI (GenAI) into our automation strategy.

Our goal? To automate smarter, faster, and more intuitively—with AI as our co-pilot.

While traditional automation requires manual scripting, GenAI helps us generate test logic, data, and even test scripts dynamically. We’re combining human judgment with machine intelligence to evolve from test automation to intelligent automation.

This blog dives into how we’re building this system, what it looks like under the hood, and why it’s changing the way we approach quality engineering.

Why Traditional Automation Needs a Boost

Automated testing has always been powerful—but it’s also labor-intensive. Authoring, maintaining, and evolving test suites takes significant time and effort. And when systems change frequently, even minor tweaks can break dozens of tests.

We asked ourselves:

- Can we speed up test creation without compromising quality?

- Can we reduce time spent maintaining tests?

- Can we teach machines to understand workflows like we do?

That’s where GenAI comes in. It helps us generate and evolve test logic at scale—without hard-coding every step. It also brings intelligence to automation by learning patterns, optimizing test data, and detecting changes proactively.

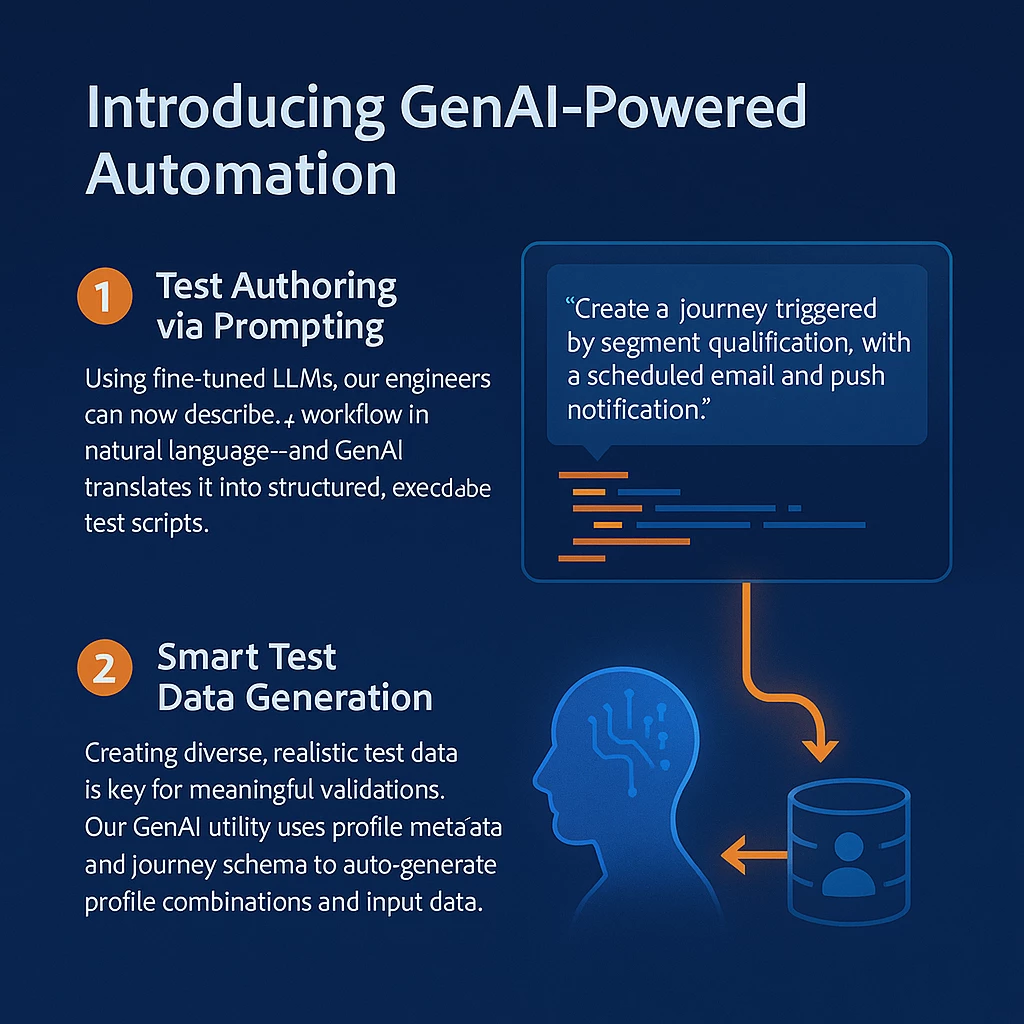

Introducing GenAI-Powered Automation

We’ve embedded GenAI capabilities into our test development and execution pipeline in two key ways:

1. Test Authoring via Prompting

Using fine-tuned LLMs, our engineers can now describe a workflow in natural language—and GenAI translates it into structured, executable test scripts. For example:

“Create a journey triggered by segment qualification, with a scheduled email and push notification.”

From this input, GenAI generates test skeletons that include API calls, environment setup, assertions, and cleanup logic. Starting from a generated skeleton reduces scripting time by 70% and accelerates onboarding for new engineers.
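To make the shape of a test skeleton concrete, here is a minimal sketch in Python. It is an illustration under assumptions, not our production tooling: `GeneratedSkeleton`, `build_prompt`, and `render_feature` are hypothetical names, the model call itself is left out, and the step wording is invented for the example. It only shows how the four parts named above (setup, API calls, assertions, cleanup) might be captured and rendered as a Cucumber scenario for review.

```python
from dataclasses import dataclass, field


@dataclass
class GeneratedSkeleton:
    """Hypothetical container for the four parts of a generated test."""
    name: str
    setup: list = field(default_factory=list)
    api_calls: list = field(default_factory=list)
    assertions: list = field(default_factory=list)
    cleanup: list = field(default_factory=list)


def build_prompt(description: str) -> str:
    """Wrap the engineer's description in instructions for the model."""
    return (
        "Generate a Cucumber scenario with environment setup, API calls, "
        "assertions, and cleanup for this workflow:\n" + description.strip()
    )


def render_feature(skeleton: GeneratedSkeleton) -> str:
    """Render the skeleton as Gherkin text that a reviewer can read."""
    lines = [f"Scenario: {skeleton.name}"]
    sections = [
        ("Given", skeleton.setup),
        ("When", skeleton.api_calls),
        ("Then", skeleton.assertions),
    ]
    for keyword, steps in sections:
        for index, step in enumerate(steps):
            # Gherkin uses "And" for every step after the first in a group.
            lines.append(f"  {keyword if index == 0 else 'And'} {step}")
    # Cleanup is listed as comments; a real suite would likely use a hook.
    lines.extend(f"  # cleanup: {step}" for step in skeleton.cleanup)
    return "\n".join(lines)


skeleton = GeneratedSkeleton(
    name="Journey triggered by segment qualification",
    setup=["a sandbox with a qualifying segment"],
    api_calls=["I create a journey with a scheduled email and push notification"],
    assertions=["the journey is published"],
    cleanup=["delete the journey"],
)
print(build_prompt("Create a journey triggered by segment qualification."))
print(render_feature(skeleton))
```

Keeping the skeleton as structured data before rendering it means a reviewer can check each part separately, which fits the peer-review step every generated test goes through.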

2. Smart Test Data Generation

Creating diverse, realistic test data is key for meaningful validations. Our GenAI utility uses profile metadata and journey schema to auto-generate profile combinations and input data. It understands edge cases, boundary values, and common real-world usage, improving test coverage across permutations that would be time-consuming to handcraft.
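The boundary-value part of this idea can be sketched without any model at all. The example below is a deterministic stand-in, not the GenAI utility itself: the schema shape, the field names, and the helpers `candidate_values` and `generate_profiles` are all assumptions made for illustration. It enumerates values just outside, on, and just inside each numeric boundary and combines them across fields.

```python
from itertools import product

# Hypothetical profile schema: field name -> constraints.
PROFILE_SCHEMA = {
    "age": {"type": "int", "min": 18, "max": 120},
    "email_opt_in": {"type": "bool"},
    "locale": {"type": "enum", "values": ["en-US", "fr-FR", "ja-JP"]},
}


def candidate_values(spec: dict) -> list:
    """Return boundary and representative values for one field."""
    if spec["type"] == "int":
        low, high = spec["min"], spec["max"]
        # Just outside, on, and just inside each boundary.
        return [low - 1, low, low + 1, high - 1, high, high + 1]
    if spec["type"] == "bool":
        return [True, False]
    if spec["type"] == "enum":
        return list(spec["values"])
    raise ValueError(f"unsupported field type: {spec['type']}")


def generate_profiles(schema: dict) -> list:
    """Build every combination of candidate values across fields."""
    names = list(schema)
    value_lists = [candidate_values(schema[name]) for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


profiles = generate_profiles(PROFILE_SCHEMA)
print(len(profiles))  # 6 * 2 * 3 = 36 combinations
print(profiles[0])
```

A full cross product grows quickly as fields are added, which is one reason handcrafting these permutations is slow and a generator that prioritizes realistic combinations is attractive.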

What the Pipeline Looks Like Now

Here’s how we’ve integrated GenAI into our CI/CD ecosystem:

- Prompt-to-Test Interface: Engineers use an internal CLI or UI to describe workflows. GenAI suggests test code in our Cucumber-based framework.

- Dynamic Assertions: GenAI analyzes historical test runs and logs to recommend assertion strategies (e.g., expected status codes, timing buffers, error scenarios).
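As a rough illustration of the dynamic-assertions idea, the sketch below derives an expected status code and a timing buffer from past runs using plain statistics. It is a simplified stand-in rather than the GenAI component: the `RunRecord` shape, the `recommend_assertions` helper, the 95th-percentile choice, and the 1.2 buffer factor are all assumptions for the example.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class RunRecord:
    """One historical test run (hypothetical log shape)."""
    status_code: int
    duration_ms: float


def recommend_assertions(runs: list, buffer: float = 1.2) -> dict:
    """Suggest an expected status code and a timeout from past runs."""
    if not runs:
        raise ValueError("need at least one historical run")
    # The most frequent status code becomes the expected value.
    expected_status, _ = Counter(r.status_code for r in runs).most_common(1)[0]
    durations = sorted(
        r.duration_ms for r in runs if r.status_code == expected_status
    )
    # Nearest-rank 95th percentile, then a safety buffer on top.
    rank = max(1, math.ceil(0.95 * len(durations)))
    p95 = durations[rank - 1]
    return {"expected_status": expected_status, "timeout_ms": round(p95 * buffer)}


history = [
    RunRecord(200, 100),
    RunRecord(200, 120),
    RunRecord(200, 150),
    RunRecord(500, 900),
]
print(recommend_assertions(history))
```

Only runs that returned the expected status feed the timing estimate, so a slow failing run does not inflate the suggested timeout.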

We still maintain tight governance. Every AI-generated test is peer-reviewed. But we’ve significantly reduced the manual effort and time required to scale automation across new features and services.

Real Results: Faster Coverage, Better Confidence

Since integrating GenAI into our automation framework, we’ve seen some powerful outcomes:

- 20% faster test coverage for new feature rollouts

- Higher test diversity, especially for edge cases and complex data conditions

But the real win? Engineers spend less time fighting test code and more time building quality features.

Culture Shift: From Test Authoring to Test Curating

Much like our journey with Customer Use Case Automation, the biggest change isn’t just technical—it’s cultural.

We’ve moved from test authorship to test curation. Engineers guide the GenAI assistant, validate outputs, and focus on refining intent and edge coverage rather than hand-coding every test line.

And with better test intelligence, we now catch regressions earlier and resolve them faster—with context-aware suggestions from our GenAI debugger assistant.

What’s Next: Beyond Testing

We’re only scratching the surface of what’s possible. Here’s what’s on our radar:

- Self-Healing Tests: Flaky tests often result from minor UI or API changes. With GenAI, we can introduce self-healing logic that dynamically adjusts selectors, expected responses, or payloads by comparing against previous patterns or schemas. It flags changes, proposes updates, and in some cases, auto-fixes them, saving countless hours of maintenance.

- AI-Driven Impact Analysis: Understand which tests to run based on code changes.

- Conversational Test Analytics: Let anyone query test results in natural language (“Which segments had the most failed journeys last week?”).

- Scalable Regional Test Enablement: Different regions often share the same core functionalities but may be affected by infrastructure nuances or data variations. With GenAI, we can dynamically enable region-specific test runs based on the same base workflows—ensuring localized reliability without duplicating test logic. This makes our E2E coverage smarter and more scalable across global environments.
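To give a feel for the self-healing item in the list above, here is a minimal sketch of the "propose an update" step for a broken UI selector. It uses fuzzy string matching from Python's standard `difflib` module as a simple stand-in for a model-driven comparison. The helper `propose_selector_fix`, the selector names, and the 0.7 similarity cutoff are assumptions for illustration.

```python
from difflib import get_close_matches
from typing import Optional


def propose_selector_fix(
    broken: str, available: list, cutoff: float = 0.7
) -> Optional[str]:
    """Return the closest surviving selector, or None if nothing is similar."""
    if broken in available:
        return broken  # The selector still exists, so nothing needs healing.
    matches = get_close_matches(broken, available, n=1, cutoff=cutoff)
    return matches[0] if matches else None


# Selectors found on the current page (hypothetical values).
current_selectors = ["#journey-save-button", "#journey-cancel", "#segment-picker"]

print(propose_selector_fix("#journey-save-btn", current_selectors))
print(propose_selector_fix("checkout", current_selectors))
```

Returning a proposal instead of silently patching the test keeps a human in the loop, in line with the flag-then-propose flow described in that item.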

Our vision is to build an intelligent quality platform—where AI helps engineers focus on what matters most: delivering exceptional customer experiences.

Smarter Automation, Better Experiences

By integrating GenAI into our quality engineering pipeline, we’re building a smarter, faster, more resilient automation system. We’re not just writing more tests—we’re writing the right tests faster, evolving with the product, and proactively catching issues before customers notice.

This is quality at scale—powered by AI, owned by engineers, and felt by our users.