Reporting Metrics, Part 1: When Adobe Target Reporting Does Not Match Expectations

by Christopher Davis and Kevin Scally

When reporting on Target activities, a report’s content often does not align with expectations. Sometimes this is simply because something isn’t as intuitive as it seems; other times there is some troubleshooting to be done. This post compiles some of the “greatest hits” of FAQs and troubleshooting tips for Activity Reporting with Target as the reporting source. Check out Part 2 and Part 3, where we cover the same ideas for A4T as your reporting source (Part 2) and for a third-party analytics tool as your reporting source (Part 3)!

Understanding Your Success Metrics

The most common issues with Target reporting center on visitor or success metric counting. When using Target reporting, it is important to understand the success metric you have selected. A full breakdown of success metrics can be found here, but at a high level, Target can track the following types of metrics:

- Conversion: Conversion events are tracked through one of three metrics. Viewed a page: the visitor has reached a specified URL that indicates a conversion (e.g., a Thank You/Order Confirmation page). You can specify patterns to easily capture multiple pages, though this can occasionally lead to tracking more pages than intended. Viewed an mbox: a display notification was sent for a specified mbox name (for example, an mbox fired on the order confirmation page). Clicked on element/mbox: a click notification was sent for a specified element or mbox. Tracked elements are referred to by their CSS selectors (see CSS Selector & Click Tracking Validation below for more info on CSS selectors) and tracked mboxes are referred to by name.

- Revenue: Revenue is relayed to Target through an order confirmation mbox, and there are a few options for revenue-based metrics. Revenue Per Visitor (RPV) is the total revenue attributed to the activity divided by the number of Unique Visitors within the Experience. Average Order Value is the average revenue for each order submitted; this metric is not affected by visitors who do not place an order. Total Sales tracks the total revenue generated by each Experience. Orders tracks the total order volume generated by each Experience.

- Engagement: Engagement metrics can be used to measure upper-funnel on-site behavior in a few different ways. Page Views tracks the overall volume of page views per Experience, and Time on Site tracks the overall amount of time spent on the site per Experience. Target also offers Custom Scoring. This engagement metric requires a custom implementation and allows specific values to be assigned to different pages of a site (e.g., pages of a conversion funnel become increasingly high in value as a user progresses through the funnel). Custom Scoring should only be pursued with a specific use case in mind, since for most reporting needs the other metric types are sufficient and require much less development work.
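To make the revenue metrics in the list above concrete, here is a small worked example in Python. The visitor count and order amounts are invented for illustration, and the `summarize_revenue` helper is hypothetical; it is only the arithmetic implied by the definitions above, not how Target computes its reports.

```python
from dataclasses import dataclass


@dataclass
class RevenueSummary:
    orders: int
    total_sales: float
    revenue_per_visitor: float
    average_order_value: float


def summarize_revenue(unique_visitors: int, order_totals: list[float]) -> RevenueSummary:
    """Compute the four revenue metrics for one Experience (illustrative only)."""
    orders = len(order_totals)
    total_sales = sum(order_totals)
    # RPV divides by every unique visitor in the Experience, buyers or not.
    rpv = total_sales / unique_visitors if unique_visitors else 0.0
    # AOV divides by orders only, so non-purchasing visitors do not affect it.
    aov = total_sales / orders if orders else 0.0
    return RevenueSummary(orders, total_sales, rpv, aov)


# Hypothetical Experience: 1,000 unique visitors who placed 4 orders.
summary = summarize_revenue(1000, [50.0, 75.0, 125.0, 150.0])
print(summary)
```

With these made-up numbers, Total Sales is 400, RPV is 0.40, and Average Order Value is 100. Doubling the visitor count with the same orders would halve RPV while leaving Average Order Value unchanged, which is why the two metrics can move in different directions.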

CSS Selector & Click Tracking Validation

When Target click tracking or modifications do not appear to be functioning as expected, it is important to validate that your tracking and/or modifications are using valid CSS selectors. Activities authored within the Visual Experience Composer (VEC) use CSS selectors to apply modifications and tracking to the desired HTML elements. When modifications do not show, or click tracking does not increment as expected, the usual cause is that the CSS selector specified in the activity setup does not match any element on the production site where the activity is running.

- The site https://flukeout.github.io/ offers a quick, gamified crash course on CSS selectors. Once you are familiar with writing CSS selectors, you can use a more comprehensive resource such as MDN Web Docs to learn about the full breadth of CSS selectors that exist: https://developer.mozilla.org/en-US/docs/Web/CSS/CSS_Selectors

- You can find info on using the Chrome Developer Console to search a page's Document Object Model (DOM) for a CSS selector here: https://developer.chrome.com/blog/search-dom-tree-by-css-selector/ (this is useful for confirming that a CSS selector is valid; be sure to validate the selector on the page itself rather than on the page loaded inside the VEC)

To validate that Target VEC click tracking is properly tracking an element, inspect the element in the browser's developer tools and look for the at-element-click-tracking class on it. If the element has had this class applied to it by the at.js Page Load request, it should send notification calls using the Delivery API when clicked. You can validate this by looking for a delivery call in the Network tab when clicking the element. Make sure "Preserve log" is checked when doing this because, by default, the Network tab clears all recorded calls when a new page loads. An example of how to validate a notification call is shown below:

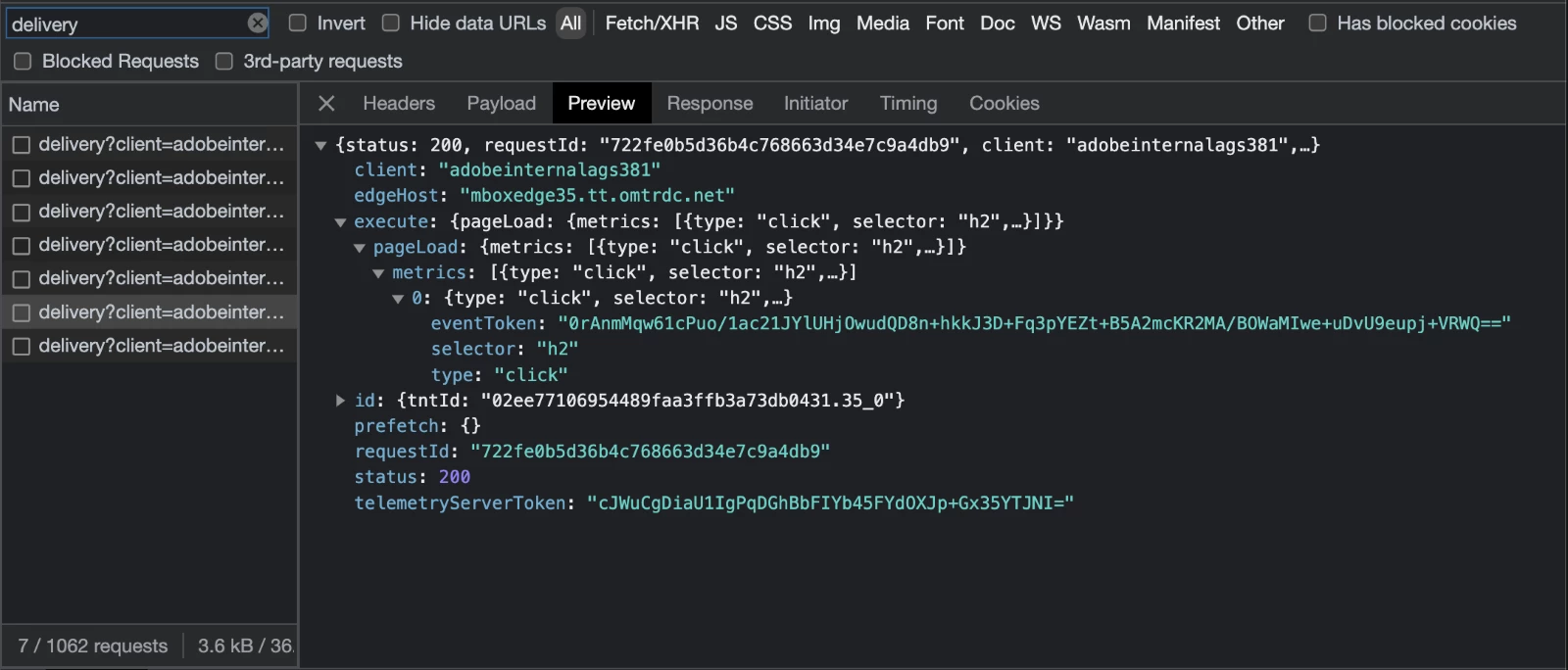

Response to a content request from Target. It contains the eventToken to be used in notification calls (execute > pageLoad > metrics > [index] > eventToken).

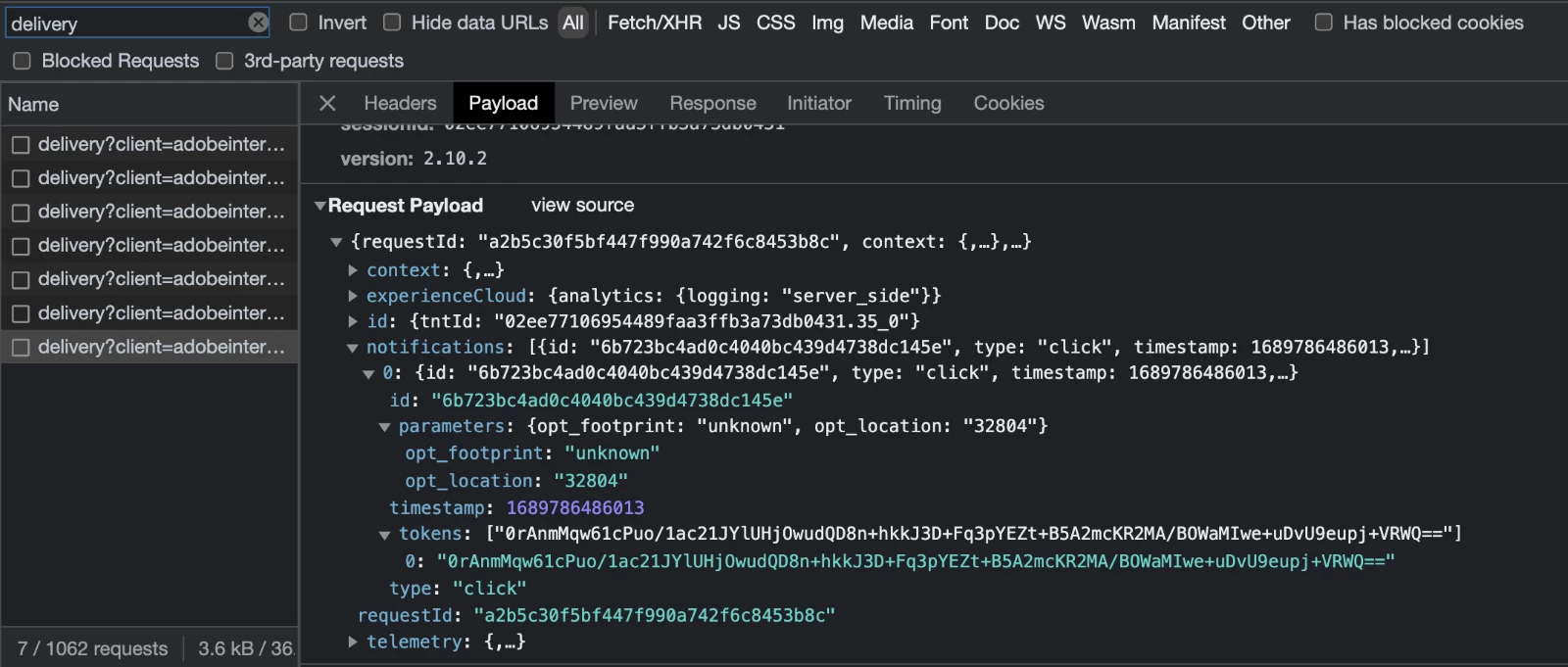

Payload of the notification call. It contains the token provided by the Delivery API request made to retrieve content (notifications > [index] > tokens > [index]) along with the type of notification, in this case a click notification.
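The token comparison described in the two captions above can also be scripted once you have copied the content response and the notification payload out of the Network tab as JSON. The sketch below is a minimal illustration: the key paths follow the captions, but the function names, the token strings, and the trimmed sample objects are assumptions made for the example, and real calls carry many more fields.

```python
def event_tokens_from_response(response: dict) -> set[str]:
    """Collect values at execute > pageLoad > metrics > [index] > eventToken."""
    metrics = response.get("execute", {}).get("pageLoad", {}).get("metrics", [])
    return {m["eventToken"] for m in metrics if "eventToken" in m}


def matching_notifications(response: dict, payload: dict) -> list[dict]:
    """Return notifications whose tokens include a token issued in the response."""
    issued = event_tokens_from_response(response)
    return [
        n for n in payload.get("notifications", [])
        if issued.intersection(n.get("tokens", []))
    ]


# Trimmed, hypothetical samples standing in for the copied JSON bodies.
sample_response = {
    "execute": {"pageLoad": {"metrics": [
        {"type": "click", "selector": "#buy-now", "eventToken": "TOKEN_A"},
    ]}}
}
sample_payload = {
    "notifications": [
        {"type": "click", "tokens": ["TOKEN_A"]},
        {"type": "display", "tokens": ["TOKEN_Z"]},
    ]
}

for n in matching_notifications(sample_response, sample_payload):
    print(n["type"], n["tokens"])
```

In this sample only the click notification carries a token that the response issued. If no notification matches, the click is probably being sent with a token from a different request, or not being sent at all.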

Notification calls are either click or display in type. When working with Target on a SPA architecture, it is common to use the prefetch request type to retrieve content from Target. This returns all content a visitor is eligible for when the request is made but does not increment reporting. A display-type notification call must be triggered in order to increment visitor counts, while a click-type notification call must be triggered in order to increment conversion counts. You can read more about Target notifications here.

Traffic Split Issues

Sometimes an A/B test may appear not to adhere to the allocation set for the Activity. This is often the result of allocation percentages being changed after the Activity has gone live. In short, traffic allocation changes apply only to new entrants to the activity from the point in time that the adjustment was made. Existing members of the activity are not reallocated based on the updated percentages, as exposing users to two different variants would invalidate any statistical conclusions that could be drawn from the A/B test data. You can read more about this behavior of Target on Experience League.
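A quick worked example shows how a mid-flight allocation change can produce a report that matches neither the old nor the new setting. The numbers and the `blended_split` helper below are hypothetical, and the model is deliberately simplified: it assumes every new entrant is assigned exactly according to the allocation in effect when they enter, and that nobody is reassigned afterwards.

```python
def blended_split(phases: list[tuple[int, dict[str, float]]]) -> dict[str, float]:
    """Return each experience's share of all entrants across allocation phases.

    Each phase is (new_entrants, allocation), where allocation maps an
    experience name to its fraction of new entrants during that phase.
    """
    totals: dict[str, float] = {}
    for entrants, allocation in phases:
        for experience, fraction in allocation.items():
            # Entrants keep the experience they were assigned in their own phase.
            totals[experience] = totals.get(experience, 0.0) + entrants * fraction
    all_entrants = sum(totals.values())
    if not all_entrants:
        return {}
    return {name: count / all_entrants for name, count in totals.items()}


# Hypothetical activity: 10,000 entrants at 50/50, then 10,000 more at 90/10.
observed = blended_split([
    (10_000, {"A": 0.5, "B": 0.5}),
    (10_000, {"A": 0.9, "B": 0.1}),
])
print({name: round(share, 2) for name, share in observed.items()})
```

Under these assumptions the report would show roughly a 70/30 split, even though the activity is currently set to 90/10 and was originally set to 50/50.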

Automated Personalization (AP) & Auto-Target (AT) Reporting

Due to the nature of their functionality, reporting for Automated Personalization activities and A/B tests using Auto-Target allocation does not work at the usual Visitor and Experience level. These features of Target operate at the Visit (Auto-Target) and Offer (Automated Personalization) levels. These activity types use machine learning models to determine the best offer to show a visitor on a per-session basis. Rather than falling into a static A or B Experience as in a traditional test, visitors fall into either the Control or the Personalized arm of the experiment.

With these arms, the offer can vary from session to session, so that the model powering the Personalized arm can be evaluated against the Control arm on how effectively it selects the best offer based on a visitor's most up-to-date Target Visitor Profile snapshot. Because of this, it does not make sense to attribute a conversion in the current session to what was shown to the visitor in a previous session, as that would not accurately inform the model of which offers could be directly attributed to a conversion. Since this is by design, a conversion not showing up in the activity's reporting in such a case does not indicate a problem with AT/AP reporting.

This post was written by Christopher Davis ( @chrismdavis ) and Kevin Scally ( @kevin_scally ). Both are Target technical consultants within Adobe Consulting Services.