Adobe Target — On Device Decisioning

Author: Alex Bishop

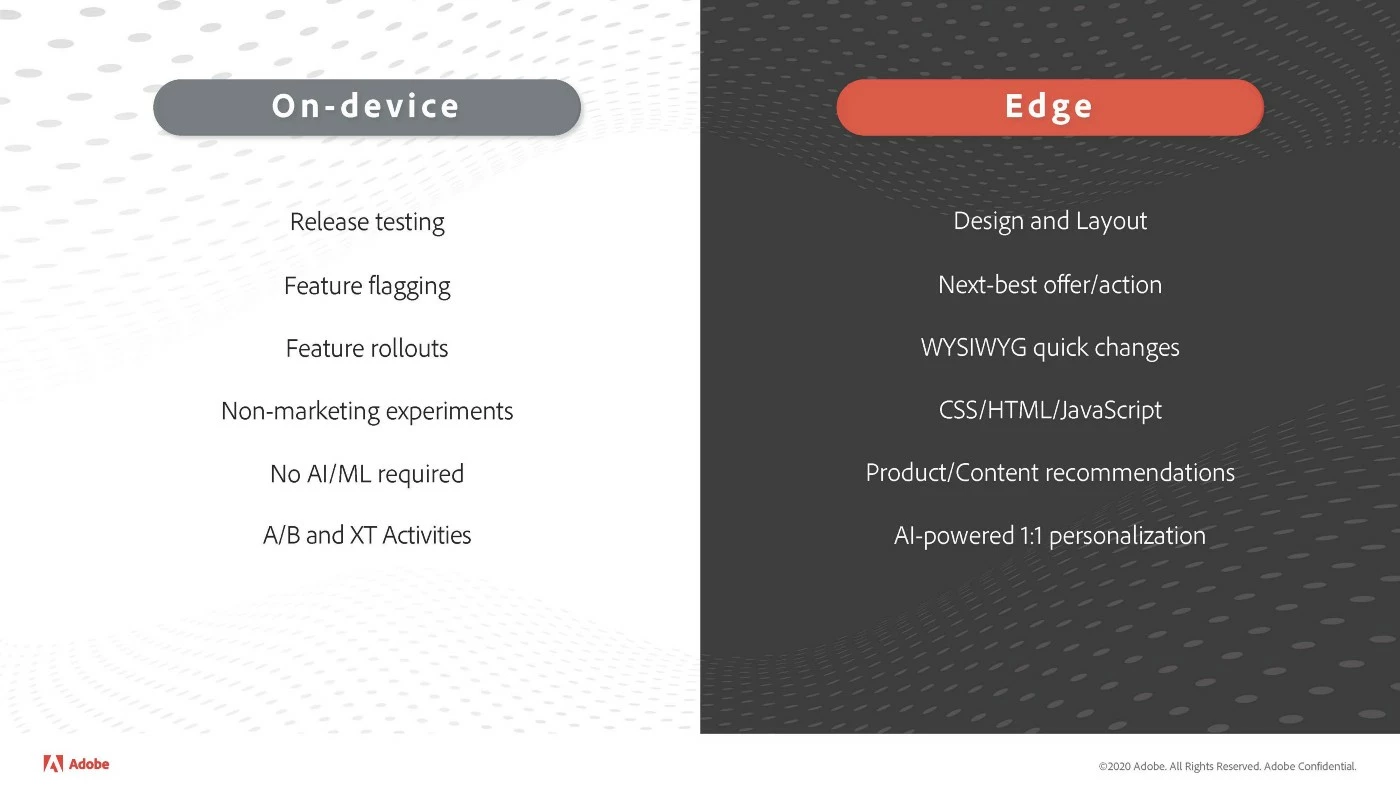

This short article provides a brief introduction to Adobe’s new On Device Decisioning capabilities. There is some very useful documentation available here that explains when to use On-device vs existing Edge decisioning, so my main focus will be answering questions that have come up quite regularly since the announcement, followed by a quick run-through of the implementation basics.

#1 What you should know

Availability

The good news for the server-side fanatics amongst us is that On Device Decisioning is available in the latest Java & Node.js SDKs. The bad news for everyone else is that it’s not currently available in the Web or Mobile SDKs, although it is on the roadmap for both.

Server-side SDK basics

There is a JSON artifact that contains all offers/activities data, which will be cached on your server; the Target SDK is then able to interpret the rules contained within that artifact and make decisions around which experience to give your end user.

Note: this doesn’t mean that the offers are going to be downloaded and stored on the client-side browser when using the server-side SDKs.

Web/Mobile SDKs

As mentioned above, these aren’t available yet but the idea is that the Web SDK (release planned for 2021) will involve storing the artifact on the user’s browser and the Mobile SDK will store the artifact on the user’s mobile device. Just to re-emphasise what has been said in the previous section, in the case of the server-side SDKs, the artifact will be stored on your server not the user’s browser.

Creating Activities

On-Device currently only works with the form composer; it isn’t yet supported for VEC activities. This isn’t particularly surprising given that most server-side activities (hybrid implementation being the notable exception) will be created using the form composer. I would expect the Web SDK to support VEC activities when On-Device is released next year.

Reporting Options

The short version is: yes, you can use A4T. When you create an activity with Analytics reporting selected, metadata is included in the artifact that ensures the necessary stitching with Analytics takes place. When a decision is made about which experience to show a user, the notifications call will include that metadata to ensure the hits are recorded in Analytics.

Device Caching

Whenever you create/edit/delete activities, a new artifact is deployed to the Akamai CDN and the cache is invalidated so the new version can be downloaded. The SDKs have a polling interval that you can define, which ensures that the newest artifact is downloaded and used for execution.
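As a rough illustration of the polling interval, here is a minimal config fragment for the Node.js SDK. The `pollingInterval` option is assumed here to be expressed in milliseconds, and the `minutes` helper is my own addition for readability, so check the SDK documentation for the unit and default before relying on it.

```javascript
// Hypothetical helper (not part of the SDK): converts minutes to milliseconds.
const minutes = (n) => n * 60 * 1000;

// Fragment of the options object that would be passed to the Target SDK.
// Assumption: pollingInterval is in milliseconds.
const pollingConfig = {
  decisioningMethod: "on-device",
  pollingInterval: minutes(5), // re-check for a newer artifact every 5 minutes
};

console.log(pollingConfig);
```

A shorter interval picks up activity changes sooner at the cost of more requests to the CDN, so the right value depends on how often your activities change.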

User Profile

It’s not currently possible to use On-Device for activities that rely on the customer profile for targeting/personalisation etc.

Consistent User Experience

The decision making process is deterministic — murmurhash3 is used to work out which bucket the user should belong to. The 3 main IDs that are used in the context of Target — ECID, mbox3rdpartyid, Target ID — are used for bucketing, so as long as the same ID exists the Target experiences a user sees will be consistent.
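To make the idea of deterministic bucketing concrete, here is a small self-contained sketch. It implements the standard 32-bit MurmurHash3 and maps the hash to a percentage bucket. The key format (`activityId.visitorId`), the function names and the way the bucket is mapped to an experience are my own assumptions for illustration, not the SDK's actual internals.

```javascript
// Standard MurmurHash3 (x86, 32-bit) over the UTF-8 bytes of a string.
function murmurhash3_32(key, seed = 0) {
  const data = Buffer.from(key, "utf8");
  const c1 = 0xcc9e2d51;
  const c2 = 0x1b873593;
  let h = seed >>> 0;
  const nblocks = data.length >> 2;

  // Body: process the input four bytes at a time.
  for (let i = 0; i < nblocks; i++) {
    let k = data.readUInt32LE(i * 4);
    k = Math.imul(k, c1);
    k = (k << 15) | (k >>> 17);
    k = Math.imul(k, c2);
    h ^= k;
    h = (h << 13) | (h >>> 19);
    h = (Math.imul(h, 5) + 0xe6546b64) | 0;
  }

  // Tail: mix in the remaining 1 to 3 bytes (intentional fall-through).
  let k = 0;
  const tail = nblocks * 4;
  switch (data.length & 3) {
    case 3: k ^= data[tail + 2] << 16;
    case 2: k ^= data[tail + 1] << 8;
    case 1:
      k ^= data[tail];
      k = Math.imul(k, c1);
      k = (k << 15) | (k >>> 17);
      k = Math.imul(k, c2);
      h ^= k;
  }

  // Finalisation mix.
  h ^= data.length;
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

// Hypothetical: map a visitor/activity pair to a bucket in the range [0, 100).
function bucketFor(visitorId, activityId) {
  return (murmurhash3_32(`${activityId}.${visitorId}`) % 10000) / 100;
}

// Hypothetical: walk the cumulative traffic split and pick the matching experience.
function pickExperience(visitorId, activityId, experiences) {
  const bucket = bucketFor(visitorId, activityId);
  let upper = 0;
  for (const exp of experiences) {
    upper += exp.percent;
    if (bucket < upper) return exp.name;
  }
  return experiences[experiences.length - 1].name;
}

const split = [{ name: "A", percent: 50 }, { name: "B", percent: 50 }];
console.log(pickExperience("visitor-123", "activity-1", split));
```

Because the output depends only on the IDs, calling `pickExperience` again with the same visitor and activity returns the same experience, with no state stored anywhere.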

AI/ML

There is a plan to include support for AI/ML activities, across all 3 SDK categories, but this is unlikely to happen soon. Adobe mentioned Privacy/GDPR challenges in a recent webinar, based on needing to store the user’s profile on device. However, the roadmap does already include providing Auto-allocate support for the server-side SDKs.

Using Recommendations

This isn’t currently possible due to challenges ranging from storing an entire product catalogue on a user’s device through to how the recommendations model can be trained on the user’s device.

#2 Implementation Basics

The first thing to say here is that you can get up and running very quickly by either running Adobe’s demo site or by checking out the samples provided by Jason Waters.

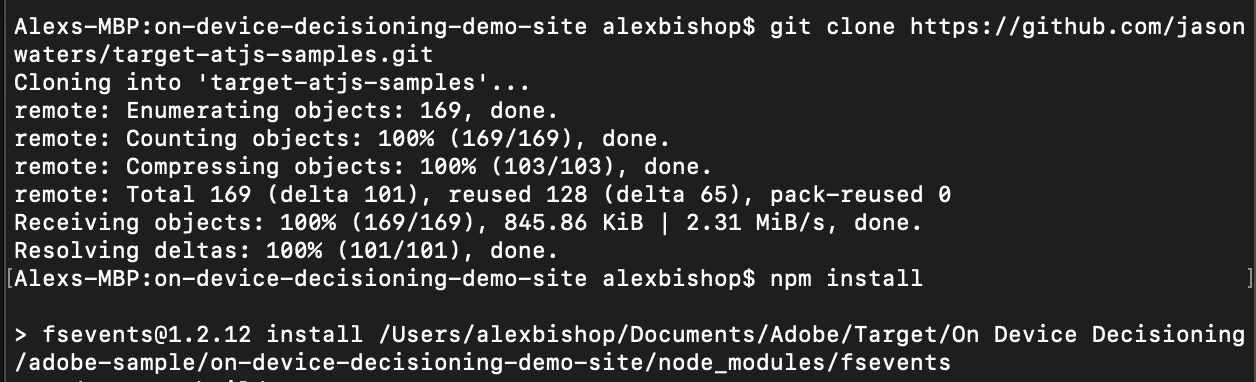

Assuming you have Node.js installed and you’re going to use Jason’s sample apps as your starting point, the first thing to do is clone the sample apps from GitHub:

git clone https://github.com/jasonwaters/target-atjs-samples.git

And then install the necessary dependencies:

npm install

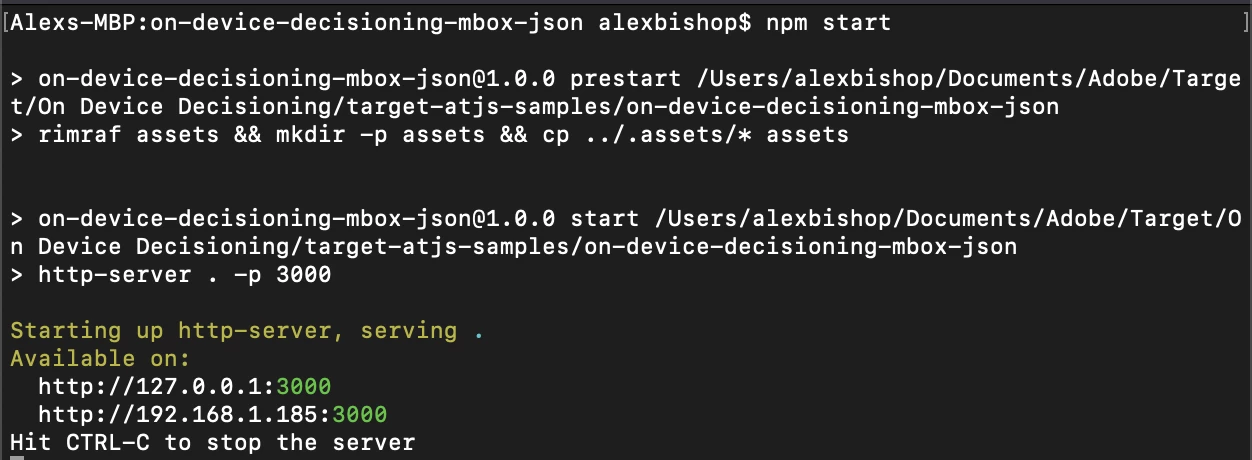

When you start the application, you will then get a notification that the server is running on localhost:3000:

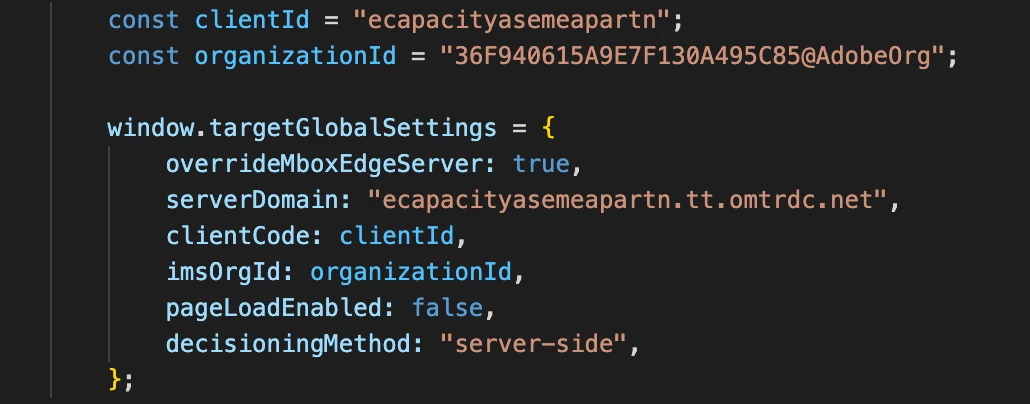

The first thing to take note of is the targetGlobalSettings object — you’ll notice that currently the decisioningMethod is server-side, which means that we’re still making a request to the Edge network to work out what experience the user should see:

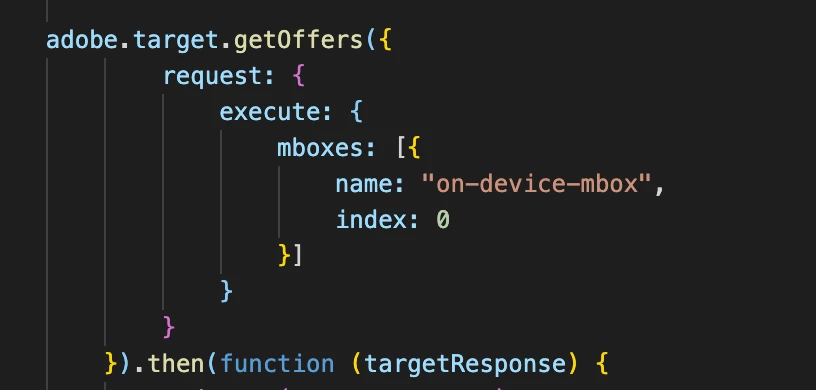

The rest is really as you would expect from a standard server-side implementation — a request to Target is made with an mbox name specified:

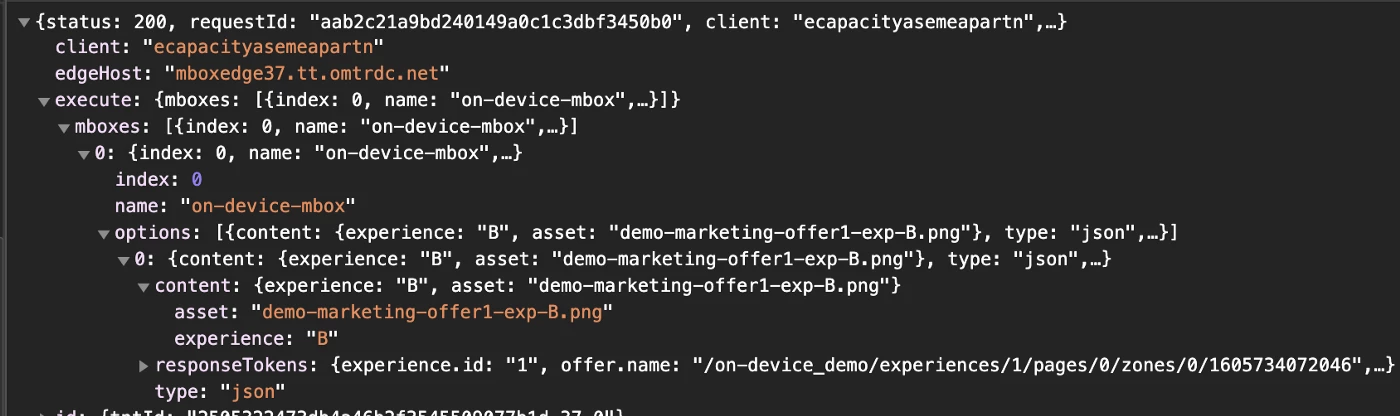
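As a rough sketch of what such a request can look like with the Node.js SDK, the helper below asks for a single mbox and returns the content of its first offer. The function name and the mbox name `demo-mbox` are hypothetical. The client is passed in as a parameter so the snippet runs on its own; in a real app it would be the object returned by `TargetClient.create(...)`.

```javascript
// Requests one mbox via the Target client and returns the first offer's content.
// `targetClient` is expected to expose getOffers(), as the Target Node.js SDK client does.
async function fetchMboxContent(targetClient, mboxName) {
  const result = await targetClient.getOffers({
    request: {
      context: { channel: "web" },
      execute: { mboxes: [{ name: mboxName, index: 0 }] },
    },
  });

  // The delivery response sits under `response`; offers are listed in `options`.
  const mbox = result?.response?.execute?.mboxes?.[0];
  const option = mbox?.options?.[0];
  return option ? option.content : null; // null when no activity qualifies
}

// Usage (assumes the SDK is installed and `targetClient` has been created):
// fetchMboxContent(targetClient, "demo-mbox").then(console.log);
```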

So if you use Jason’s example — or recreate the Target activities using your own Target instance, as I have done — then you’ll see the expected JSON response from your getOffers request. As you can see below, the response is a (relatively) simple JSON structure with various key/value pairs. If you look at the “content” key, you will see the JSON offer that was created in Target:

This isn’t strictly anything to do with On Device Decisioning but I think it’s worth setting the scene by showing the basics of a server-side implementation. So the next obvious question is:

Q: How do I configure the SDK to use On Device Decisioning?

A: Within the SDK config there is a decisioningMethod param, which can be set to “on-device”, “server-side” or “hybrid”. There are certain activities that aren’t supported by “on-device”, so setting it to “hybrid” allows these activities to still be served by falling back to a server-side request.

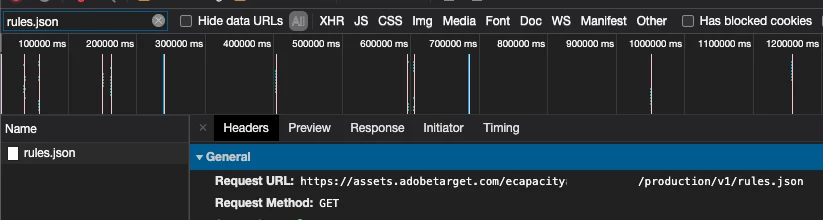
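A minimal sketch of switching between the three modes might look like the following. The `buildTargetConfig` helper and the placeholder client code and organisation ID are my own, for illustration only; the resulting object is what you would hand to `TargetClient.create`.

```javascript
// The three decisioningMethod values described above.
const DECISIONING_METHODS = ["on-device", "server-side", "hybrid"];

// Hypothetical helper: builds an SDK config and rejects unknown methods early.
function buildTargetConfig(decisioningMethod) {
  if (!DECISIONING_METHODS.includes(decisioningMethod)) {
    throw new Error(`Unknown decisioningMethod: ${decisioningMethod}`);
  }
  return {
    client: "yourClientCode",             // placeholder, replace with your own
    organizationId: "yourOrgId@AdobeOrg", // placeholder, replace with your own
    decisioningMethod,
  };
}

console.log(buildTargetConfig("hybrid"));

// Usage (assumes @adobe/target-nodejs-sdk is installed):
// const TargetClient = require("@adobe/target-nodejs-sdk");
// const targetClient = TargetClient.create(buildTargetConfig("on-device"));
```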

If the rules.json file hasn’t been previously cached, or you haven’t specified an artifactLocation value within targetGlobalSettings, the SDK will make a network request to retrieve the file:

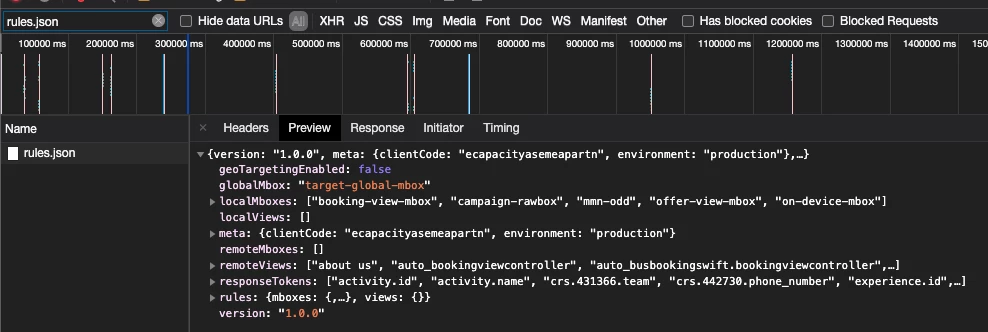

There are a couple of different options available, in terms of at what point you retrieve and store the rules file, both of which are described in detail here. The rules artifact contains all of the necessary information needed by the SDK to serve the Target content:

Once you have retrieved and cached the rules artifact, the SDK will fetch the information from the cached file, which removes the need for making a network request and therefore ultimately gets content back to the user’s browser more quickly.
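One way to approach the caching step is sketched below: reuse a rules file saved on disk if there is one, and save the artifact whenever the SDK downloads a fresh copy. This assumes the Node.js SDK accepts an `artifactPayload` option and emits an `artifactDownloadSucceeded` event carrying `event.artifactPayload`; the helper names and the cache path are hypothetical, so verify the option and event names against the SDK documentation.

```javascript
const fs = require("fs");

// Returns { artifactPayload } if a cached rules file can be read, otherwise {}
// so that the SDK falls back to fetching the artifact over the network.
function artifactOptions(cachePath) {
  try {
    return { artifactPayload: JSON.parse(fs.readFileSync(cachePath, "utf8")) };
  } catch (err) {
    return {}; // missing or unreadable cache
  }
}

// Returns SDK event handlers that persist each downloaded artifact to disk.
function cacheArtifactOnDownload(cachePath) {
  return {
    artifactDownloadSucceeded: (event) => {
      fs.writeFileSync(cachePath, JSON.stringify(event.artifactPayload));
    },
  };
}

// Usage (assumes the SDK is installed; "./rules.json" is a hypothetical path):
// const targetClient = TargetClient.create({
//   client: "yourClientCode",
//   organizationId: "yourOrgId@AdobeOrg",
//   decisioningMethod: "on-device",
//   ...artifactOptions("./rules.json"),
//   events: cacheArtifactOnDownload("./rules.json"),
// });
```

Writing the file synchronously keeps the sketch short; in a busy server you would probably prefer the asynchronous `fs` APIs.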

So that’s it for now — hopefully the information in this article has answered some of the questions that you might have had. As a reminder, there is a lot of useful info on Adobe’s Target SDKs here, as well as sample code to get you up and running here and here.

Originally published: Dec 01, 2020