Authors: Lavinia Lungu, Calin Dragomir, Costel-Cosmin Ionita, and Alexandru Gabriel Ghergut

As part of Adobe Experience Platform, Audience Manager is one of the most powerful data management platforms used by marketers to collect, manage and activate audiences across multiple channels. It is purpose-built to create meaningful real-time customer experiences.

Audience Manager collects over 30 billion requests per day from our customers' websites. This scale cannot be achieved without a globally distributed edge infrastructure that is able to perform data collection and real-time segmentation. The deployment consists of over 700 EC2 instances spread across 8 AWS regions.

Marketers can manage their audiences through a web portal, which is backed by a cloud-hosted, non-distributed MySQL datastore. We refer to the data stored in this MySQL instance as metadata because it directly relates to audiences and customers. That metadata needs to reach the edge infrastructure in order to reflect any updates in the customer profiles or audience configurations during data collection.

In this post, we describe how we migrated from the legacy metadata propagation system to a new, more efficient one built on Hollow.

Existing Challenges

The current approach of collecting and sharing metadata was successfully used for a couple of years, and it proved to be stable enough for our use-cases. There are 3 distinct components of this setup (all of them are JVM based):

- Uploader: The process in charge of extracting the datasets from MySQL and converting them into flat CSV files, which are uploaded to a regional S3 bucket. This process is deployed in the same region as the MySQL instance.

- Downloader: The side-car process installed on each EC2 instance, responsible for downloading the latest CSV files to the local disk. It does this periodically by checking a control file, called the manifest, which the uploader updates on each production cycle.

- Reloader: The per-region process that orchestrates the reload procedure for each cluster. It calls a dedicated HTTP reload endpoint on each instance, making sure that at any given time only a fixed percentage of the instances are reloading their configurations. We need this control because an instance does not accept new requests while it reloads (due to some internal constraints), so we always want to have a predictable capacity.
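The reloader's capacity rule can be sketched roughly as follows. This is only an illustration, not the actual reloader: the class name, the `planBatches` helper, the instance IDs, and the 20% cap are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

class RollingReload {

    /**
     * Splits the instance list into batches so that at most maxReloadingFraction
     * of the cluster is reloading (and therefore not serving) at any one time.
     */
    static List<List<String>> planBatches(List<String> instances, double maxReloadingFraction) {
        // Reload at least one instance per batch, otherwise small clusters never progress.
        int batchSize = Math.max(1, (int) Math.floor(instances.size() * maxReloadingFraction));
        List<List<String>> batches = new ArrayList<>();
        for (int start = 0; start < instances.size(); start += batchSize) {
            int end = Math.min(start + batchSize, instances.size());
            batches.add(new ArrayList<>(instances.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        // Hypothetical instance IDs, for illustration only.
        List<String> cluster = List.of("i-01", "i-02", "i-03", "i-04", "i-05",
                                       "i-06", "i-07", "i-08", "i-09", "i-10");
        // With a 20% cap, ten instances are reloaded two at a time.
        for (List<String> batch : planBatches(cluster, 0.2)) {
            // A real reloader would call each instance's HTTP reload endpoint here
            // and wait for the batch to finish before moving on.
            System.out.println("reload batch: " + batch);
        }
    }
}
```

Processing the batches strictly one after another keeps the serving capacity at or above 80% of the cluster in this example, at the cost of a longer total reload time.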

Here is the existing system in one picture:

Figure 1: Architecture of the existing metadata propagation system

This approach is very stable and resilient (being based on S3 as the main data-store), but it also has some downsides:

- Latency: The entire process, from the moment when the data is written into MySQL until it reaches the memory of all the data collection servers (globally), takes around 3 to 4 hours in total

- Memory Usage: For most datasets, the CSV files are loaded into memory directly, without any kind of optimizations

- Downloader: For new services (that are usually deployed in Kubernetes) the downloader adds some overhead because it needs to be deployed as a side-car container (new image, new volume to share the metadata)

- Debugging: It is quite hard to follow an update that happened at some point in time (we need to search through files in S3); also doing a rollback to a previous version of the metadata is not a trivial process

Why did we choose Hollow?

With this architecture and reload mechanism, the system had reached its limits and was getting slower and slower.

Looking into alternative approaches, we came across Hollow, an open-source library and toolset designed for managing in-memory datasets, which looked like a lifeboat for our sinking ship. Its single-producer, many-consumers design fit our use case perfectly. Our datasets were also within the recommended size range: we currently load around 5 GB of data, which matches Hollow's target of gigabytes rather than terabytes or petabytes. Its high performance under read-only access and its highly optimized resource management were also a step up from our previous state.

Figure 2: Hollow strong points

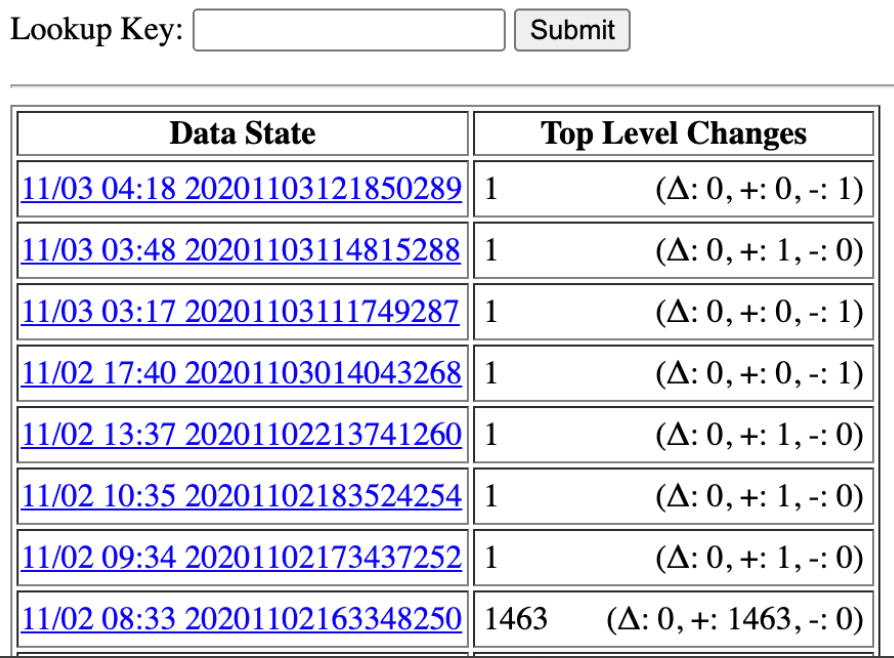

One big win for Hollow was the fact that it solved our reload procedure. Even though Hollow follows the same pattern for loading the data (using S3 as an intermediate storage step for snapshots), it does that in a much more efficient manner and with a smaller memory footprint than we had before. Hollow uses delta updates on each production cycle, which is a major strong point because in that way we reduce the reload time from around 4 hours to under 1 minute (configurable).
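To make the idea of a delta concrete, here is a conceptual sketch that compares two full states keyed by primary key and keeps only what changed. It is not how Hollow encodes deltas internally (Hollow uses its own compact binary format); the `StateDelta` class and the sample client names are assumptions made for the example.

```java
import java.util.Map;
import java.util.Objects;
import java.util.TreeMap;

class StateDelta {
    final Map<Integer, String> added = new TreeMap<>();
    final Map<Integer, String> modified = new TreeMap<>();
    final Map<Integer, String> removed = new TreeMap<>();

    /** Compares two full states keyed by primary key and records only what changed. */
    static StateDelta between(Map<Integer, String> previous, Map<Integer, String> current) {
        StateDelta delta = new StateDelta();
        for (Map.Entry<Integer, String> e : current.entrySet()) {
            if (!previous.containsKey(e.getKey())) {
                delta.added.put(e.getKey(), e.getValue());
            } else if (!Objects.equals(previous.get(e.getKey()), e.getValue())) {
                delta.modified.put(e.getKey(), e.getValue());
            }
        }
        for (Map.Entry<Integer, String> e : previous.entrySet()) {
            if (!current.containsKey(e.getKey())) {
                delta.removed.put(e.getKey(), e.getValue());
            }
        }
        return delta;
    }

    /** Number of records a consumer has to touch to move from previous to current. */
    int size() {
        return added.size() + modified.size() + removed.size();
    }

    public static void main(String[] args) {
        Map<Integer, String> v1 = Map.of(1, "Acme", 2, "Globex", 3, "Initech");
        Map<Integer, String> v2 = Map.of(1, "Acme", 2, "Globex Corp", 4, "Umbrella");
        StateDelta delta = StateDelta.between(v1, v2);
        // prints: added={4=Umbrella} modified={2=Globex Corp} removed={3=Initech}
        System.out.println("added=" + delta.added
                + " modified=" + delta.modified
                + " removed=" + delta.removed);
    }
}
```

When only a small fraction of records changes between cycles, a consumer that applies such a delta does far less work than one that reloads the full dataset, which is the intuition behind the shorter reload time.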

The data modeling capabilities of Hollow were another strong point we wanted to take advantage of. We can easily shape our models and generate APIs for accessing the data. We can also index the data to speed up queries based on our access patterns, and, together with the filtering feature, reduce the retrieved dataset to the essential information without any additional boilerplate.

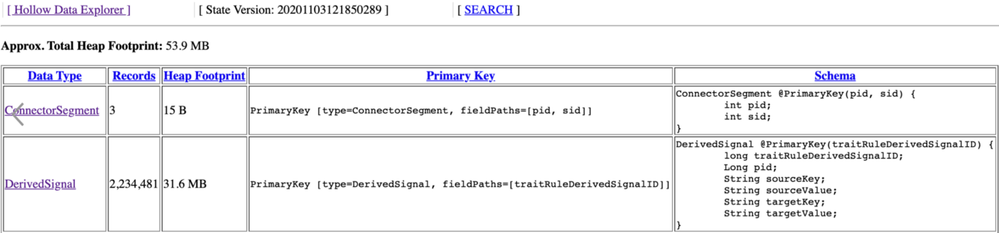

Hollow comes with a set of observability tools that can be easily integrated into our stack, mostly because they can be accessed via HTTP. They provide easy access to data on a per-dataset basis (with search and filtering capabilities), as well as traceability. We are able to see how the datasets evolve and, ultimately, to pinpoint the state that introduced a bug.

Adoption Plan

The experiments we conducted with Hollow were quite successful, so we decided to take a step forward and make a plan to integrate it into our components. There were some interesting challenges that we encountered during the design phase:

- The integration test suite of our project was quite opinionated on the existing flat-CSV metadata setup, so we had to find a way to integrate Hollow without rewriting the entire testing framework

- We wanted to design a seamless client-side experience with a decent amount of configurability, but abstract enough so we can hide internal details from our clients

- We wanted to build a standardized way of interacting with Hollow primitives (e.g., building the Hollow Consumer, setting up the Announcement Watcher), so we decided to create a common library around them, configurable via Typesafe Config

We decided to create a single repository with the following structure (Gradle modules):

- Commons: A place where the models for each dataset are stored, as well as the Hollow-generated API

- Consumer: Contains indexes for each dataset, as well as contracts (interfaces) that are meant to be used by the client code

- Producer: A full-fledged Spring Boot app that periodically queries the source of truth (MySQL) and publishes the new state of the datasets.

In terms of data modeling, we’re still keeping the flat structure as we already have in the CSV files, in order to minimize the changes required on the client-side, but now we have a more flexible framework that we can evolve over time.

As an example, an existing CSV model with the following structure:

    ClientId,ClientName,Enabled

will be modeled as a simple data class that looks like this (with the associated getter and setter methods):

    import com.netflix.hollow.core.write.objectmapper.HollowPrimaryKey;

    // clientId identifies each record uniquely within the dataset
    @HollowPrimaryKey(fields="clientId")
    public static class ClientProfile {
        public Integer clientId;
        public String clientName;
        public boolean enabled;
    }
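The mapping from a CSV row to this model could look roughly like the sketch below. It is an illustration only: the `parseRow` helper is hypothetical, the Hollow annotation is left out so the example compiles without the Hollow dependency, and it assumes that the Enabled column holds `true` or `false` and that fields contain no quoted commas.

```java
class CsvToModel {

    // Mirrors the ClientProfile fields above, without the Hollow annotation.
    static class ClientProfile {
        Integer clientId;
        String clientName;
        boolean enabled;
    }

    /** Parses one "ClientId,ClientName,Enabled" row (no quoting or escaping handled). */
    static ClientProfile parseRow(String row) {
        // The -1 limit keeps trailing empty columns instead of dropping them.
        String[] cols = row.split(",", -1);
        if (cols.length != 3) {
            throw new IllegalArgumentException("expected 3 columns, got " + cols.length);
        }
        ClientProfile profile = new ClientProfile();
        profile.clientId = Integer.valueOf(cols[0].trim());
        profile.clientName = cols[1].trim();
        // Assumption: the flag is serialized as "true" / "false".
        profile.enabled = Boolean.parseBoolean(cols[2].trim());
        return profile;
    }

    public static void main(String[] args) {
        ClientProfile p = parseRow("42,Acme,true");
        // prints: 42 Acme true
        System.out.println(p.clientId + " " + p.clientName + " " + p.enabled);
    }
}
```

Keeping one field per CSV column is what lets the client code stay almost unchanged while the storage format underneath moves to Hollow.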

And on the consumer side, we have indexes (built using the auto-generated API) that query through those types of records:

    private var index = HashIndex(consumer, true, "ClientProfile", "", "clientId.value")

So far, this development model has worked well for our use cases.
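The split between indexes and client-facing contracts can be pictured with a plain-Java stand-in. This sketch does not use the Hollow API: a `HashMap` plays the role of the index, and the `ClientProfiles` interface, its methods, and the sample records are hypothetical names chosen for the example.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

class ClientLookupSketch {

    /** Hypothetical contract exposed to client code; it hides how records are stored and indexed. */
    interface ClientProfiles {
        Optional<String> nameOf(int clientId);
        boolean isEnabled(int clientId);
    }

    /** Plain stand-in for a ClientProfile record. */
    static class ClientProfile {
        final int clientId;
        final String clientName;
        final boolean enabled;

        ClientProfile(int clientId, String clientName, boolean enabled) {
            this.clientId = clientId;
            this.clientName = clientName;
            this.enabled = enabled;
        }
    }

    /** In-memory implementation: a hash map plays the role of the index on clientId. */
    static class IndexedClientProfiles implements ClientProfiles {
        private final Map<Integer, ClientProfile> byClientId = new HashMap<>();

        IndexedClientProfiles(List<ClientProfile> records) {
            for (ClientProfile record : records) {
                byClientId.put(record.clientId, record);
            }
        }

        @Override
        public Optional<String> nameOf(int clientId) {
            return Optional.ofNullable(byClientId.get(clientId)).map(p -> p.clientName);
        }

        @Override
        public boolean isEnabled(int clientId) {
            ClientProfile profile = byClientId.get(clientId);
            // Unknown clients are treated as disabled in this sketch.
            return profile != null && profile.enabled;
        }
    }

    public static void main(String[] args) {
        ClientProfiles profiles = new IndexedClientProfiles(List.of(
                new ClientProfile(42, "Acme", true),
                new ClientProfile(7, "Globex", false)));
        System.out.println(profiles.nameOf(42).orElse("unknown") + " enabled=" + profiles.isEnabled(42));
    }
}
```

Because client code depends only on the interface, the implementation behind it can be swapped from a CSV-backed map to a Hollow-backed index without touching the callers.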

The producer, on the other hand, is a bit more involved. Initially, we designed it to simply query MySQL periodically and produce new states from the results (one model per query). But since change is the only constant in any tech business, we had to adapt it to support multiple sources of truth.

So now, besides querying MySQL, it also calls some REST APIs to fetch metadata that needs to be produced (in a reactive way, via Kotlin Flow), so it looks like this:

Figure 3: Hollow Producer integration into our ecosystem

For each query we run against a source of truth, the producer creates a Hollow model, which is then transferred to consumers/clients. This is what worked best for us, but depending on your data model, it is also possible to construct a single Hollow model with data pulled from multiple sources of truth.

Another benefit we considered for the migration was the observability provided by the existing tools within Hollow. Inspecting the changes made to a dataset at specific time intervals, or grabbing the current configuration, was tricky with the old approach. The difficulty would only increase as other sources of truth were added, since each has its own internal way of tracking changes.

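Each Hollow consumer can be attached to the following tools, exposed via an HTTP server:

- Hollow Explorer: a UI for browsing and searching the records of a dataset

- Hollow History: a UI for tracking how a dataset changed across states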

Both of them use the Hollow format as an abstraction layer providing a unified way of viewing configurations regardless of the original sources of data they were pulled from.

Next Steps

Even though the adoption process was quite bumpy, the overall experience in working with Hollow was really great.

The only limitation that we found was related to the way the Hollow Producer works. On each production cycle, we always need to provide the data gathered from all sources of truth, and the producer figures out the deltas. This becomes problematic when you have a source of truth that might fail (REST APIs, for example), so we had to find a way to publish datasets independently. More in the next post!
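The sketch below illustrates why this is a limitation, using a naive all-or-nothing cycle. It is not the solution the authors adopted and it does not use the Hollow API: the `gather` helper, the source names, and the record values are assumptions made for the example.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Supplier;

class AllOrNothingCycle {

    /**
     * Gathers every dataset needed for one production cycle. If any source fails,
     * the whole cycle is skipped, because publishing a partial state would make the
     * missing records look like deletions to the consumers.
     */
    static Optional<Map<String, List<String>>> gather(Map<String, Supplier<List<String>>> sources) {
        Map<String, List<String>> state = new LinkedHashMap<>();
        for (Map.Entry<String, Supplier<List<String>>> source : sources.entrySet()) {
            try {
                state.put(source.getKey(), source.getValue().get());
            } catch (RuntimeException e) {
                // One failing source blocks the publication of every other dataset.
                return Optional.empty();
            }
        }
        return Optional.of(state);
    }

    public static void main(String[] args) {
        Map<String, Supplier<List<String>>> sources = new LinkedHashMap<>();
        sources.put("mysql", () -> List.of("client-1", "client-2"));
        sources.put("rest", () -> {
            throw new IllegalStateException("REST API unavailable");
        });
        // prints: publish? false
        System.out.println("publish? " + gather(sources).isPresent());
    }
}
```

In this naive setup, a flaky REST API delays fresh MySQL metadata as well, which is the kind of coupling that publishing datasets independently is meant to avoid.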

Follow the Adobe Experience Platform Community Blog for more developer stories and resources, and check out Adobe Developers on Twitter for the latest news and developer products. Sign up here for future Adobe Experience Platform Meetups.

Originally published: Jan 28, 2021
