Adopting Modern CI/CD Practices for Adobe Experience Platform Pipeline

Authors: Pranay Kumar and Douglas Paton

In this post, we continue our look at Adobe Experience Platform Pipeline. In the first two parts of this series, we looked at in-flight processing in Pipeline, as well as how we used Kafka to create Pipeline. This time, we look at the modern CI/CD practices adopted by Adobe Experience Platform Pipeline, including our choice of implementation and how these practices have helped us so far. We also look at how automation has helped streamline the entire process.

Adobe Experience Platform Pipeline is the messaging bus that uses Kafka to connect solutions. It provides geographically distributed managed Kafka clusters with an HTTP API wrapper. It serves over a dozen different locations, some with multiple clusters, and enables us to route data from one to another based on certain rules and topic metadata.

These geo-locations are a heterogeneous mix of infrastructure, including AWS, Azure, and our own private data centers. These systems are made up of virtual machines, managed Kubernetes clusters, and bare-metal servers. In addition to this, we have a growing set of microservices that make up our ecosystem.

We had automation in place to help manage deploys and remove some of their manual aspects. But the system was old. It required a lot of manual intervention, and deploys would take hours, if not an entire day. It also needed a dedicated engineering and SRE resource.

There was a growing need for a process that could be consistent across the five environments we had in play, and that would operate in the dozen-plus clusters that we had in prod. It also had to operate in the different infrastructures we used: Adobe data centers, AWS, and Azure. This called for a solution that was generic across all applications.

Our goal was to simplify the process and reduce the number of manual steps and the amount of time it took to accomplish them. Ultimately, we wanted a more predictable process that took less time, required very little manual intervention, and was consistent across all platforms.

The first thing we needed to do, however, was put together a technology solution that was built for this purpose. When we started working on this problem, the tech that we had was cobbled together. It gave us the ability to create automations, but it was far from efficient.

A look at Adobe Experience Platform Pipeline tech stack

To implement CI/CD in Adobe Experience Platform Pipeline, we needed to update our tech stack. This required us to shift our thinking about how we approached the problem. We decided that containerized applications, using Docker- and Kubernetes-based solutions, would help us solve the problems we were having in the most efficient way.

To begin with, we were already using containerization across a variety of platforms, including Adobe Experience Platform. We wanted to propagate that across our entire footprint to replace our bare-metal systems and VMs. We chose Kubernetes because it operates across the various services that we worked with, such as AWS and Azure. It provided us with the consistent platform we needed across geo-locations and clouds.

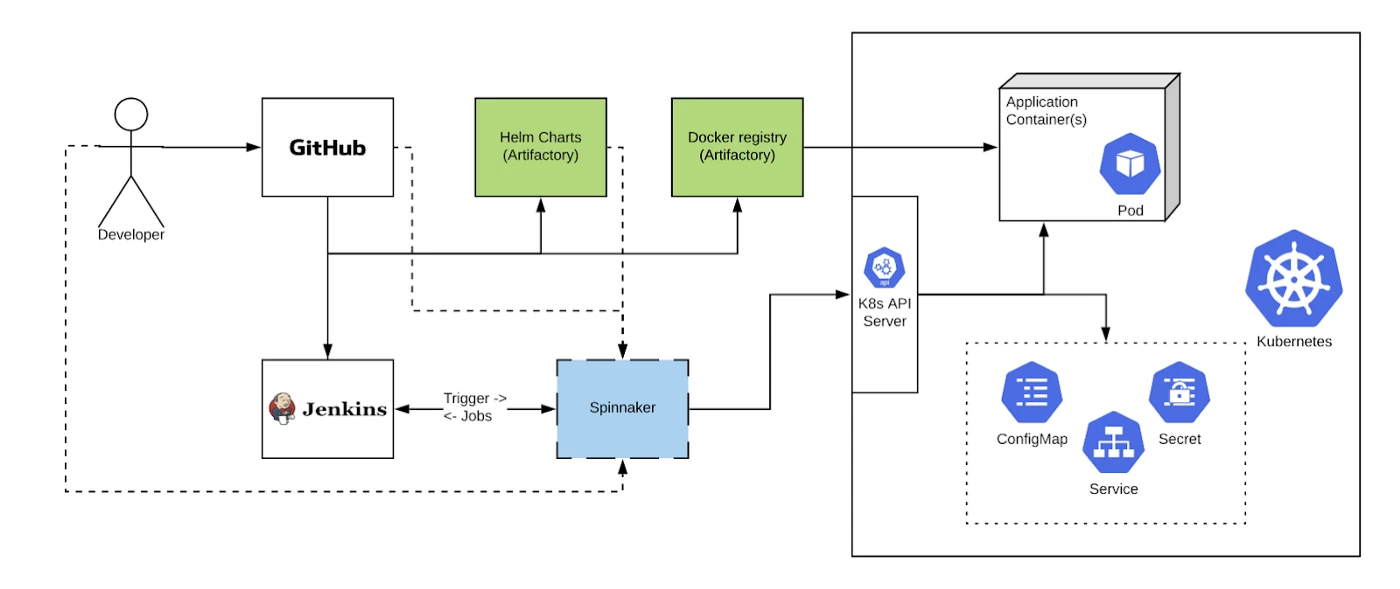

The updated stack is described below:

- Git — for static code and configurations

- Docker — for the containerization we use in the process

- Helm — for creating templates for the Kubernetes manifests

- Artifactory — for binaries such as Helm charts and Docker images

- Jenkins — for continuous integration and automation

- Spinnaker — for continuous deployment platform

- Kubernetes — for container deployment

While Spinnaker is largely the brains behind everything, Jenkins is the brawn. Jenkins runs builds for code and configuration pull requests (PRs) raised in Git. It also performs tasks such as generating and uploading artifacts when required and running test suites against targeted environments.

Kubernetes manages all deployed and runtime resources. We use managed Kubernetes solutions on cloud platforms because they reduce our time to delivery and maintenance overhead.

Continuous deployment using Spinnaker

Spinnaker is the continuous deployment platform that orchestrates our workflows. All triggers, both manual and automated, lead into a Spinnaker pipeline that determines what happens next in the sequence. We use Spinnaker pipelines for applications because of their versatility. Spinnaker knows how to connect to, trigger, or deploy to all the tools within the infrastructure.

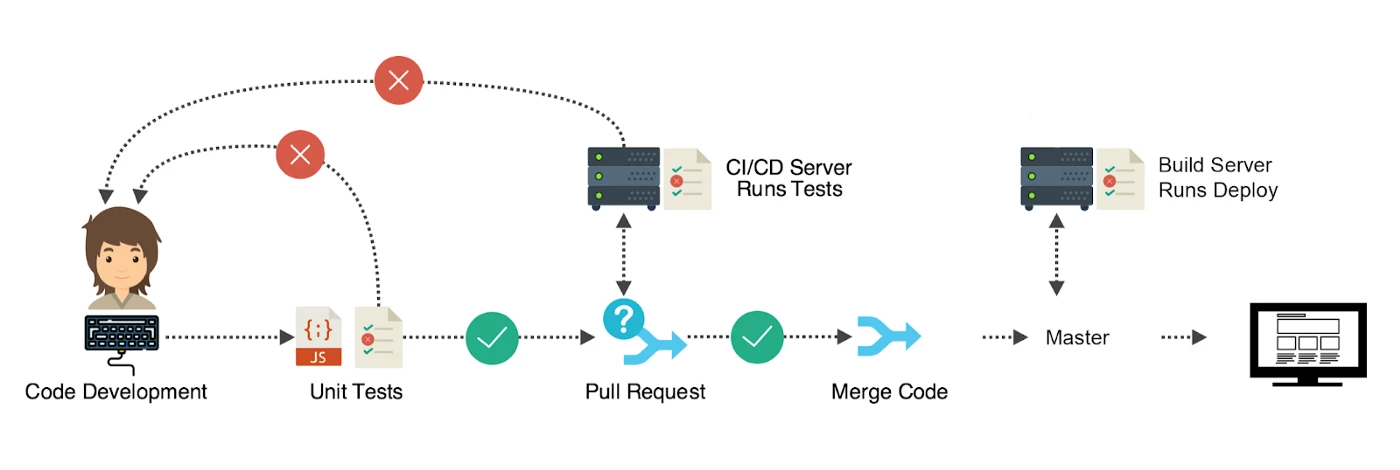

Figure 2 shows a typical workflow. A developer raises a PR, which triggers a Jenkins job that does a build, including unit and basic integration tests. If successful and merged, a commit to certain branch(es) triggers another Jenkins job. This triggers a Spinnaker pipeline. Spinnaker supports baking Helm chart artifacts pulled from Artifactory, along with any values stored in git, resulting in the Kubernetes manifest.
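To make the bake step more concrete, here is a minimal Python sketch of how layered values could be combined before a chart is rendered. It mimics the way Helm lets later values sources override earlier ones, merging maps key by key and replacing everything else. The keys and values used here (`image.tag`, `replicas`, the `example/app` repository) are hypothetical and are not taken from our charts.

```python
from copy import deepcopy


def merge_values(base: dict, override: dict) -> dict:
    """Return base with override layered on top (maps merge, other values replace)."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical chart defaults and per-environment values kept in git.
chart_defaults = {"image": {"repository": "example/app", "tag": "latest"}, "replicas": 1}
prod_values = {"image": {"tag": "1.4.2"}, "replicas": 6}

rendered_inputs = merge_values(chart_defaults, prod_values)
print(rendered_inputs)
```

Keeping the chart in Artifactory and the per-environment values in git means a values change goes through the same PR review as a code change.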

Kubernetes can have multiple resources applied via a single generated manifest or the pipeline can generate them in separate stages and deploy them individually. Spinnaker has native support for Kubernetes, which allows for easy integration and deployment to the Kubernetes cluster(s). We can also add various stages to the Spinnaker pipeline where user input or validation post-deployment may be desired.

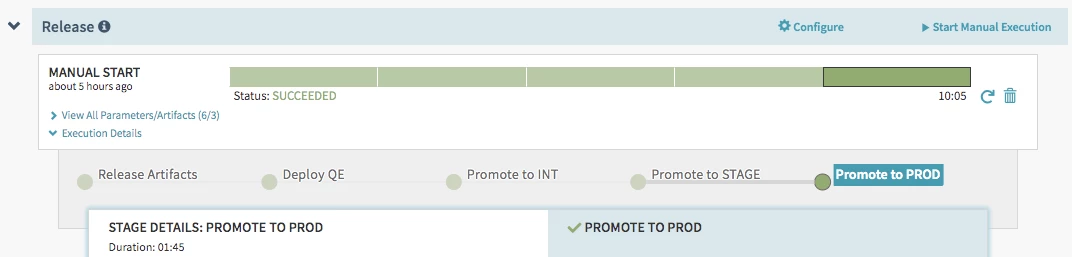

Releasing to production: We follow a general template for a Spinnaker pipeline that releases an application across multiple environments, all the way to production. First, we trigger a child pipeline that releases the artifacts. This pipeline has two Jenkins job stages that run in parallel to release two types of artifacts: a Docker image and a Helm chart. From there, we trigger a deployment pipeline for each environment in sequence, starting with Development and ending with Production. Each of these deployments is a separate child Spinnaker pipeline, as described above. The separate deployment pipelines help avoid unintended deploys to the wrong environment. They also give each environment the flexibility to either share a Kubernetes cluster or deploy to its own.
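The shape of this release workflow can be sketched in a few lines of Python. This is only an illustration of the ordering (parallel artifact release, then sequential promotion), not Spinnaker configuration. The function names, the artifact naming, and the three-environment list are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical environment names, listed in promotion order.
ENVIRONMENTS = ["development", "stage", "production"]


def release_artifact(kind: str, version: str) -> str:
    """Stand-in for a Jenkins job stage that publishes one artifact."""
    return f"{kind}:{version}"


def deploy(environment: str, artifacts: list[str]) -> str:
    """Stand-in for the child deployment pipeline of one environment."""
    return f"deployed {', '.join(artifacts)} to {environment}"


def run_release(version: str) -> list[str]:
    # Stage 1: release both artifact types in parallel.
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(release_artifact, kind, version)
                   for kind in ("docker-image", "helm-chart")]
        artifacts = [future.result() for future in futures]
    # Stage 2: promote through the environments one at a time, in order.
    return [deploy(environment, artifacts) for environment in ENVIRONMENTS]


log = run_release("1.4.2")
print("\n".join(log))
```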

Auto-deployments: Spinnaker pipelines can be triggered manually by a user or by external systems, such as Jenkins. We automated our CI/CD by having commits to certain branches in git trigger a Jenkins job, following a workflow similar to Figure 2. This job extracts certain values and triggers the desired Spinnaker pipeline with those properties. Spinnaker then uses these properties to execute the desired workflow, without any human involvement. This brings us to the state of continuous deployment that we were aiming for.
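As a rough illustration of the job that sits between git and Spinnaker, the sketch below maps a commit to a pipeline name and a set of properties. The branch names, pipeline names, and property keys are all hypothetical. The actual call that hands these properties to Spinnaker is deliberately left out.

```python
from typing import Optional

# Hypothetical mapping from git branch to the Spinnaker pipeline it triggers.
BRANCH_PIPELINES = {
    "develop": "deploy-development",
    "master": "release-to-production",
}


def build_trigger(branch: str, commit: str, version: str) -> Optional[dict]:
    """Return the pipeline and properties for a commit, or None if the branch is not automated."""
    pipeline = BRANCH_PIPELINES.get(branch)
    if pipeline is None:
        return None
    return {
        "pipeline": pipeline,
        "properties": {"VERSION": version, "GIT_COMMIT": commit, "GIT_BRANCH": branch},
    }


print(build_trigger("develop", "abc1234", "1.4.2"))
print(build_trigger("feature/x", "def5678", "1.4.3"))
```

Commits to branches that are not in the mapping produce no trigger, so feature branches never deploy on their own.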

Deployment strategies and rollbacks

Versioning: This is key to implementing the following strategies in a controlled fashion. We version all our artifacts because it allows us to use any version on demand. It also helps distinguish manifests.

Red/Black deployments: Red/Black deployments use two separate but identical production environments, red and black. This approach reduces downtime and risk because only one environment is active at a time. We typically use it for stateless applications, where it allows zero-downtime releases with no impact on live traffic. We control this by switching the selectors on the Kubernetes Service(s) that expose the applications. Spinnaker applies multiple manifests: first to create a new Deployment resource that spins up pods with the new application version, then to update the Service and its selectors to point to the new pods. Finally, we clean up any existing resources that are no longer used.
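The selector switch can be sketched with plain Python dictionaries that mirror the Kubernetes manifests involved. The application name `ingest-api`, the `version` label, and the image tags are hypothetical. In practice these manifests come from our Helm charts rather than from code like this.

```python
def deployment_manifest(app: str, version: str, image: str, replicas: int) -> dict:
    """A Deployment whose pods carry a version label the Service can select on."""
    labels = {"app": app, "version": version}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{app}-{version}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": app, "image": image}]},
            },
        },
    }


def service_manifest(app: str, active_version: str, port: int = 80) -> dict:
    """A Service that routes only to pods of the active version."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            "selector": {"app": app, "version": active_version},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


# Step 1: create the new Deployment next to the old one (hypothetical names).
new_deployment = deployment_manifest("ingest-api", "v2", "example/ingest-api:2.0.0", 3)
# Step 2: flip the Service selector from v1 to v2.
before = service_manifest("ingest-api", "v1")
after = service_manifest("ingest-api", "v2")
print(before["spec"]["selector"], "->", after["spec"]["selector"])
```

Because the old Deployment is untouched until cleanup, switching back is just a matter of applying the Service manifest with the previous version again.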

Canary deployments: These are deployments released to a small subset of users by updating a limited number of the containers serving traffic to the new version and validating it before a wider release. The goal is to use these "canaries" to identify issues early. In stateless applications, this is possible by manipulating the Kubernetes Service resource that points to the existing version of the application Deployment while scaling up a new version of the Deployment to control traffic flow. Essentially, the Service selectors should match the labels of the Deployments where you'd like the traffic to flow. The existing Deployment is scaled down once the new Deployment version has an equivalent number of pods.
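To get a feel for how pod counts translate into canary exposure, here is a small sketch. It assumes the Service selects pods from both Deployments and that requests are spread roughly evenly across pods, which is a simplification. The replica counts are made up for the example.

```python
def canary_share(stable_pods: int, canary_pods: int) -> float:
    """Approximate fraction of requests reaching the canary, assuming an even spread across pods."""
    total = stable_pods + canary_pods
    if total == 0:
        raise ValueError("no pods are serving traffic")
    return canary_pods / total


# Hypothetical rollout: scale the new Deployment up, then scale the old one down.
stable = 10
for canary in (1, 5, 10):
    print(f"stable={stable} canary={canary} share={canary_share(stable, canary):.0%}")
print(f"stable=0 canary=10 share={canary_share(0, 10):.0%}")
```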

For stateful applications, canary deployments can be done by applying the Kubernetes StatefulSet resource spec to the cluster and namespace, with a limited number of pods specified for updating. Those pods must be restarted to pick up the new spec, after which the canary is validated. Once it is validated, Spinnaker applies the new spec so that the changes propagate to all pods in the StatefulSet.
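One mechanism Kubernetes offers for limiting which StatefulSet pods are updated is the `partition` field of the `RollingUpdate` strategy: only pods with an ordinal greater than or equal to the partition receive the new spec. The sketch below assumes that mechanism purely for illustration. It is not a copy of our manifests, and the StatefulSet name, container name, and image are hypothetical.

```python
def statefulset_canary_patch(image: str, container: str, partition: int) -> dict:
    """Patch body that changes the image but limits the rollout to ordinals >= partition."""
    return {
        "spec": {
            "updateStrategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"partition": partition},
            },
            "template": {"spec": {"containers": [{"name": container, "image": image}]}},
        }
    }


def pods_updated(name: str, replicas: int, partition: int) -> list[str]:
    """Names of the pods that receive the new spec for a given partition."""
    return [f"{name}-{ordinal}" for ordinal in range(partition, replicas)]


# Canary: with 5 replicas and partition=4, only the highest ordinal is updated.
patch = statefulset_canary_patch("example/broker:2.0.0", "broker", partition=4)
print(pods_updated("broker", replicas=5, partition=4))
# Full rollout after validation: partition=0 covers every pod.
print(pods_updated("broker", replicas=5, partition=0))
```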

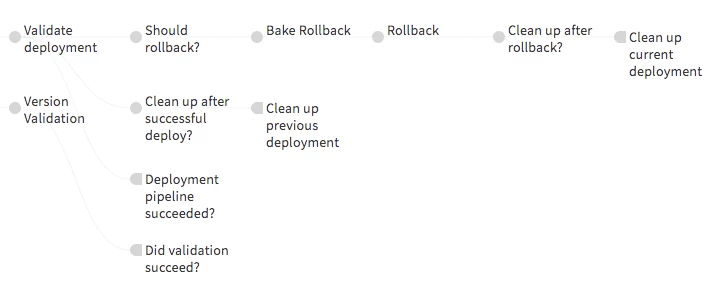

Rollback: Not everything goes as planned, and we need the ability to roll back when required. Much like with Red/Black deployments, rollback in a stateless app can be controlled through the Kubernetes Service resource by changing the selectors to point to a previously deployed Deployment resource. Since we control this using values and parameters, the Spinnaker pipeline doesn't need to track previous deployments or what to roll back to.

Stateful apps cannot simply be replaced, so to roll them back you need to update the StatefulSet spec (or, more specifically, the Docker image specified in it) and then restart the pods.

In either case, a user or an external trigger decides what version to roll back to and passes the desired parameters on to the Spinnaker pipeline.
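The parameter-driven nature of rollback can be sketched as a single function that turns a target version into the change to apply. The resource naming, the `version` label, and the `example` image repository are assumptions for illustration. The returned dictionary is a description of the change, not a Spinnaker or Kubernetes API payload.

```python
def rollback_change(kind: str, app: str, target_version: str, repository: str = "example") -> dict:
    """Describe the resource change that rolls `app` back to `target_version`."""
    if kind == "stateless":
        # Point the Service back at the pods of the previous Deployment.
        return {
            "resource": f"service/{app}",
            "patch": {"spec": {"selector": {"app": app, "version": target_version}}},
            "restart_pods": False,
        }
    if kind == "stateful":
        # Put the previous image back in the StatefulSet spec; the pods must then restart.
        image = f"{repository}/{app}:{target_version}"
        return {
            "resource": f"statefulset/{app}",
            "patch": {"spec": {"template": {"spec": {"containers": [{"name": app, "image": image}]}}}},
            "restart_pods": True,
        }
    raise ValueError(f"unknown application kind: {kind}")


print(rollback_change("stateless", "ingest-api", "v1"))
print(rollback_change("stateful", "broker", "1.9.0"))
```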

What we learned during this process

Spinnaker itself was a failure point for the process. Since we only had one Spinnaker instance to deploy everything, if it went down we'd have issues with ongoing and pending deployments or workflows.

We also needed an upgrade plan that would solve the problem of safely upgrading Spinnaker, without interrupting our deployments. We solved both problems by creating a backup data store and a backup Spinnaker instance. This allowed us to use the backup for development, experimentation, and emergencies. Only one instance is in live use at a time, to allow the passive instance to be easily upgraded and experimented on without causing problems with deployments. We can then swap the instances when required. The downside is you tend to lose execution history when doing this. As an example, we tested Auto Deployments with service accounts on the backup instance before rolling them out.

We ran into issues early on during our endeavor with Spinnaker and came upon a few shortcuts that helped us debug issues specifically related to Spinnaker pipeline stage failure from the user interface.

If the failed Spinnaker pipeline stage is a child pipeline, select View Pipeline Execution or, if you know which child pipeline is causing problems, you can navigate there manually. If a Jenkins stage has failed, click the job number link and look at the Console Output (this requires you to have access to the invoked Jenkins).

Errors will be listed in red in the details section. If you still don't have enough information at this stage, click on the Source link on the bottom right of this pipeline stage extension. Once you're into the JSON source, search for error, fail, and exception. This will help you find the issues that are causing the error.
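If the JSON source is large, the same search can be scripted. The sketch below walks a JSON document and reports every value that sits under a key containing one of the three keywords, plus any string that mentions one. The sample document is made up for the example and does not reproduce Spinnaker's actual execution schema.

```python
import json

KEYWORDS = ("error", "fail", "exception")


def find_suspects(node, path="$", flagged=False):
    """Yield (path, value) for leaves under a keyword key, or strings mentioning a keyword."""
    if isinstance(node, dict):
        for key, value in node.items():
            hit = flagged or any(word in key.lower() for word in KEYWORDS)
            yield from find_suspects(value, f"{path}.{key}", hit)
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from find_suspects(value, f"{path}[{index}]", flagged)
    elif flagged or (isinstance(node, str) and any(word in node.lower() for word in KEYWORDS)):
        yield path, node


# Made-up stage source, pasted from the UI for the purpose of the example.
source = json.loads("""
{
  "name": "Deploy",
  "status": "TERMINAL",
  "context": {
    "exception": {"details": {"errors": ["image pull timed out"]}},
    "tasks": [{"name": "deploy", "failed": true}]
  }
}
""")

suspects = list(find_suspects(source))
for location, value in suspects:
    print(location, "=", value)
```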

In terms of Spinnaker pipeline development, we learned that by modularizing and nesting Spinnaker pipelines we end up with an excellent pair of benefits:

- The ability to reuse these pipelines. You can design a Spinnaker pipeline to do something specific and that workflow can be used as a child pipeline in other workflows. For instance, the stage to release artifacts is a child pipeline we reuse in multiple deploy pipelines.

- Easy re-triggering of Pipeline stages in the process. If a stage is a child pipeline, and it fails, you have the option to restart it. If it wasn't a child pipeline and just another regular stage, you then have to restart the entire parent pipeline.

We started with ad-hoc pipelines that evolved over time into more mature and restrictive workflows, such as the release pipeline. While those align with standard procedures, we realized that there were exceptional instances that warrant certain ad-hoc, but still restrictive, pipelines for automation. Some examples include providing an immediate break/fix on a certain environment, patching during a release, or having to experiment or test on specific environments. These are useful in a pinch, as long as they are used properly and sparingly.

We intentionally avoided depending on the Spinnaker UI's built-in hooks and features for Kubernetes operations, to align better with our intent to use configuration as code. While we intend to use Spinnaker pipelines for most updates, we also wanted to control the generated manifests. This allowed us to decouple the workflows from Spinnaker, so they can also be executed easily without it.

Where we are today and where we're heading tomorrow

Before we started, we did not have a Kubernetes footprint and we had no Spinnaker setup. We started after the last Spinnaker conference and did a proof of concept to determine that these practices indeed solve our main pain points. Since then, we've used the updated stack for new applications and have migrated existing applications handling live traffic to this infrastructure and process. With the new process, deployments are merely a few clicks, including validations, and we've seen considerable time savings along with improved predictability. The benefit of reduced deploy time and effort means we've also seen improved productivity and a faster, more frequent deploy cadence.

We have also sped up our time to delivery on multiple new applications, in multiple geo-locations and clouds, that need to be deployed since the pattern is easy to reproduce. However, we've started noticing a clear need for templates that can further help us streamline the Spinnaker pipelines. Unfortunately, at the time we started aggressively building and adopting this process, Managed Pipeline Templates v2 wasn't completely ready. As we move forward, we plan to develop templates and incorporate them into both new and existing Spinnaker Pipelines. Eventually, we'd also like to track these in git for greater cooperation and ease of maintenance.

So far, we've only done this for a few services and in a few geo-locations. We plan to have this automated deployment process across our entire footprint. We also want to introduce more automation by reducing the manual validations and triggers still required today in our process all the way to production systems.

We will be sharing our experiences again at the Spinnaker Summit 2019 in San Diego from 11/15–11/17/2019. See you there.


References

- Adobe Experience Platform — https://www.adobe.com/experience-platform.html

- Adobe Experience Platform Pipeline — https://experienceleaguecommunities.adobe.com/t5/adobe-experience-platform-blogs/how-adobe-experience-platform-pipeline-became-the-cornerstone-of/ba-p/430569

- AWS — https://aws.amazon.com

- Azure — https://azure.microsoft.com/

- Docker — https://www.docker.com

- Jenkins — https://jenkins.io

- Kubernetes — https://kubernetes.io/

- Spinnaker — https://www.spinnaker.io/

- Helm — https://helm.sh/

- Kafka — https://kafka.apache.org

Originally published: Nov 4, 2019