On a recent call discussing use cases for Workfront Fusion, we mentioned that we currently export data from Workfront to Snowflake, which raised some questions as to "how". The answer is a bit complicated, so I wanted to post a more detailed dive here.

Disclaimer 1: We are aware that Adobe is currently evaluating, but not promising, Snowflake Secure Data Sharing (SDS) as a specific solution related to this area. While we are using Snowflake as our target data warehouse at the moment, please read this post more as a guide to exporting data from Workfront to any data warehouse or data storage location that doesn't have a built-in connector.

Disclaimer 2: There are likely lots of ways of doing this, each with their own pros and cons. I'm going to do my best to explain both what we did and why we did it, though, so you can evaluate what is right for your organization.

Disclaimer 3: This post is made for educational and entertainment purposes only. The author has made this post as an individual, not a representative of any company, and the views expressed as the author's alone. No warranties, implied or explicit, exist. Your mileage may vary. 🙂

Goal

Our primary goal was to export our critical data hosted within Workfront to an external location, including all the "CRUD" operations (create, update, delete), to help support cross-dataset reporting via Business Intelligent tooling such as Tableau. Our secondary goal was to setup a system that didn't have to constantly be touched as we did other Workfront administrative task such as adding new custom fields.

Challenges

- Historical objects will get updated with new data, either explicitly (through data updates) or implicitly (through new calculated fields being created). The solution must account for an ever-growing amount of data and do so gracefully.

- Not all object deletions and/or value updates are captured via Journal Reports, so reliability will be impacted if trying to use an "audit stream" to power this.

- Workfront APIs use offset-based pagination, which is well-characterized as having the potential to "return duplicate entries or skip entries if data is being simultaneously added or deleted from [Workfront]" (Modern Treasury).

Target Objects

We ultimately identified 14 critical objects that we wanted to ensure got exported: Assignments, Billing Records, Companies, Groups, Hours, Hour Types, Issues, Notes, Portfolios, Programs, Projects, Tasks, Timesheets, and Users. We then used the Workfront API Explorer to understand the data shape of each of these objects and come up with some patterns that could be followed.

Pagination

As Workfront uses offset-based pagination, we also needed to identify a strategy for how to query each of the objects in a way where the data being pulled is in as consistent of an order as possible. Sorting the queries on ID, for example, is problematic because ID is just a random value that has no intrinsic sorting as objects are created or deleted. Sorting on entryDate (typically the same as the object's creation date) works for those objects with such a field (not all objects have that), but hour objects leverage entryDate for the actual date of the time entry and have no corresponding creation date. Sorting on lastUpdated is incredibly problematic when users are in the system making changes.

In the end, we opted to do entryDate_1_Sort=asc and ID_2_Sort=asc for objects that support those fields and find the best acceptable alternative for objects that don't. Note that we know that data is likely lost or duplicated, so we address this via our next mechanism...

Full Data Dump

Whenever we dump an object, we dump everything -- all history available in the system as of the date when querying occurs. This addresses the "CRUD" problem where older objects get updated implicitly and other objects get deleted.

However, we are projected to have ~1 million hour entries per year; at this scale, we need to worry about memory usage of the Fusion scenario, data size, recovery of scenarios that hit the 45-minute max runtime, etc. This forces a strategy around an async processing queue.

Async Processing Queue

Data stores are magical things. They're MongoDB instances that can be used to implement crude work queues. We created a data store to track the object type, the number of pages expected to pull, and the last page number pulled. This then allowed us to pick up exporting from where we left off at the last run in a very direct manner.

Data Format

We chose to write data in JSON format with each batch being written to our SFTP server as a workaround for the offset-based pagination "duplication" issue that can occur. (Snowflake really does not like having duplicate records in the same bulk load.). JSON also allowed us to dump arbitrary Data Extension fields (fields=*,parameterValues:*) without having to specify things and store in Snowflake as a JSON object, which, again, meets our flexibility requirement.

Putting It All Together

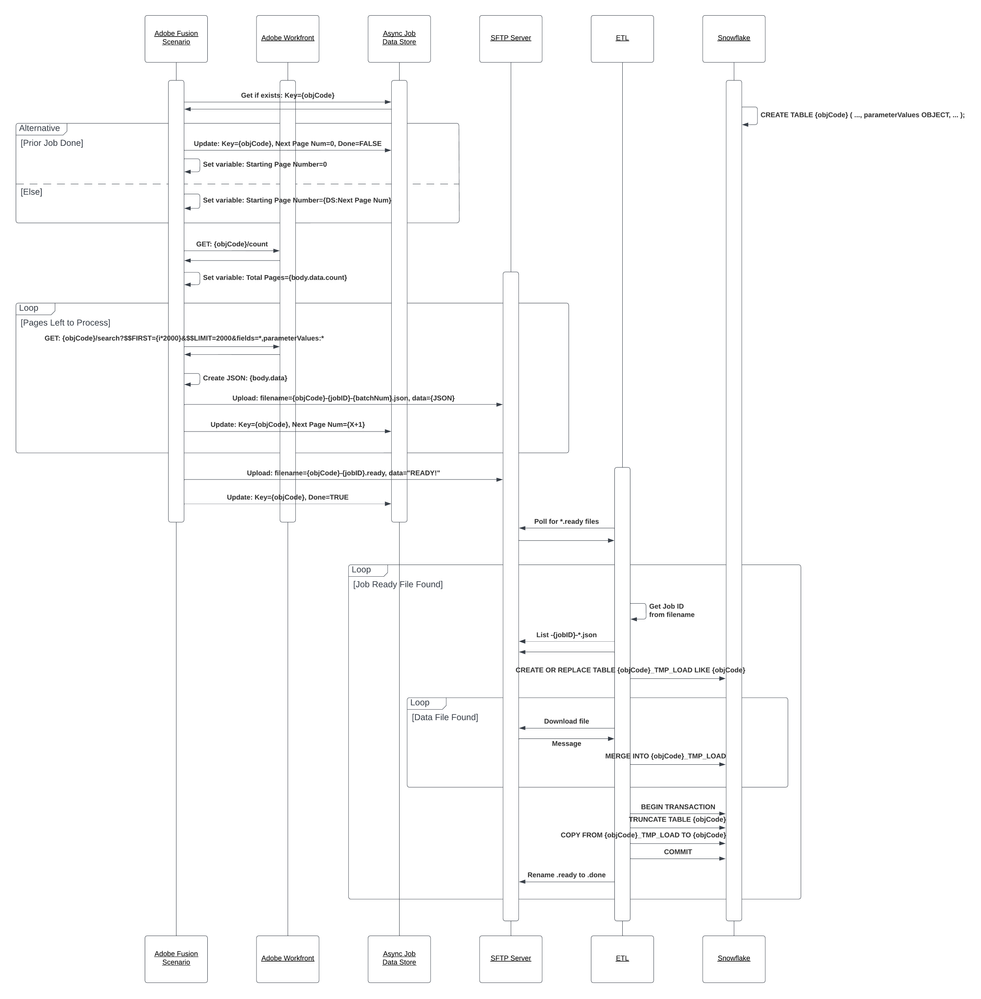

The following UML describes the end-to-end process used to get everything out of Workfront and into Snowflake. There are a lot of little details needed to get this working, of course, but it should give enough of a hint to get one started down that journey.

We ultimately used a third party ETL which has native SFTP-to-Snowflake capabilities to do the final load into Snowflake. In theory, one could do this with Fusion itself using Snowflake's native HTTP API calls,