Redundant data in dataset.

Hi,

I am ingesting data from an Amazon S3 bucket every 15 minutes, and I noticed that data that has already been ingested is being ingested again and again as separate rows in the dataset.

Is there a way to make sure that data already ingested is not ingested again?

Hi @arpan-garg, a few quick questions:

- In your data selection step, did you select a particular file or folder?

- How is the delta data fed into S3: is a new file added to a folder, or does an existing file get updated?

- What's the value of backfill?

Here's an important thing to look for - https://experienceleague.adobe.com/docs/experience-platform/sources/ui-tutorials/dataflow/cloud-stor...

Thanks,

Chetanya

Hi @ChetanyaJain - Yes, I selected a particular file while ingesting the data.

An existing file gets updated with the new values, but it still contains the old values as well.

One thing to note: when ingesting data from an S3 bucket, we don't see an option to select an incremental load field, so it backfills all the data again.

Hi @arpan-garg, that explains the duplication.

Because the file is being modified, the job loads the whole file again and re-ingests every record, since you cannot filter based on a field. See the note below:

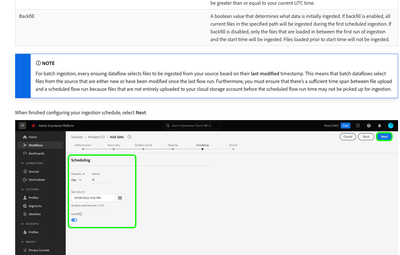

The best way to handle this is as follows:

- Select the folder instead of the file.

- Select the backfill option if you want to load the files that already exist in the folder; otherwise, skip it.

- Add a new file containing only the incremental changes to the folder.

- The job will automatically pick up the new files (based on the file modification timestamp and the job's last run time).
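To make the last point concrete, here is a minimal Python sketch of that selection rule. It only illustrates the behavior described above and is not Adobe's implementation; the function name `select_new_files` and the file keys are hypothetical.

```python
from datetime import datetime, timezone


def select_new_files(last_modified_by_key, last_run_time):
    """Return the keys whose modification time is later than the last run.

    Illustrative only: mimics "pick files modified since the last run".
    """
    return sorted(
        key
        for key, modified in last_modified_by_key.items()
        if modified > last_run_time
    )


# Hypothetical folder listing: object key -> last-modified timestamp (UTC).
last_run = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
folder = {
    "exports/full.csv": datetime(2024, 1, 1, 11, 50, tzinfo=timezone.utc),
    "exports/delta_1215.csv": datetime(2024, 1, 1, 12, 10, tzinfo=timezone.utc),
}

print(select_new_files(folder, last_run))
```

Under this rule, overwriting `exports/full.csv` would give it a newer timestamp, so the whole file, old rows included, would be selected again. That is why each increment should land in its own new file.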

Thank you, @ChetanyaJain, for the information. I will definitely give it a try. Based on this, it appears that data cleaning should be handled separately, and only new or updated data should be included in the new file. If a file with a new timestamp also contains the old data, AEP will ingest everything again.

That's right: any record that comes in will be re-ingested. So there are times when you must plan event ingestion very carefully. Once events are added, they cannot be modified.

So data preparation/preprocessing is necessary before ingesting the data? Dear Chetanya, is there any recommended approach to automate this? Any tools that could find the diff and create the necessary input?
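One possible way to build such a diff outside AEP is sketched below in Python. This is not an Adobe-provided tool. It assumes the export is a CSV with a header row, that a copy of the previously ingested snapshot is kept, and that a row counts as "already ingested" only if it matches an earlier row exactly. The function name `delta_rows` and the sample data are hypothetical.

```python
import csv
import io


def delta_rows(previous_csv: str, current_csv: str) -> str:
    """Return CSV text holding the header plus rows new in current_csv."""
    prev_reader = csv.reader(io.StringIO(previous_csv))
    next(prev_reader, None)  # skip the header of the previous snapshot
    seen = {tuple(row) for row in prev_reader}

    cur_reader = csv.reader(io.StringIO(current_csv))
    header = next(cur_reader, None)

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    if header is not None:
        writer.writerow(header)
    for row in cur_reader:
        key = tuple(row)
        if key not in seen:  # keep only rows not seen before
            writer.writerow(row)
            seen.add(key)  # also drops duplicates within the current file
    return out.getvalue()


# Hypothetical snapshots: the current export still contains the old rows.
previous = "id,event\n1,view\n2,click\n"
current = "id,event\n1,view\n2,click\n3,purchase\n"

print(delta_rows(previous, current))
```

The resulting text could then be written to a new, uniquely named file in the monitored folder, so that only the increment is picked up on the next run. A row that changed in any column is treated as new here, so it would be ingested as an additional record rather than an update.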
