@joerghoh - We currently do not have an AEM Publisher instance in our setup. Instead, we are using a Sling Distribution Agent configured with an endpoint pointing to a servlet, which is responsible for posting the distributed data to a middleware service. For now, as part of a proof of concept (POC), we are saving the files locally on our machine. Once the middleware setup is complete, the data will be sent there accordingly.

At present, when using the out-of-the-box (OOTB) FileVault Package Exporter, the data is exported in a ZIP format, similar to the packages created via the AEM Package Manager.

However, our requirement is slightly different:

For instance, consider the folder /content/dam/fmdita-outputs, which contains subfolders such as child-map-1, parent-map, etc. Each of these subfolders contains JSON files. When we trigger Sling Distribution through our custom agent, we want it to export only the JSON files themselves, keeping the same folder structure, and save them directly to our local file system.
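
To make the target layout concrete, here is a minimal sketch of the path mapping we have in mind. It is plain Java with no Sling APIs, and the class name `ExportPathMapper`, the method `toLocalPath`, and the `/tmp/export` root are only illustrative assumptions, not part of our current setup.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: maps a repository path to a file under a local export root.
final class ExportPathMapper {

    /**
     * Returns the local target for a JSON file below repoRoot, preserving the
     * subfolder structure, or null if the path should not be exported.
     */
    static Path toLocalPath(Path exportRoot, String repoRoot, String repoPath) {
        // Only JSON files below the configured repository root qualify.
        if (!repoPath.startsWith(repoRoot + "/") || !repoPath.endsWith(".json")) {
            return null;
        }
        String relative = repoPath.substring(repoRoot.length() + 1);
        Path root = exportRoot.normalize();
        Path target = root.resolve(relative).normalize();
        // Reject paths that would escape the export root via "..".
        return target.startsWith(root) ? target : null;
    }

    public static void main(String[] args) {
        Path root = Paths.get("/tmp/export"); // assumed local export folder
        System.out.println(toLocalPath(root, "/content/dam/fmdita-outputs",
                "/content/dam/fmdita-outputs/child-map-1/a.json"));
    }
}
```

With this mapping, a file under child-map-1 would land in a child-map-1 folder directly below the export root, and anything that is not a JSON file below /content/dam/fmdita-outputs would be skipped.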

Currently, the default behavior creates a Vault package containing all node data, rather than exporting the original JSON files. Hence, we are exploring the possibility of implementing a custom Package Builder that can handle this logic and directly save or send the JSON files as-is.

I have attached the relevant code snippets from our setup along with the current output generated when using the FileVault Package Builder for reference.

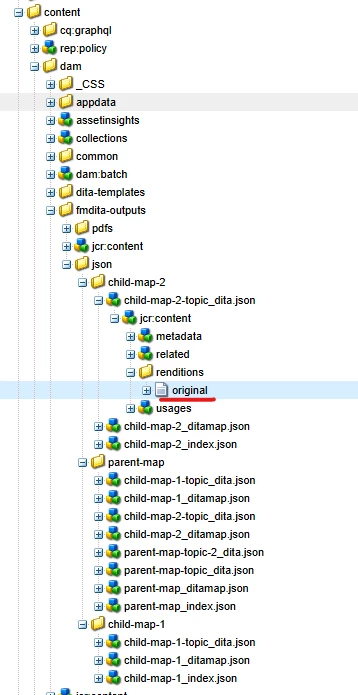

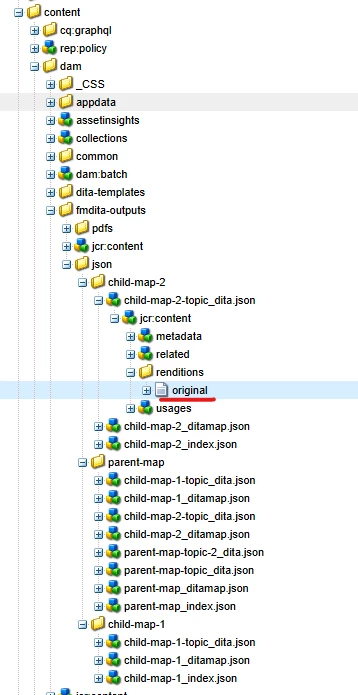

Node structure:

The /content/dam/fmdita-outputs node is the DAM folder that contains the generated output files. Each subfolder (e.g., child-map-1, parent-map, etc.) holds one or more JSON files. These are the actual output files that we want to replicate or export in their original format, not as part of a Vault package.

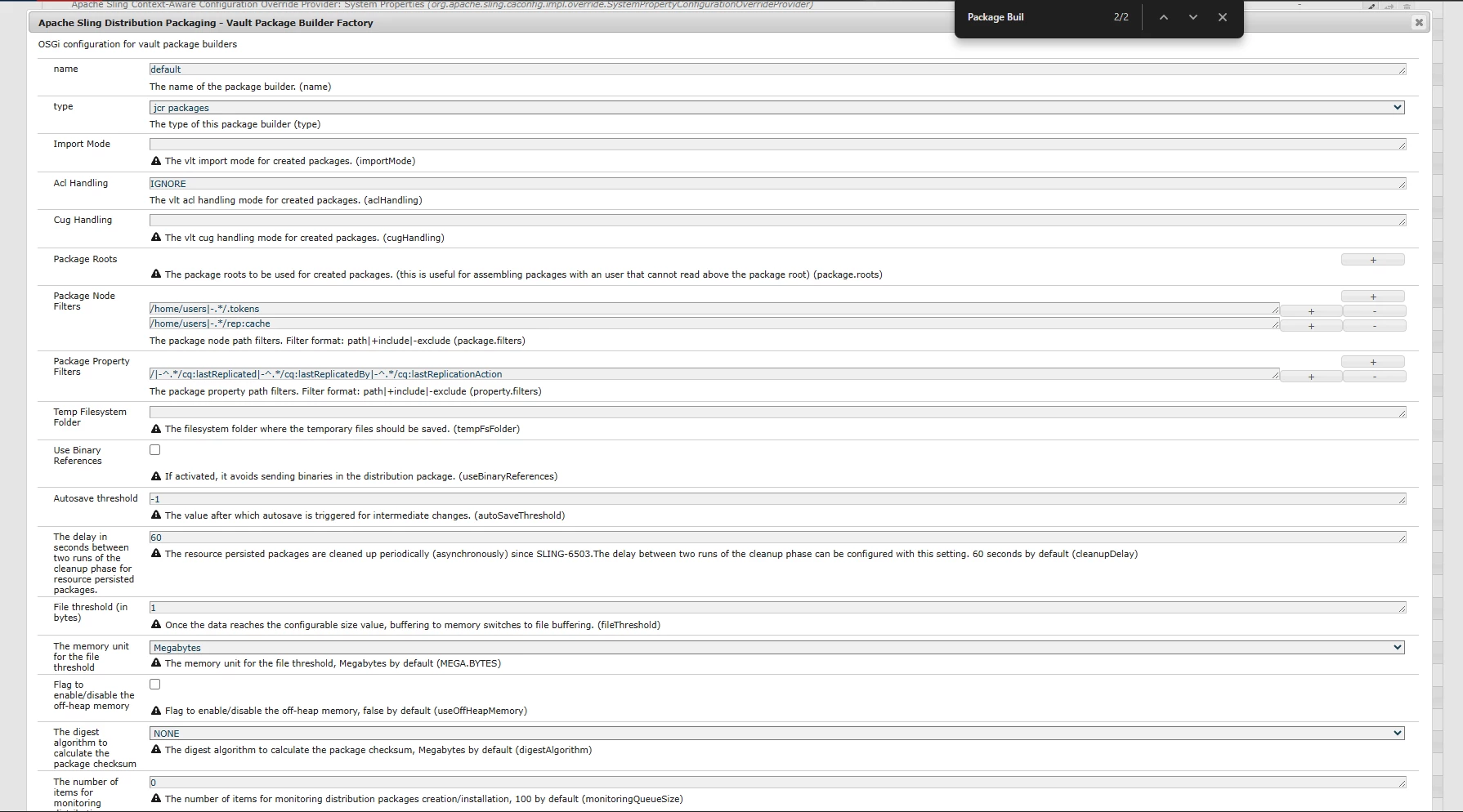

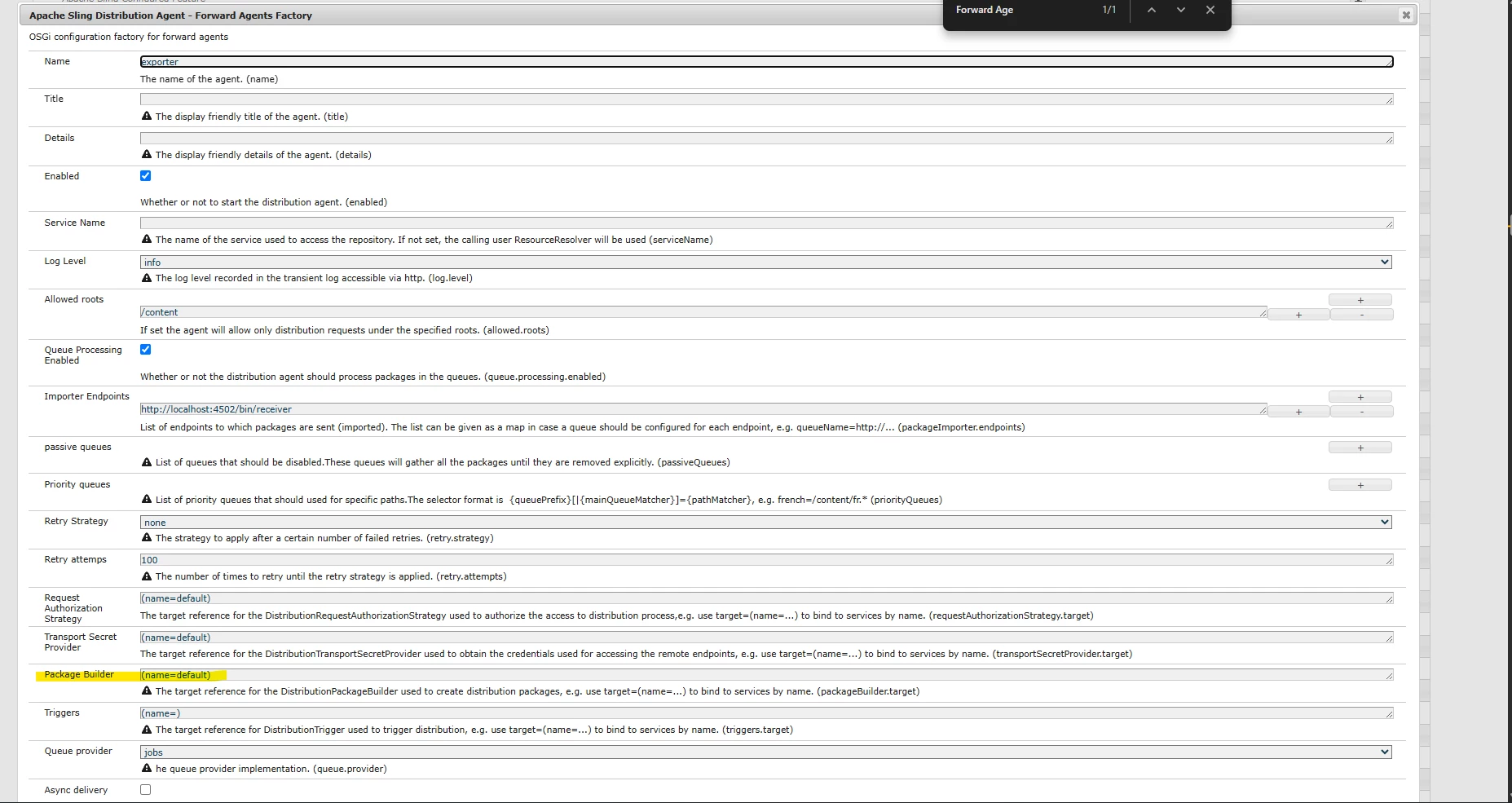

Sling Distribution Agent configuration (org.apache.sling.distribution.agent.impl.ForwardDistributionAgentFactory-json-exporter.cfg.json):

    {
      "name": "exporter",
      "enabled": true,
      "queue.processing.enabled": true,
      "allowed.roots": ["/content"],
      "packageImporter.endpoints": [
        "http://localhost:4502/bin/receiver"
      ],
      "requestAuthorizationStrategy.target": "(name=default)",
      "transportSecretProvider.target": "(name=default)"
    }

Relevant servlet (registered at the endpoint path):

    package com.adobe.aem.common.core.servlets;

    import org.apache.sling.api.SlingHttpServletRequest;
    import org.apache.sling.api.SlingHttpServletResponse;
    import org.apache.sling.api.servlets.SlingAllMethodsServlet;
    import org.osgi.service.component.annotations.Component;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import javax.servlet.Servlet;
    import javax.servlet.ServletException;
    import javax.servlet.http.HttpServletResponse;
    import java.io.*;
    import java.nio.charset.StandardCharsets;
    import java.time.LocalDateTime;
    import java.time.format.DateTimeFormatter;
    import java.util.*;
    import java.util.stream.Collectors;

    @Component(
        service = Servlet.class,
        property = {
            "sling.servlet.methods=POST",
            "sling.servlet.paths=/bin/receiver"
        }
    )
    public class VaultBinaryExtractorServlet extends SlingAllMethodsServlet {

        private static final Logger log = LoggerFactory.getLogger(VaultBinaryExtractorServlet.class);

        @Override
        protected void doPost(SlingHttpServletRequest request, SlingHttpServletResponse response)
                throws ServletException, IOException {
            log.info("VaultBinaryExtractorServlet invoked!");
            log.info("Incoming Content-Type: {}", request.getContentType());

            String timestamp = LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyyMMdd_HHmmss"));
            String tmpDir = System.getProperty("java.io.tmpdir");
            File rawFile = new File(tmpDir, "received_vault_data_" + timestamp + ".bin");
            File jsonFile = new File(tmpDir, "received_vault_data_" + timestamp + "_summary.json");

            // Persist the raw request body first so that it can be inspected later.
            log.info("Saving incoming stream to: {}", rawFile.getAbsolutePath());
            try (InputStream inputStream = request.getInputStream();
                 BufferedOutputStream outputStream = new BufferedOutputStream(new FileOutputStream(rawFile))) {
                byte[] buffer = new byte[8192];
                int bytesRead;
                while ((bytesRead = inputStream.read(buffer)) != -1) {
                    outputStream.write(buffer, 0, bytesRead);
                }
            }
            log.info("Raw binary saved successfully: {} bytes", rawFile.length());

            // Check the first bytes for the distribution package header.
            try (FileInputStream fis = new FileInputStream(rawFile)) {
                byte[] headerBytes = new byte[16];
                // readNBytes keeps reading until the buffer is full or EOF is reached
                int headerLength = fis.readNBytes(headerBytes, 0, headerBytes.length);
                String header = new String(headerBytes, 0, headerLength, StandardCharsets.US_ASCII);
                log.info("Header signature: {}", header);

                if (header.startsWith("DSTRPACKMETA")) {
                    log.info("Detected Distribution Package stream. Extracting metadata and embedded ZIP...");
                    extractReadableMetadata(rawFile, jsonFile);
                    extractInnerZip(rawFile);
                    log.info("Processing complete for file: {}", rawFile.getName());
                } else {
                    log.warn("No DSTRPACKMETA header detected. Skipping extraction.");
                }
            } catch (Exception e) {
                log.error("Error analyzing binary header", e);
            }

            // Respond to caller
            response.setStatus(HttpServletResponse.SC_OK);
            response.setContentType("application/json");
            String msg = "{\"message\":\"Binary file received and processed successfully.\"}";
            response.getOutputStream().write(msg.getBytes(StandardCharsets.UTF_8));
            response.getOutputStream().flush();
        }

        /**
         * Extracts ASCII/UTF-8 readable fragments and writes metadata summary as JSON.
         */
        private void extractReadableMetadata(File binFile, File jsonFile) {
            log.info("Starting lightweight binary-to-JSON conversion...");
            Map<String, Object> result = new LinkedHashMap<>();
            List<String> readableStrings = new ArrayList<>();

            try (InputStream in = new FileInputStream(binFile)) {
                byte[] data = in.readAllBytes();
                result.put("fileName", binFile.getName());
                result.put("fileSizeBytes", data.length);
                result.put("header", new String(data, 0, Math.min(16, data.length), StandardCharsets.US_ASCII));

                // Collect runs of at least 4 printable characters, similar to the Unix "strings" tool.
                StringBuilder sb = new StringBuilder();
                for (byte b : data) {
                    char c = (char) (b & 0xFF);
                    if (Character.isISOControl(c) && c != '\n' && c != '\r') {
                        if (sb.length() >= 4) {
                            readableStrings.add(sb.toString());
                        }
                        sb.setLength(0);
                    } else {
                        sb.append(c);
                    }
                }
                if (sb.length() >= 4) {
                    readableStrings.add(sb.toString());
                }

                List<String> probablePaths = readableStrings.stream()
                        .filter(s -> s.contains("/content/") || s.contains(".json") || s.contains("jcr_root"))
                        .collect(Collectors.toList());
                result.put("readableStringsFound", readableStrings.size());
                result.put("probablePaths", probablePaths);

                // Write with an explicit charset instead of the platform default.
                try (Writer writer = new OutputStreamWriter(new FileOutputStream(jsonFile), StandardCharsets.UTF_8)) {
                    writer.write(toPrettyJson(result));
                }
                log.info("Readable metadata extracted successfully: {}", jsonFile.getAbsolutePath());
            } catch (Exception e) {
                log.error("Error extracting readable metadata", e);
            }
        }

        /**
         * Extracts the inner Vault ZIP stream from DSTRPACKMETA binary and writes it to disk.
         */
        private void extractInnerZip(File binFile) {
            log.info("Attempting to extract embedded ZIP from binary...");
            try (InputStream in = new FileInputStream(binFile)) {
                byte[] data = in.readAllBytes();

                // Look for the 4-byte ZIP local file header "PK\003\004"; matching only "PK"
                // could trigger on ordinary text inside the metadata section.
                int zipStart = -1;
                for (int i = 0; i < data.length - 3; i++) {
                    if (data[i] == 'P' && data[i + 1] == 'K' && data[i + 2] == 0x03 && data[i + 3] == 0x04) {
                        zipStart = i;
                        break;
                    }
                }
                if (zipStart == -1) {
                    log.warn("No ZIP signature (PK) found inside binary file!");
                    return;
                }

                byte[] zipBytes = Arrays.copyOfRange(data, zipStart, data.length);
                File extractedZip = new File(System.getProperty("java.io.tmpdir"),
                        binFile.getName().replace(".bin", "_inner.zip"));
                try (FileOutputStream out = new FileOutputStream(extractedZip)) {
                    out.write(zipBytes);
                }
                log.info("Inner ZIP extracted successfully: {}", extractedZip.getAbsolutePath());
                log.info("You can now unzip this to see jcr_root/... JSON and XML files.");
            } catch (Exception e) {
                log.error("Error extracting inner ZIP from DSTRPACKMETA", e);
            }
        }

        /**
         * Simple pretty JSON formatter (no external libs).
         */
        private String toPrettyJson(Map<String, Object> map) {
            StringBuilder sb = new StringBuilder("{\n");
            for (Map.Entry<String, Object> entry : map.entrySet()) {
                sb.append(" \"").append(entry.getKey()).append("\": ");
                Object value = entry.getValue();
                if (value instanceof String) {
                    sb.append("\"").append(escapeJson(value.toString())).append("\"");
                } else if (value instanceof Collection) {
                    sb.append("[\n");
                    for (Object item : (Collection<?>) value) {
                        sb.append(" \"").append(escapeJson(item.toString())).append("\",\n");
                    }
                    // Drop the trailing ",\n" left by the last element, if any.
                    if (sb.charAt(sb.length() - 2) == ',') sb.setLength(sb.length() - 2);
                    sb.append("\n ]");
                } else {
                    sb.append(value);
                }
                sb.append(",\n");
            }
            if (sb.charAt(sb.length() - 2) == ',') sb.setLength(sb.length() - 2);
            sb.append("\n}");
            return sb.toString();
        }

        /**
         * Escapes backslashes, quotes and line breaks so the summary stays valid JSON.
         */
        private static String escapeJson(String s) {
            return s.replace("\\", "\\\\")
                    .replace("\"", "\\\"")
                    .replace("\n", "\\n")
                    .replace("\r", "\\r");
        }
    }

Output (with FileVault Package Builder): https://drive.google.com/file/d/1eILYNIP15cQE44cRw_uIkFSazWn_qpw8/view?usp=sharing

If we can keep using the FileVault Package Builder and still achieve the desired behavior (i.e., post only the JSON files) through configuration changes or small tweaks to the existing implementation, we are open to that approach as well. Please let me know whether this is possible.
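
One fallback we are considering on the receiving side is to keep the Vault package and pull only the JSON binaries out of the inner ZIP. The sketch below is a rough idea rather than a working solution. It assumes that each JSON file is a dam:Asset whose binary FileVault serializes at `<asset>/_jcr_content/renditions/original` below `jcr_root/` (we still need to confirm this against our actual package), and the class name `VaultJsonExtractor` is hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;

// Hypothetical helper: copies only the JSON binaries out of a Vault package ZIP.
final class VaultJsonExtractor {

    private static final String JCR_ROOT = "jcr_root/";
    // Assumption: the binary of a dam:Asset is serialized under this suffix.
    private static final String ORIGINAL = "/_jcr_content/renditions/original";

    /** Maps a ZIP entry name to the repository path of a JSON file, or null to skip it. */
    static String toRepoPath(String entryName) {
        if (!entryName.startsWith(JCR_ROOT)) {
            return null; // META-INF/vault/... and other package metadata
        }
        String path = "/" + entryName.substring(JCR_ROOT.length());
        if (path.endsWith(ORIGINAL)) {
            path = path.substring(0, path.length() - ORIGINAL.length());
        }
        return path.endsWith(".json") ? path : null;
    }

    /** Writes every matching entry below targetDir, keeping the repository folder structure. */
    static List<Path> extract(InputStream vaultZip, Path targetDir) throws IOException {
        List<Path> written = new ArrayList<>();
        Path root = targetDir.toAbsolutePath().normalize();
        try (ZipInputStream zip = new ZipInputStream(vaultZip)) {
            ZipEntry entry;
            while ((entry = zip.getNextEntry()) != null) {
                String repoPath = entry.isDirectory() ? null : toRepoPath(entry.getName());
                if (repoPath == null) {
                    continue;
                }
                Path target = root.resolve(repoPath.substring(1)).normalize();
                if (!target.startsWith(root)) {
                    continue; // ignore entries that would escape the target folder
                }
                Files.createDirectories(target.getParent());
                // Files.copy reads up to the end of the current entry and leaves the stream open.
                Files.copy(zip, target, StandardCopyOption.REPLACE_EXISTING);
                written.add(target);
            }
        }
        return written;
    }
}
```

In our servlet this would run on the ZIP produced by `extractInnerZip`, but it still means building and transferring the full Vault package first, which is why we would prefer a way to export the JSON files directly.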