Authors: Meenakshi Ganesh, Sunish Verma, and Jody Arthur

The new Segmentation Automation Workflow Tool makes it easier than ever for Adobe Experience Platform customers to export customized data sets and segments into their own consuming systems, allowing them to act on their data faster and more effectively.

This blog post describes a single implementation or customization on Adobe Experience Platform. Not all aspects are guaranteed to be generally available. If you need professional guidance on how to proceed, please reach out to Adobe Consulting Services.

Adobe Experience Platform’s Real-Time Customer Data Platform offers many native integrations that let customers extract their data for use in third-party applications. Now, with the new Segmentation Automation Workflow Tool developed by Adobe Consulting Services, customers can also customize the data sets and segments they export from Adobe Experience Platform into their own consuming systems, allowing them to operationalize their data faster than ever. With the tool, Adobe Experience Platform customers can use the platform’s APIs to set up segmentation jobs more easily and export the results to the output locations of their choice, giving them greater flexibility in which applications they use to work with their data.

Actioning data faster with new automation

Segmentation Automation Workflow Tool is built on a scalable, microservices-based architecture. This structure makes it simple to customize the final output data to each customer’s needs without adding significant time to delivery.

Designed to run workflows in parallel, Segmentation Automation Workflow Tool lets multiple customers see the results of their workflows quickly. The same structure allows Adobe Experience Platform to better serve all of its customers by running many customer jobs concurrently, at high scale and with high security.

The tool can handle delta exports, in which customers choose not only the specific data they need but also a timeframe for the data set. Segmentation can be set up as a one-time job or a recurring job, and multiple jobs can run simultaneously and deliver their results to any number of customer-defined destinations, such as an SFTP server, Adobe Campaign, or any other application that can be preconfigured to accept the data.
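
The post does not say how the timeframe for a delta export is computed. A minimal sketch, assuming the tool keeps a watermark (the end time of the last successful export) and falls back to a customer-chosen lookback period on a first or one-time run; `delta_window`, `last_success`, and `lookback` are hypothetical names:

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple


def delta_window(
    now: datetime,
    last_success: Optional[datetime],
    lookback: timedelta,
) -> Tuple[datetime, datetime]:
    """Return the [start, end) window of records to include in a delta export.

    Hypothetical logic: a first (or one-time) run has no watermark, so it uses
    a fixed lookback period. A recurring run resumes from the end of the last
    successful export, so records are neither skipped nor exported twice.
    """
    end = now
    start = last_success if last_success is not None else now - lookback
    if start >= end:
        raise ValueError("delta window is empty: last_success is not before now")
    return start, end


now = datetime(2020, 4, 23, 12, 0, tzinfo=timezone.utc)
print(delta_window(now, None, timedelta(days=7)))  # first run: the last 7 days
```

Using a half-open window means consecutive recurring runs tile the timeline without overlap.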

A solution built on big data technologies

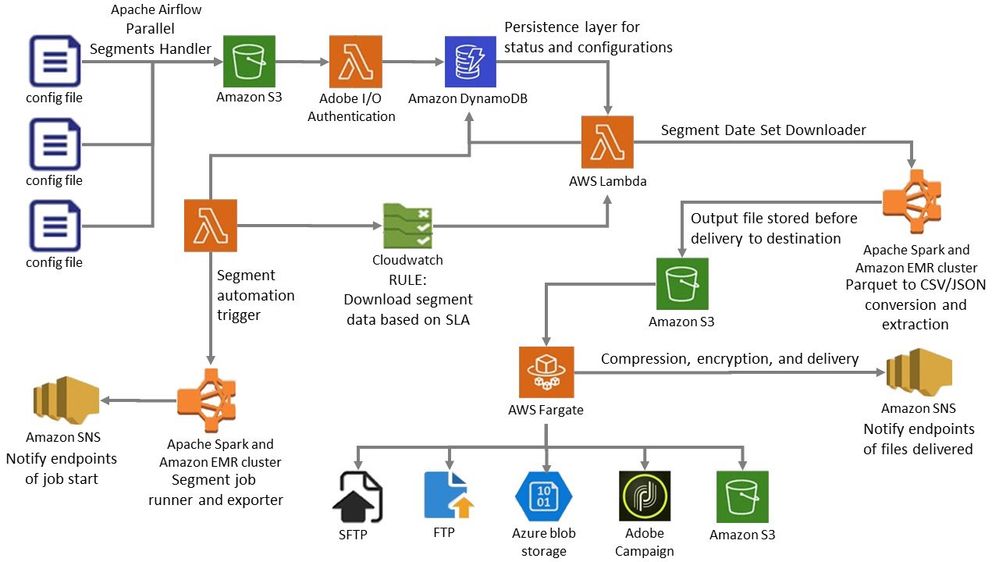

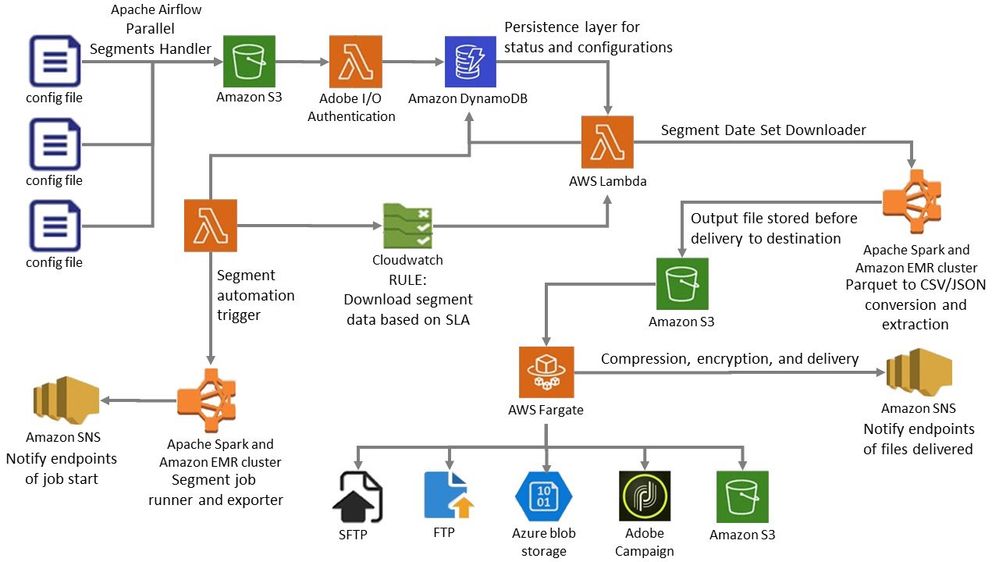

The architecture of Segmentation Automation Workflow Tool is built on Amazon Web Services (AWS) (Figure 1) with the following big data technologies:

- Adobe I/O — Adobe I/O provides the necessary authentication and generates the access tokens customers need to interact securely with Adobe Experience Platform’s APIs.

- Amazon Elastic Container Service (ECS) and Fargate — Amazon ECS and Fargate containerize all the tasks the tool runs, making them cloud-agnostic and giving customers the flexibility to use their preferred cloud services.

- AWS Lambda — Lambda provides functions as a service, allowing the tool to execute the jobs within a workflow in parallel.

- Apache Airflow — Apache Airflow handles parallel orchestration, allowing the tool to serve multiple customers at once as separate workflows whose jobs run simultaneously without interfering with one another.

- Amazon DynamoDB — DynamoDB serves as an intermediate data store for details about multiple customers and helps orchestrate jobs in parallel.

- Amazon CloudWatch — CloudWatch handles log monitoring for status checks of the intermediate applications and is used to schedule the cron jobs that automate the services within the tool.

- Amazon Simple Notification Service (SNS) — SNS provides the monitoring and notification services in the tool.

- Apache Spark and Amazon Elastic MapReduce (EMR) — Spark is used to transform the Parquet files returned by the tool into the format specified by the customer, and EMR provides the clusters needed to run the Spark jobs.

- Amazon S3 — S3 serves as the object store for the files and metadata generated with Segmentation Automation Workflow Tool.

Figure 1: Architecture of Segmentation Automation Workflow Tool on Amazon Web Services.
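
In the tool itself, Airflow, Lambda, and ECS/Fargate provide the parallelism described above. The sketch below is only a stand-in that uses Python’s `concurrent.futures` to illustrate the key property: each customer’s job runs concurrently, and a failure in one job is recorded for that customer without stopping the others. `run_customer_jobs` and the sample job are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_customer_jobs(
    customer_ids: List[str],
    job: Callable[[str], str],
    max_workers: int = 4,
) -> Dict[str, str]:
    """Run one job per customer concurrently and collect per-customer outcomes.

    An exception raised by one customer's job is recorded as that customer's
    failure instead of aborting the jobs of other customers.
    """
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {cid: pool.submit(job, cid) for cid in customer_ids}
        for cid, future in futures.items():
            try:
                results[cid] = future.result()
            except Exception as exc:  # isolate failures per customer
                results[cid] = f"failed: {exc}"
    return results


def example_export(customer_id: str) -> str:
    # Hypothetical job: one customer's destination is unreachable.
    if customer_id == "cust-b":
        raise RuntimeError("destination unreachable")
    return f"exported {customer_id}"


print(run_customer_jobs(["cust-a", "cust-b", "cust-c"], example_export))
```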

Looking under the hood at how Segmentation Automation Workflow Tool works

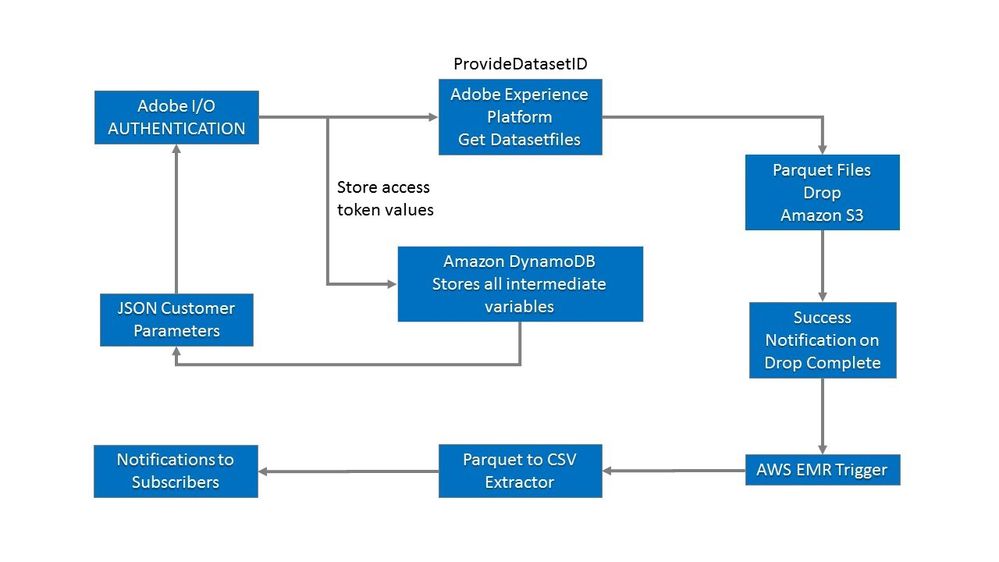

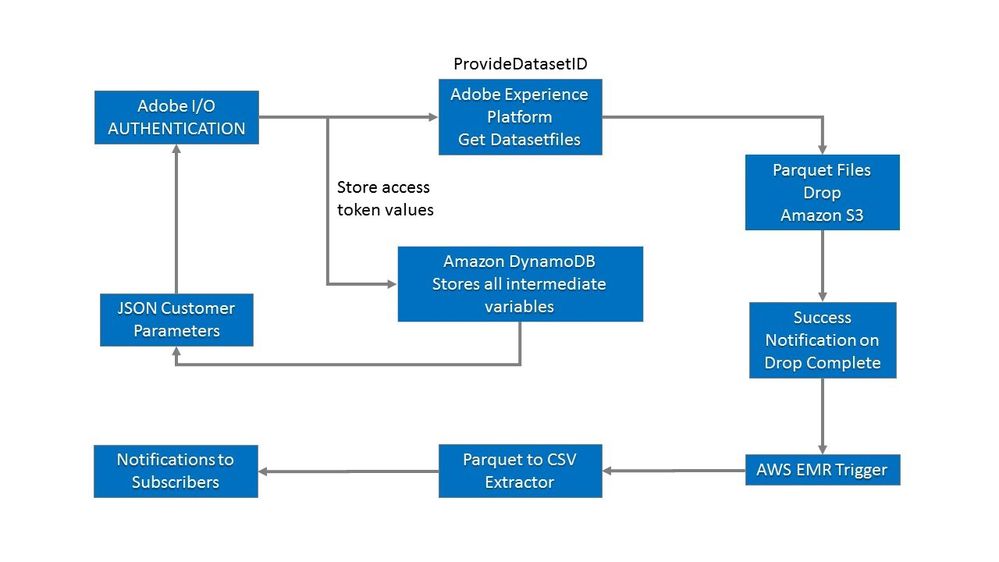

Segmentation Automation Workflow Tool works behind the scenes within Adobe Experience Platform. To set up a workflow, the customer first logs in to Adobe Experience Platform to authenticate through Adobe I/O. The customer then creates a config file defining the parameters for the integration and drops it into the Amazon S3 bucket provided with the account. From there, the file follows the process shown in Figure 2.

Figure 2: Technical diagram showing key features of the tool and how it works.
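
The post does not specify how a config file dropped into S3 starts the workflow. One common AWS pattern, assumed here, is an S3 event notification that invokes a Lambda function. The sketch parses the standard S3 notification payload (`Records[].s3.bucket.name` and `Records[].s3.object.key`); the `.json` naming convention, the bucket name, and the `handler` body are hypothetical, and fetching the object and triggering the orchestration layer are deliberately left out.

```python
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus


def config_objects_from_s3_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs for config files from an S3 event notification."""
    objects: List[Tuple[str, str]] = []
    for record in event.get("Records", []):
        if record.get("eventSource") != "aws:s3":
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.endswith(".json"):  # hypothetical convention: configs are JSON files
            objects.append((bucket, key))
    return objects


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Hypothetical Lambda entry point that collects newly dropped config files.

    A real deployment would fetch each object and start the workflow here.
    """
    return {"configs": config_objects_from_s3_event(event)}
```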

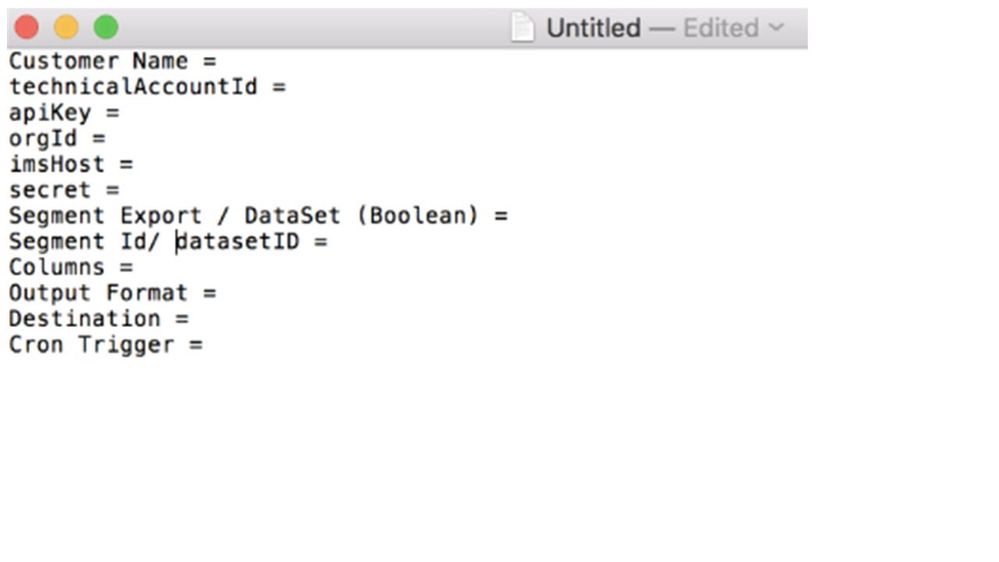

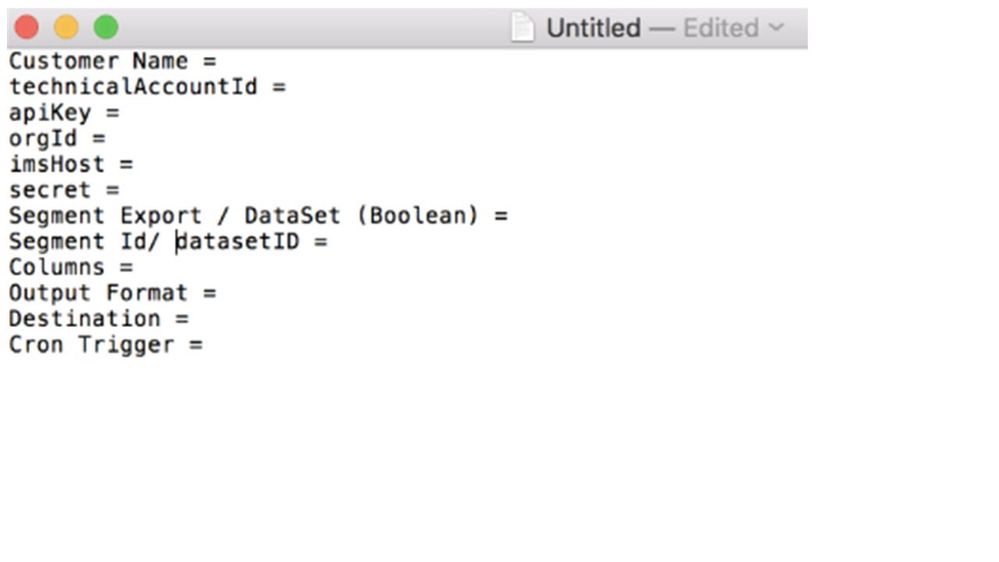

In the config file (Figure 3), the customer can choose whether to export a data set or segments, define the output format and location, and specify the Cron Trigger, which tells the tool how often to run the workflow. The tool can also be configured to re-download segment data from past batches, which is useful when a customer has lost a batch of data and needs to retrieve it again.

Figure 3: Segmentation Automation Workflow Tool setup.
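
The actual config format appears only in the Figure 3 screenshot, so the field names below are hypothetical. The sketch shows a config covering the options described above (export type, output format, destination, Cron Trigger, and past batches to re-download) along with a basic validation pass. Here, a missing `cron_trigger` is assumed to mean a one-time job.

```python
import json
from typing import Any, Dict, List

# Hypothetical config; field names are illustrative, not the tool's real schema.
EXAMPLE_CONFIG = """
{
  "export_type": "segments",
  "segment_ids": ["seg-001", "seg-002"],
  "output_format": "csv",
  "destination": {"type": "sftp", "path": "/inbound/aep"},
  "cron_trigger": "0 */4 * * *",
  "backfill_batch_ids": []
}
"""

ALLOWED_EXPORT_TYPES = {"dataset", "segments"}
ALLOWED_FORMATS = {"parquet", "csv", "json"}


def validate_config(config: Dict[str, Any]) -> List[str]:
    """Return a list of validation errors (empty if the config looks usable)."""
    errors: List[str] = []
    if config.get("export_type") not in ALLOWED_EXPORT_TYPES:
        errors.append("export_type must be 'dataset' or 'segments'")
    if config.get("output_format") not in ALLOWED_FORMATS:
        errors.append(f"output_format must be one of {sorted(ALLOWED_FORMATS)}")
    destination = config.get("destination")
    if not isinstance(destination, dict) or "type" not in destination:
        errors.append("destination.type is required")
    cron = config.get("cron_trigger")  # None means a one-time job in this sketch
    if cron is not None and (not isinstance(cron, str) or len(cron.split()) != 5):
        errors.append("cron_trigger must be a five-field cron expression")
    if not isinstance(config.get("backfill_batch_ids", []), list):
        errors.append("backfill_batch_ids must be a list of batch IDs")
    return errors


print(validate_config(json.loads(EXAMPLE_CONFIG)))  # []
```

The example cron expression `0 */4 * * *` fires at minute 0 of every fourth hour, which is six runs per day.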

Segmentation Automation Workflow Tool also provides file encryption and compression as additional layers. Data is encrypted for delivery, and compression allows even the largest files to be exported seamlessly.
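
The post does not name the compression format or the encryption scheme. A minimal sketch of the compression step, assuming gzip, streams the export in fixed-size chunks so that large files never have to fit in memory. Encryption is intentionally omitted here rather than guessed; `compress_stream` is a hypothetical helper.

```python
import gzip
import io
import shutil
from typing import BinaryIO


def compress_stream(src: BinaryIO, dst: BinaryIO, chunk_size: int = 1 << 20) -> None:
    """Gzip-compress src into dst, copying chunk_size bytes at a time."""
    # GzipFile writes the gzip header and trailer around the compressed data;
    # closing it does not close dst, so the caller keeps control of dst.
    with gzip.GzipFile(fileobj=dst, mode="wb") as gz:
        shutil.copyfileobj(src, gz, chunk_size)


data = b"profile_id,segment_id\n" * 10000
compressed = io.BytesIO()
compress_stream(io.BytesIO(data), compressed)
print(len(data), len(compressed.getvalue()))
```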

The segment columns can be customized to meet whatever requirements customers have for importing the results into their destination systems (e.g., Adobe Campaign, Oracle Responsys, and Camelot). Destinations are expandable to include Amazon S3, SFTP, FTP, Azure Blob, and Adobe Campaign. Files are exported in Parquet format, which can easily be converted to CSV, JSON, and other human-readable formats, letting customers work with the fields as they need.
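
A minimal sketch of the column customization, assuming the exported Parquet rows have already been decoded into Python dictionaries (for example with a Parquet library such as pyarrow, not shown). A hypothetical `column_map` selects the fields a destination needs and renames them to that destination’s headers; all field and header names are illustrative.

```python
import csv
import io
from typing import Dict, Iterable, Mapping


def to_destination_csv(rows: Iterable[Mapping[str, object]], column_map: Dict[str, str]) -> str:
    """Project rows onto the columns a destination expects and render them as CSV.

    column_map maps exported field names to destination header names, in output
    order. Fields not listed are dropped; missing fields become empty cells.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(list(column_map.values()))
    for row in rows:
        writer.writerow([row.get(field, "") for field in column_map])
    return out.getvalue()


rows = [
    {"email": "a@example.com", "segment_id": "seg-001", "internal_flag": 1},
    {"segment_id": "seg-002"},
]
print(to_destination_csv(rows, {"email": "EMAIL", "segment_id": "SEGMENT_ID"}))
```

Keeping the mapping as data rather than code is what would let each destination get its own column layout from the same export.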

Segmentation Automation Workflow Tool offers enhanced security features, too. It is sandbox-aware and supports Protection Aegis for Live Migration (PALM), allowing customers with multiple sandbox instances to work with their segments securely within each sandbox. This is possible because Adobe Experience Platform itself uses a sandbox model in which a given customer can work with multiple markets through different API calls. As a result, Segmentation Automation Workflow Tool can communicate with the customer’s unique IMS Org ID to pull data from multiple markets that belong to the same IMS Org but live in different sandboxes.
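
Adobe Experience Platform API requests carry the IMS Org and the target sandbox as request headers (`x-gw-ims-org-id` and `x-sandbox-name`, alongside `Authorization` and `x-api-key`), which is what makes sandbox-scoped calls possible. The sketch builds one header set per market sandbox under a single IMS Org. The function names, sandbox names, and credential values are hypothetical, and obtaining the access token through Adobe I/O is not shown.

```python
from typing import Dict, List


def platform_headers(access_token: str, api_key: str, ims_org_id: str, sandbox: str) -> Dict[str, str]:
    """Headers for an Adobe Experience Platform API call scoped to one sandbox."""
    return {
        "Authorization": f"Bearer {access_token}",
        "x-api-key": api_key,
        "x-gw-ims-org-id": ims_org_id,
        "x-sandbox-name": sandbox,
    }


def headers_per_market(
    access_token: str, api_key: str, ims_org_id: str, sandboxes: List[str]
) -> Dict[str, Dict[str, str]]:
    """One header set per market sandbox, all under the same IMS Org."""
    return {s: platform_headers(access_token, api_key, ims_org_id, s) for s in sandboxes}


headers = headers_per_market("<token>", "<api-key>", "<org-id>@AdobeOrg", ["prod-us", "prod-de"])
print(headers["prod-de"]["x-sandbox-name"])  # prod-de
```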

The tool sends email notifications to let customers know when a job begins and when the file(s) are delivered to their destinations. These notifications can be configured for both batch downloads and segment exports. Customers can subscribe to them, which makes it easy to schedule any internal workflows that rely on the data. The notifications also tell Adobe Consulting Services engineers if an error occurs and where the job stands at any point in the process, so our platform engineers can resolve issues for customers proactively.
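
In the tool, notifications go out through Amazon SNS. The sketch below covers only the message content, assuming three lifecycle events (started, delivered, failed) and one recipient list that includes both customer subscribers and engineers. `JobEvent`, `Notification`, `build_notification`, and the `failed_stage` field are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class JobEvent(Enum):
    STARTED = "started"
    DELIVERED = "delivered"
    FAILED = "failed"


@dataclass
class Notification:
    subject: str
    body: str
    recipients: List[str]


def build_notification(
    job_id: str,
    event: JobEvent,
    destination: str,
    subscribers: List[str],
    engineers: List[str],
    failed_stage: Optional[str] = None,
) -> Notification:
    """Build the message for one job lifecycle event."""
    if event is JobEvent.FAILED and not failed_stage:
        raise ValueError("failure notifications must name the failed stage")
    subject = f"[{job_id}] export {event.value}"
    body = f"Job {job_id} {event.value}; destination: {destination}."
    if failed_stage:
        body += f" Failed at stage: {failed_stage}."
    # Customers and engineers both receive every status update.
    return Notification(subject, body, list(subscribers) + list(engineers))


note = build_notification("job-42", JobEvent.DELIVERED, "sftp:/inbound/aep", ["team@example.com"], ["ops@example.com"])
print(note.subject)  # [job-42] export delivered
```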

Benchmarking a success

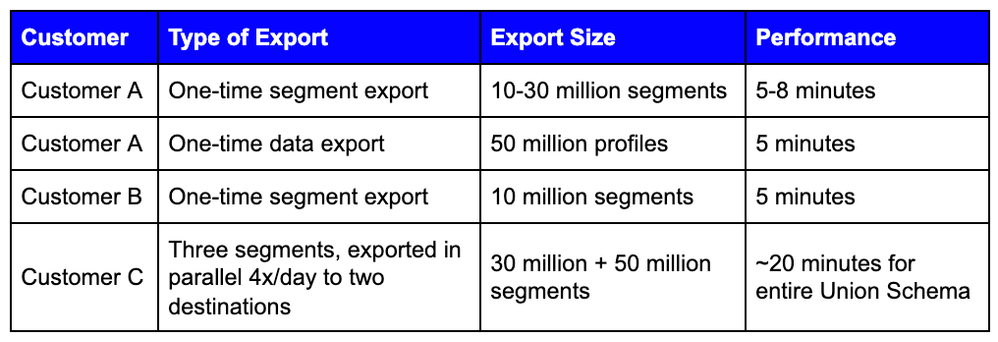

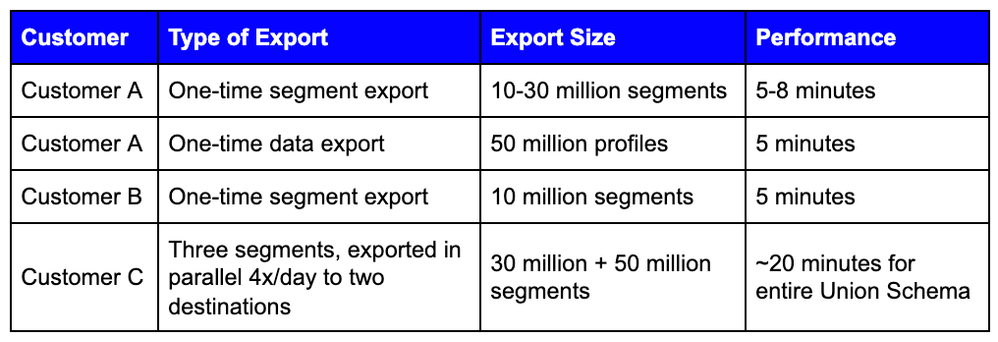

Early results indicate that Segmentation Automation Workflow Tool works well even for customers with the most demanding workloads. Table 1 shows our initial results with three of our enterprise customers and illustrates the tool’s ability to handle large workloads at high scale.

Table 1: Segmentation Automation Workflow Tool performance resulting from our proof of concept for Adobe Experience Platform customers.

Customer A was our first proof of concept for Segmentation Automation Workflow Tool, attempted in the early stages of its development. The customer wanted to export a large number of segments from Adobe Experience Platform to Adobe Campaign.

The initial export took longer than what is shown in Table 1. Based on those results, we changed the Spark job to improve performance: instead of reading the files sequentially, we loaded the entire data set into memory and read it at once, which reduced the time required to run the workflow to just 5–8 minutes depending on the size of the export. When the customer later requested an export of its data sets, we were able to export a file containing 50 million profiles in just five minutes.

For Customer B, we performed a one-time segment export of 10 million records, which we completed in five minutes, illustrating the repeatability of our results.

Customer C offered us the opportunity to illustrate the power of the tool for ongoing exports. We helped the company set up three different segments to download simultaneously six times per day. In total, the export consisted of 80 million segments, which took approximately 20 minutes for the entire union schema.
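
To put the figures quoted in these three examples on a common scale, the sketch converts each one into an average rate. These are simple averages derived from the counts and durations stated in the text (Customer C’s 80 million is the figure reported for that export), not additional measurements, and `throughput_per_second` is a hypothetical helper.

```python
def throughput_per_second(records: int, minutes: float) -> float:
    """Average records processed per second for a run of the given duration."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return records / (minutes * 60)


# Counts and approximate durations as quoted in the text above.
runs = {
    "Customer A (data set export)": (50_000_000, 5),
    "Customer B (one-time segment export)": (10_000_000, 5),
    "Customer C (recurring segment export)": (80_000_000, 20),
}
for name, (records, minutes) in runs.items():
    print(f"{name}: ~{throughput_per_second(records, minutes):,.0f} records/s")
# Customer A (data set export): ~166,667 records/s
# Customer B (one-time segment export): ~33,333 records/s
# Customer C (recurring segment export): ~66,667 records/s
```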

Empowering Adobe Experience Platform customers

Adobe Experience Platform is an open-ended solution that allows customers to ingest and extract data in many ways. Segmentation Automation Workflow Tool was designed to give our customers easy access to their data in the platform and the flexibility to extract it to any destination they choose, so they can use it more effectively to meet their unique business needs. The tool provides several important capabilities that extend how our customers can leverage their data:

- Segmentation Export — Segmentation Automation Workflow Tool provides the ability to extract the segments as a file and select the specific attributes needed for the customer’s use case. For example, a customer can build some segments in Adobe Experience Platform and then export that data at scale and share it as a file feed with custom attributes. We can leverage the built-in capabilities of the tool to meet this need for customers working with destinations within or directly integrated with Adobe Experience Platform as well as provide out-of-the-box integrations for customers wanting to export their data to other destinations.

- Reporting Needs — With the ability to customize the fields to be exported, Segmentation Automation Workflow Tool allows for easy access and export of data to meet a wide variety of reporting needs.

- Scalability — Segmentation Automation Workflow Tool is scalable based on the volume of data the customer needs. This scalability significantly expands the number of use cases the tool can help our customers solve.

Segmentation Automation Workflow Tool is one example of how Adobe Consulting Services engineers are working to help our customers unlock the full potential of their data by making it easier to extract for a wide variety of use cases.

Follow the Adobe Experience Platform Community Blog for more developer stories and resources, and check out Adobe Developers on Twitter for the latest news and developer products. Sign up here for future Adobe Experience Platform Meetups.

Resources

- Adobe Consulting Services — https://www.adobe.com/experience-cloud/consulting-services.html

- Adobe Experience Platform — https://www.adobe.com/experience-platform.html

- Adobe Experience Platform API — https://www.adobe.io/apis/experienceplatform.html

- Adobe Campaign — https://www.adobe.com/marketing/campaign.html

- Amazon Web Services (AWS) — https://aws.amazon.com/

- Adobe I/O — https://www.adobe.io/apis/experienceplatform/home/tutorials/alltutorials.html

- AWS Lambda — https://aws.amazon.com/lambda/

- Apache Airflow — https://airflow.apache.org/

- Amazon Elastic Container Service (ECS) — https://aws.amazon.com/ecs/

- Fargate — https://aws.amazon.com/fargate/

- Amazon DynamoDB — https://aws.amazon.com/dynamodb/

- Amazon CloudWatch — https://aws.amazon.com/cloudwatch/

- Amazon Simple Notification Service (SNS) — https://aws.amazon.com/sns/

- Amazon Elastic MapReduce (EMR) — https://aws.amazon.com/emr/

- Apache Spark — https://spark.apache.org/

- Amazon S3 — https://aws.amazon.com/s3/

- Oracle Responsys — https://www.oracle.com/marketingcloud/products/cross-channel-orchestration/

- Azure Blob — https://azure.microsoft.com/en-us/services/storage/blobs/

- Apache Parquet — https://parquet.apache.org/

- Protection Aegis for Live Migration (PALM) — https://books.google.com/books?id=v35TDwAAQBAJ&pg=PA278&lpg=PA278&dq=Protection+Aegis+for+Live+Migra...

Originally published: Apr 23, 2020
