What is the maximum file size for the Data loading activity, and how can I increase the time limit for uploading data to the Campaign server?

Hi Team,

We are uploading 4.5 GB of data to the Campaign server using the Data loading activity. We also need to process the file and insert the data into a schema.

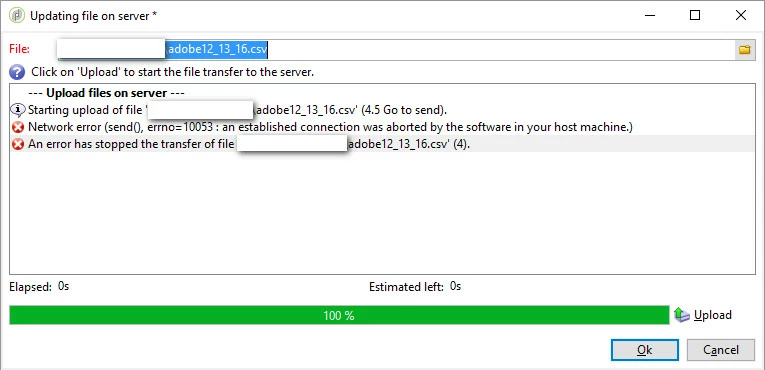

We are getting errors while uploading the data to the server.

We also tried transferring the file to the Campaign server over SSH and processing it with a File collector activity, but this approach also takes too long when the workflow runs.

Is there an option to increase the time limit in the Data loading activity?

Is there a maximum file size restriction on the Campaign server?

Please let us know the best practices for processing bulk files.