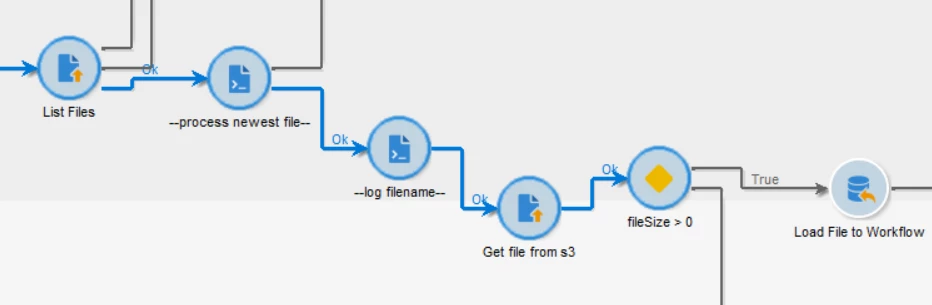

Need to download only one file from S3

I have a process where I need to retrieve the most recent file from an S3 bucket and use it to target specific customers in a recurring delivery. However, files are written to this bucket multiple times a day and are kept for 14 days. Campaign only has read access to this bucket, so I can't archive or delete the files.

When I run the workflow, it loads not only the newest file but the older ones as well, which causes multiple deliveries to be triggered.

The file transfer is set to download and the server folder is configured like this:

How can I load only one file, or how can I filter out the other files in the workflow, so that multiple files don't trigger multiple deliveries?
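
To make the goal concrete, here is a rough sketch of the selection I'm after, written in plain Python outside of Campaign. It is not Campaign workflow code. The `pick_newest` helper and the file names are hypothetical, and the listing only assumes each entry carries a `Key` and a `LastModified` timestamp, which is the shape an S3 object listing normally has.

```python
from datetime import datetime, timezone


def pick_newest(objects):
    """Return the entry with the latest LastModified, or None if the list is empty."""
    if not objects:
        return None
    return max(objects, key=lambda obj: obj["LastModified"])


# Hypothetical listing: three files written to the bucket on the same day.
listing = [
    {"Key": "exports/customers_0600.csv",
     "LastModified": datetime(2024, 1, 10, 6, 0, tzinfo=timezone.utc)},
    {"Key": "exports/customers_1800.csv",
     "LastModified": datetime(2024, 1, 10, 18, 0, tzinfo=timezone.utc)},
    {"Key": "exports/customers_1200.csv",
     "LastModified": datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)},
]

# Only this one file should go on to the data loading and the delivery.
newest = pick_newest(listing)
print(newest["Key"])
```

In other words, only the single newest file should continue to the delivery, and the older files should be ignored without being moved or deleted, since the bucket is read-only for Campaign.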