Abstract

Introduction

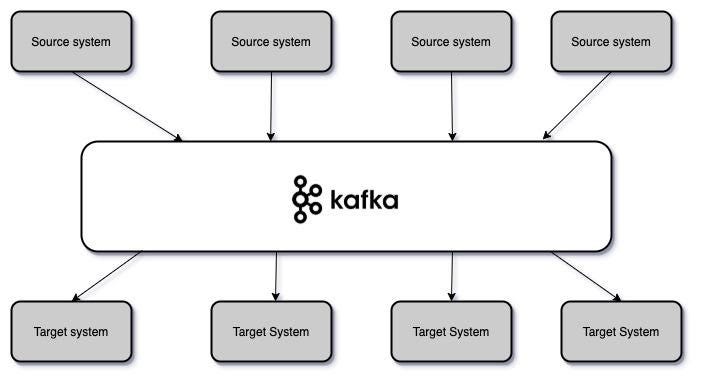

Apache Kafka is an open-source distributed event streaming platform that many companies around the world use to build high-performance data pipelines, streaming analytics, and data integration for mission-critical applications.

Companies usually start with a simple point-to-point integration, where a source system sends data directly to a target system.

After a while, the requirements change, and companies need to integrate more sources and more targets, which makes the architecture increasingly complicated.

The above architecture has the following problems:

1. If you have 4 source systems and 6 target systems, you need to write 4 × 6 = 24 integrations.

2. Each integration comes with difficulties around protocols (TCP, HTTP, REST, FTP, JDBC, etc.), data formats (binary, CSV, JSON, etc.), and data schemas (how the data is shaped and how it may change).
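The arithmetic behind the first problem can be sketched in a few lines of Python. This is only an illustration: the system names and the helper `point_to_point_integrations` are hypothetical and do not come from Kafka or any other library. It assumes every source must reach every target.

```python
from itertools import product


def point_to_point_integrations(sources, targets):
    """Return every (source, target) pair that needs its own integration."""
    return list(product(sources, targets))


# Hypothetical system names, used only for illustration.
sources = [f"source-{i}" for i in range(1, 5)]  # 4 source systems
targets = [f"target-{i}" for i in range(1, 7)]  # 6 target systems

pairs = point_to_point_integrations(sources, targets)
print(len(pairs))  # 24
```

Under the same assumption, adding a fifth source system would raise the count from 24 to 30, because the new source needs one integration per target.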

Read Full Blog

Q&A

Please use this thread to ask related questions.

Kautuk Sahni