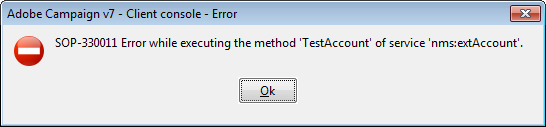

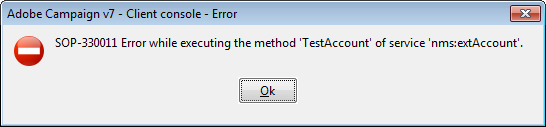

We are trying to fetch data from Hortonworks Hadoop. I created an ODBC data source on our Windows server (the connection test was successful) and created an external account that uses the ODBC data source name as the Server (there is no database type option for Hadoop). When I go to the Parameters tab of the external account and click "Deploy Functions", I get a success message. But when I run the connection test, it throws the error below.

In the web@Default log I am getting this error:

WDB-200001 SQL statement 'select GetDate()' could not be executed. (iRc=-2006)

ODB-240000 ODBC error: [Hortonworks][Hardy] (80) Syntax or semantic analysis error thrown in server while executing query. Error message from server: Error while compiling statement: FAILED: SemanticException [Error 10011]: Line 1:7 Invalid function 'GetDate' SQLState: S1000 (iRc=-2006)

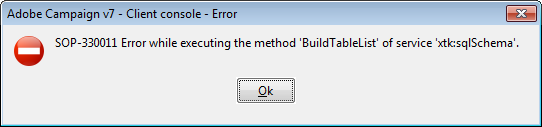

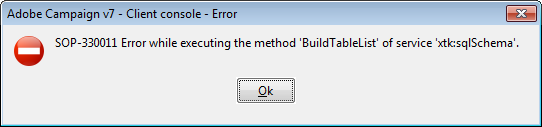

I decided to ignore this and go one step further, so I saved the external account and went on to create a new schema using "Access External Data".

I selected the Hortonworks FDA account, clicked on the Table section, and got the error below.

This time, too, the same error appeared in the default log.

So I decided to try the alternative option.

In a workflow, I added a DBImport activity and selected "Local External Datasource" instead of "Shared external datasource", so it asked me for all the parameters needed to set up the connection.

I selected ODBC as the database engine, as that was the closest matching option, and entered the other connection information. This time, when I clicked the Table Name field, I was able to see all the table names from the Hive views. So I selected one table, picked some columns, added a simple filter criterion, STATE = 'IL' or STATE = 'Illinois', and ran the import activity.

And here is what I got in the log:

| Time | Activity | Message |
| --- | --- | --- |
| 10/18/2018 10:05:24 AM | dbImport | p_ent_cd = N'Illinois')' could not be executed. |
| 10/18/2018 10:05:24 AM | dbImport | WDB-200001 SQL statement 'SELECT D0.acct_nbr, D0.corp_ent_cd, D0.can_cd, D0.can_init_lvl_cd, D0.cdc_actn_cd, D0.dob, D0.eff_dt, D0.first_nm, D0.last_nm, D0.mem_nbr, D0.gndr_cd, D0.grp_nbr FROM default.myTableName D0 WHERE (D0.corp_ent_cd = N'IL') OR (D0.cor |
| 10/18/2018 10:05:24 AM | dbImport | ' ')' in expression specification SQLState: S1000 |
| 10/18/2018 10:05:24 AM | dbImport | ODB-240000 ODBC error: [Hortonworks][Hardy] (80) Syntax or semantic analysis error thrown in server while executing query. Error message from server: Error while compiling statement: FAILED: ParseException line 1:212 cannot recognize input near 'N' ''IL' |

The SQL statements are generated with an "N" before the string literals to mark them as Unicode, but HiveQL apparently doesn't understand that. I am not sure why Adobe adds the Unicode prefix when the string is plain ASCII, or why it is added at all if Hive doesn't understand it. I think this prefix is mostly used in SQL Server.

So I deleted the filter criteria and ran just a basic select. This time I got an error like this:
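To make the difference concrete, here is a minimal Python sketch that rewrites `N'...'` literals into plain `'...'` literals, the form the Hive parser does not reject. It is not part of Adobe Campaign and does not change what the dbImport activity generates; the function name `strip_unicode_prefix` is hypothetical, and the sample statement is a shortened version of the one in the log.

```python
def strip_unicode_prefix(sql: str) -> str:
    """Return sql with the N prefix removed from every N'...' string literal.

    Hypothetical helper for illustration only. It scans the statement once,
    copies existing string literals untouched (honoring '' escapes), and
    drops an N only when it directly precedes a literal and is not the tail
    of an identifier.
    """
    out = []
    i, n = 0, len(sql)
    while i < n:
        ch = sql[i]
        if ch == "'":
            # Copy the whole literal so an N inside it is never touched.
            j = i + 1
            while j < n:
                if sql[j] == "'":
                    if j + 1 < n and sql[j + 1] == "'":
                        j += 2  # escaped quote, stay inside the literal
                        continue
                    break
                j += 1
            out.append(sql[i:j + 1])
            i = j + 1
        elif (
            ch == "N"
            and i + 1 < n
            and sql[i + 1] == "'"
            and (i == 0 or not (sql[i - 1].isalnum() or sql[i - 1] == "_"))
        ):
            i += 1  # drop the prefix; the literal is copied on the next pass
        else:
            out.append(ch)
            i += 1
    return "".join(out)


if __name__ == "__main__":
    generated = (
        "SELECT D0.acct_nbr FROM default.myTableName D0 "
        "WHERE (D0.corp_ent_cd = N'IL')"
    )
    print(strip_unicode_prefix(generated))
```

Running the rewritten statement directly against the ODBC data source (outside Campaign) would be one way to confirm that the prefix alone causes the ParseException.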

| Time | Activity | Message |
| --- | --- | --- |
| 10/18/2018 10:15:21 AM | dbImport | WDB-200001 SQL statement 'SELECT D0.acct_nbr, D0.corp_ent_cd, D0.can_cd, D0.can_init_lvl_cd, D0.cdc_actn_cd, D0.dob, D0.eff_dt, D0.first_nm, D0.last_nm, D0.mem_nbr, D0.gndr_cd, D0.grp_nbr FROM default.member D0' could not be executed. |
| 10/18/2018 10:15:21 AM | dbImport | er.java:319) at org.apac SQLState: S1000 |
| 10/18/2018 10:15:21 AM | dbImport | op.security.AccessControlException: Permission denied: user=e6863001, access=EXECUTE, inode="/test/incoming/raw/Membership/BSTAR/POC3/curr/MEMBER":hdfs:hdfs:drwx------ at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionCheck |
| 10/18/2018 10:15:21 AM | dbImport | ODB-240000 ODBC error: [Hortonworks][Hardy] (80) Syntax or semantic analysis error thrown in server while executing query. Error message from server: Error while compiling statement: FAILED: SemanticException Unable to fetch table member. org.apache.hado |
| 10/18/2018 10:15:09 AM | dbImport | SQL:... _cd, D0.can_cd, D0.can_init_lvl_cd, D0.cdc_actn_cd, D0.dob, D0.eff_dt, D0.first_nm, D0.last_nm, D0.mem_nbr, D0.gndr_cd, D0.grp_nbr FROM default.member D0 |
| 10/18/2018 10:15:09 AM | dbImport | SQL: [{ODBC:e6863001:default/@N06m8mpjyOnyw8sMa9lAgw==@Test]>[default] COPY INTO wkf155766063_2_1 (sAcct_nbr,sCorp_ent_cd,sCan_cd,sCan_init_lvl_cd,sCdc_actn_cd,sDob,sEff_dt,sFirst_nm,sLast_nm,sMem_nbr,sGndr_cd,sGrp_nbr) SELECT D0.acct_nbr, D0.corp_ent |
| 10/18/2018 10:15:09 AM | dbImport | SQL:... rp_ent_cd nvarchar(255), sDob nvarchar(255), sEff_dt nvarchar(255), sFirst_nm nvarchar(255), sGndr_cd nvarchar(255), sGrp_nbr nvarchar(255), sLast_nm nvarchar(255), sMem_nbr nvarchar(255) ); |
| 10/18/2018 10:15:09 AM | dbImport | SQL: -- Log: Creating table 'wkf155766063_2_1' DropTableIfExists 'wkf155766063_2_1'; CREATE TABLE wkf155766063_2_1( sAcct_nbr nvarchar(255), sCan_cd nvarchar(255), sCan_init_lvl_cd nvarchar(255), sCdc_actn_cd nvarchar(255), sCo |

So basically Adobe is trying to create a temp table in the Hadoop database with the select statement, and obviously Hadoop doesn't allow anyone to create tables in its space.
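The `AccessControlException` line in the log above can also be read on its own. The Python sketch below pulls out the user, the requested access, and the owner, group, and mode of the inode, then reports which permission bits apply to that user. The helper names `parse_denial` and `granted_bits` are hypothetical, and the regular expression is written only against the message format shown in this log. The sketch assumes the connecting user is not a member of the `hdfs` group, which the log does not state.

```python
import re

# Pattern for the "Permission denied" text as it appears in the log above.
_DENIAL = re.compile(
    r'user=(?P<user>[^,\s]+), access=(?P<access>\w+), '
    r'inode="(?P<path>[^"]*)":(?P<owner>[^:]+):(?P<group>[^:]+):'
    r'(?P<mode>[d-][rwxtTsS-]{9})'
)


def parse_denial(message):
    """Return the fields of a permission-denied message, or None if absent."""
    match = _DENIAL.search(message)
    return match.groupdict() if match else None


def granted_bits(info, user_groups=()):
    """Return the rwx triplet that applies to the user named in the message.

    user_groups is an assumption supplied by the caller; the log itself does
    not say which groups the user belongs to.
    """
    mode = info["mode"][1:]          # drop the leading file-type character
    if info["user"] == info["owner"]:
        return mode[0:3]             # owner bits
    if info["group"] in user_groups:
        return mode[3:6]             # group bits
    return mode[6:9]                 # other bits


if __name__ == "__main__":
    line = (
        'Permission denied: user=e6863001, access=EXECUTE, '
        'inode="/test/incoming/raw/Membership/BSTAR/POC3/curr/MEMBER"'
        ':hdfs:hdfs:drwx------ at org.apache.hadoop.hdfs.server.namenode'
    )
    info = parse_denial(line)
    print(info["user"], info["access"], granted_bits(info))
```

For this log line the applicable bits are `---`. Under the stated assumption, the user has no execute (traverse) permission on a directory that is owned by `hdfs:hdfs` with mode `drwx------`, which matches the "Permission denied" message reported for the plain select.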

Now my questions:

Why is the external account not working?

What functions does the external account create (I think GetDate() is one of them, and it is causing some of the errors)?

Why are the SQL statements generated with 'N' before the string literals?

How can I stop the creation of temp tables inside Hadoop? Hadoop will never allow a consumer to create temp tables.

I asked another question about the temp tables before, but got no answer. Here is the link: Disable temp table creation for External FDA connections

vipul vaibhav, Amit_Kumar, Vipul Raghav, any help is highly appreciated. This is really urgent, and a huge opportunity will be closed to us if this connection is not successful.