Delete data for a day using DataSource API 1.4

Hi,

We are using DataSource.Upload to insert 1000 records into Adobe Analytics (AA).

While AA was processing the data, an exception occurred because of a special character in record 702. The request failed and returned an exception, but the first 701 records were processed successfully and inserted into the data source in AA.

After that, we fixed the issue upstream and reran the job for the same day, and the job succeeded.

In the AA dashboard, we now get duplicate counts for the first 701 records because the same job was rerun.

I want to delete the first 701 records so that I don't get duplicates in my dashboard. How can I delete records for a particular day? Or is there any other way to fix this issue?

Thanks in advance!

Hey @sagrawal85,

If you have no log of the previous upload, or no access to it, it can be challenging to find the faulty uploads. Depending on how you upload data, you could run a report for all data uploaded on a certain day and create an import to nullify all data from that day. Be aware that if you upload negative values without prior positive uploads, you will end up with those negative values in your report. Correcting data like this is a tedious and manual process, but it's unfortunately all we have.

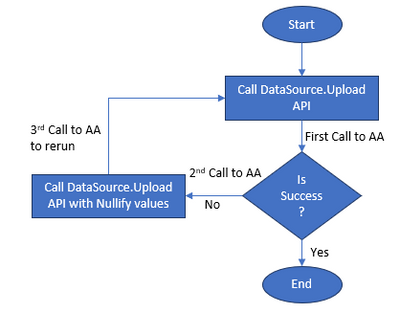

The chart you created looks like a good process to me. Again, be aware that the second call in the chart should contain only the rows from the first call that were uploaded successfully, to avoid negative values in reporting.
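To make the point about the second call concrete, here is a minimal sketch of picking the rows that belong in the correcting upload. It assumes rows are processed in order and that every row before the first failing record was committed, as in the case described in this thread. The function name `rows_to_reverse`, the 1-based `failed_record` argument, and the three-column row layout are hypothetical and are not part of the Data Sources API.

```python
from typing import List, Sequence


def rows_to_reverse(batch: Sequence[Sequence[str]], failed_record: int) -> List[List[str]]:
    """Return only the rows that were committed before the failure.

    failed_record is the 1-based position of the first rejected row
    (702 in this thread), so rows 1..failed_record-1 are returned.
    """
    if failed_record < 1:
        raise ValueError("failed_record is 1-based and must be >= 1")
    return [list(row) for row in batch[: failed_record - 1]]


# Illustrative 1000-row batch: date, dimension value, metric value.
batch = [["01/15/2023", f"item-{i}", "1"] for i in range(1, 1001)]
committed = rows_to_reverse(batch, failed_record=702)
print(len(committed))  # 701
```

Rows from position 702 onward are left out on purpose: they were never applied, so reversing them would leave negative values in the report.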

Good luck!

Need help with Adobe Analytics or Customer Journey Analytics? Let's talk!

Unfortunately, data cannot be deleted after the upload. However, you can upload the data again with negative values, which will nullify the effect of the previous upload.
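As a rough illustration of the negative-value approach, the sketch below builds a correcting batch by flipping the sign of the metric columns and leaving the date and dimension columns untouched. The row layout, the column index, and the helper name `negate_metrics` are assumptions made for this example; the result would still have to be sent with whatever upload call you already use.

```python
from decimal import Decimal
from typing import Iterable, List, Sequence


def negate_metrics(rows: Iterable[Sequence[str]], metric_columns: Sequence[int]) -> List[List[str]]:
    """Copy each row, flipping the sign of the values in metric_columns."""
    corrected = []
    for row in rows:
        new_row = list(row)
        for col in metric_columns:
            # Decimal keeps the original precision ("2.50" becomes "-2.50").
            new_row[col] = str(-Decimal(new_row[col]))
        corrected.append(new_row)
    return corrected


# Illustrative rows: date, dimension value, metric value (column 2).
uploaded = [
    ["01/15/2023", "item-1", "1"],
    ["01/15/2023", "item-2", "2.50"],
]
correction = negate_metrics(uploaded, metric_columns=[2])
```

Only rows that were actually applied should be passed in, since negating a row that was never uploaded leaves a negative value in reporting.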

Hi @FrederikWerner,

Thanks for responding.

How will I know how many records I need to nullify before uploading the same data again? The exception occurred during the upload, and only some of the records were inserted successfully.

Also, to resolve this issue, I need to call the API two more times: once to nullify and once to upload the same records again. So in total I need to call the API three times.

Is my understanding below correct? If yes, is this the right way to handle data duplication?

Thanks!
