Tuesday Tech Bytes: Unleashing AEM Insights Weekly

Welcome to 'Tuesday Tech Bytes,' your weekly source for expert insights on AEM. Join us as we explore a range of valuable topics, from optimizing your AEM experience to best practices, integrations, success stories, and hidden gems. Tune in every Tuesday for a byte-sized dose of AEM wisdom!

Get ready to meet our blog authors:

Introducing Anmol Bhardwaj, an AEM Technical Lead at TA Digital, with seven years of rich experience in AEM and UI. In his spare time, Anmol is the curator of the popular blog, "5 Things," where he brilliantly simplifies intricate AEM concepts into just five key points.

And here's Aanchal Sikka, bringing 14 years' expertise in AEM Sites and Assets to our discussions. She's also an active blogger on https://techrevel.blog/, with a recent penchant for in-depth dives into various AEM topics.

Together, Anmol and Aanchal will be your guides on an exhilarating journey over the next 8 weeks, exploring themes such as:

- 🔮 Theme 1 - AEM Tips & Tricks

- 🏆 Theme 2 - AEM Best Practices

- 🌐 Theme 3 - AEM Integrations & Success Stories

- 🔮 Theme 4 - AEM Golden Nuggets

We are absolutely thrilled to embark on this journey of learning and growth with all of you.

We warmly invite you to engage with our posts by liking, commenting, and sharing. If there are specific topics you'd like us to delve into, please don't hesitate to let us know.

Together, let's ignite discussions, share invaluable insights, and collectively ensure the resounding success of this program!

Quicklinks to each tech byte:

- Adaptive Image Rendering for AEM components

- Tips & Tricks - Part 1 (Sling Model, Servlet, Jobs, Request filter, Injector)

- Tips & Tricks - Part 2 (Developer mode, Unit tests, Keyboard shortcuts, custom health check)

- Basic Guidelines: Content Fragment Models and GraphQL Queries for AEM Headless Implementation

- AEM Performance Optimization: Best Practices for Speed and Scalability

- Integrating AEM with CIF & Developing AEM Commerce Projects on AEM

- Building Robust AEM Integrations

- AEM as a Cloud Service Migration Journey

- Tools for Streamlining Development and Maintenance

Kindly switch the sorting option to "Newest to Oldest" for instant access to the most recent content every Tuesday.

Aanchal Sikka

Adaptive Image Rendering for AEM components

In the realm of Adobe Experience Manager (AEM) development, optimizing images within AEM components is crucial. One highly effective method for achieving this optimization is by using adaptive rendering.

What are Adaptive Images?

Adaptive images are a web development technique that ensures images on a website are delivered in the most suitable size and format for each user’s device and screen resolution. This optimization enhances website performance and user experience by reducing unnecessary data transfer and ensuring images look sharp and load quickly on all devices.

Example:

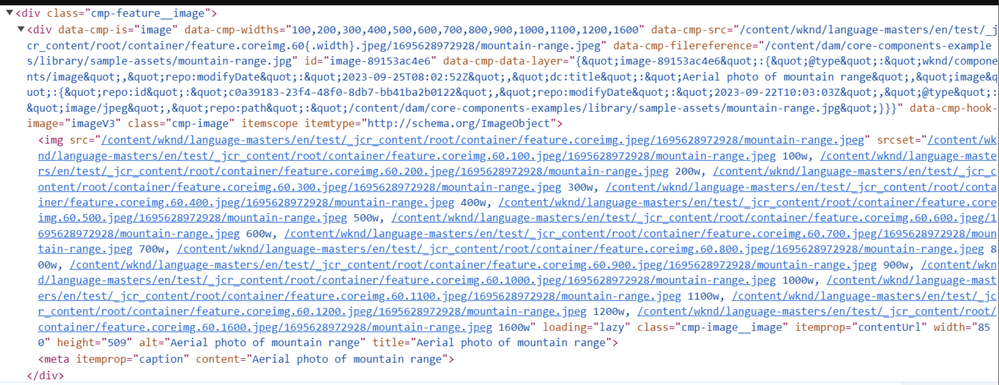

Let’s analyze the example, with a primary emphasis on the srcset attribute for the current blog:

- <div data-cmp-is="image" ...>: This is the HTML element generated by the Image component. Its attributes and data provide information about the image.

- data-cmp-widths="100,200,300,400,500,600,700,800,900,1000,1100,1200,1600": These are the widths at which the image is available. They are used to serve the most appropriate image size to the user’s device.

- data-cmp-src="/content/wknd/language-masters/en/test/_jcr_content/root/container/feature.coreimg.60{.width}.jpeg/1695628972928/mountain-range.jpeg": This attribute defines the source URL of the image. Notice the {.width} placeholder, which is dynamically replaced with the appropriate width value for the user’s device.

- data-cmp-filereference="/content/dam/core-components-examples/library/sample-assets/mountain-range.jpg": This is a reference to the original image asset stored in the DAM.

- srcset="...": This attribute specifies a list of image sources with different widths and their corresponding URLs. Browsers use this information to select the best image size to download based on the user’s screen size and resolution.

- loading="lazy": This attribute indicates that the image should be loaded lazily, meaning it is only loaded when it is about to enter the user’s viewport, improving page load performance.
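To make the relationship between data-cmp-src, data-cmp-widths and srcset concrete, here is a minimal Java sketch. It assumes the {.width} placeholder is replaced by a dot followed by the width (for example ".600"); the class and method names are hypothetical and are not part of AEM or the Core Components.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for illustration only; not an AEM or Core Components API. */
final class AdaptiveImageUrls {

    /** Replaces the {.width} placeholder with ".<width>" (assumed expansion rule). */
    static String expand(String srcTemplate, int width) {
        return srcTemplate.replace("{.width}", "." + width);
    }

    /** Builds a srcset value: one "<url> <width>w" entry per configured width. */
    static String srcset(String srcTemplate, int[] widths) {
        List<String> entries = new ArrayList<>();
        for (int width : widths) {
            entries.add(expand(srcTemplate, width) + " " + width + "w");
        }
        return String.join(", ", entries);
    }

    public static void main(String[] args) {
        String template = "/content/wknd/language-masters/en/test/_jcr_content/root/container/"
                + "feature.coreimg.60{.width}.jpeg/1695628972928/mountain-range.jpeg";
        // Prints three comma-separated candidates for the widths 100, 200 and 300
        System.out.println(srcset(template, new int[] {100, 200, 300}));
    }
}
```

The widths passed in would normally come from the Image component policy, which is why the policy determines the URLs that end up in the markup.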

How Browsers Choose Images with srcset Based on Device Characteristics:

- Device Pixel Ratio (DPR): The browser first checks the device pixel ratio, which is the number of physical pixels used to represent each CSS pixel. (DPR is related to, but not the same as, DPI.) Common values are 1x (standard-density screens) and 2x (high-density screens, like Retina displays).

- Viewport Size: The browser knows the dimensions of the user’s viewport, which is the visible area of the webpage within the browser window. With width descriptors it is the width that matters: unless a sizes attribute says otherwise, the browser assumes the image is displayed at the full viewport width.

- Image Size Descriptors: Each source in the srcset list is associated with a width descriptor (e.g., 100w, 200w) stating the intrinsic width of that image file in pixels. These descriptors tell the browser how large each source is without downloading it.

- Network Conditions: Browsers may also consider the user’s network conditions, such as available bandwidth, to optimize image loading. Smaller images may be prioritized for faster loading on slower connections.

- Calculation of Required Pixels: The browser multiplies the width at which the image will be displayed (in CSS pixels) by the DPR. The result is the number of physical pixels needed to render the image sharply.

- Selecting the Most Appropriate Image: The browser compares the width descriptors with the required pixel count. It typically chooses the smallest source that is at least that wide; if no source is wide enough, it falls back to the largest one available.

- Loading Only One Image: The browser downloads only the selected source from the srcset list. This approach optimizes performance and reduces unnecessary data transfer.

Example:

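<img src="image-300w.jpg" srcset="image-100w.jpg 100w, image-200w.jpg 200w, image-300w.jpg 300w" alt="Example Images">

Suppose the browser detects a device with a DPR of 2x and a viewport width of 400 CSS pixels. Because there is no sizes attribute, the image is assumed to span the full viewport, so roughly 400 x 2 = 800 pixels are needed. None of the three sources is that wide, so the browser typically falls back to the largest one, image-300w.jpg. On a 1x device with a 200-pixel-wide viewport, it would pick image-200w.jpg instead. In both cases only the selected file is downloaded, not all three images.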

By dynamically selecting the most appropriate image source from the srcset, browsers optimize page performance and ensure that images look sharp on high-resolution screens while minimizing unnecessary data transfer on lower-resolution devices.
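The selection logic can be sketched in a few lines of Java. This is a simplified model under stated assumptions, not a browser API: real browsers may also weigh network conditions or cached images, and the class and method names here are hypothetical.

```java
/** Hypothetical model of width-descriptor selection; real browsers may deviate. */
final class SrcsetPicker {

    /**
     * Returns the width descriptor of the candidate a browser would typically pick:
     * the smallest candidate with at least displayWidthCssPx * dpr pixels,
     * or the largest candidate when none is wide enough.
     */
    static int pickWidth(int[] candidateWidths, double displayWidthCssPx, double dpr) {
        if (candidateWidths.length == 0) {
            throw new IllegalArgumentException("srcset must contain at least one candidate");
        }
        double neededPixels = displayWidthCssPx * dpr;
        int smallestSufficient = -1; // -1 means "no sufficient candidate found yet"
        int largest = candidateWidths[0];
        for (int width : candidateWidths) {
            largest = Math.max(largest, width);
            if (width >= neededPixels && (smallestSufficient == -1 || width < smallestSufficient)) {
                smallestSufficient = width;
            }
        }
        return smallestSufficient != -1 ? smallestSufficient : largest;
    }

    public static void main(String[] args) {
        int[] widths = {100, 200, 300};
        // 400 CSS px at 2x needs 800 px; nothing is that wide, so the largest (300) is used
        System.out.println(pickWidth(widths, 400, 2.0));
        // 200 CSS px at 1x needs 200 px; the 200w candidate is an exact fit
        System.out.println(pickWidth(widths, 200, 1.0));
    }
}
```

The displayWidthCssPx parameter stands for the width the image occupies on the page. Without a sizes attribute, the browser assumes this is the full viewport width.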

Implementing adaptive images for Components

In Adobe Experience Manager (AEM), both ResourceWrapper and data-sly-resource are used for including or rendering content from other resources within your AEM components. However, they serve slightly different purposes and are used in different contexts:

ResourceWrapper:

- Purpose: ResourceWrapper is a Java class used on the server side to manipulate and control the rendering of resources within your AEM components.

- Usage:

  - You use ResourceWrapper when you want to programmatically control how a resource or component is rendered.

  - This approach is also used by WCM Core components like Teaser.

- Benefit: Built into a shared abstract model class, ResourceWrapper provides advantages in terms of reusability, consistency, and streamlined development. In the context of components responsible for displaying images, developers can extend this abstract class and configure a property on the custom component. This approach expedites the development process, guarantees uniformity, simplifies customization, and, in the end, elevates efficiency and ease of maintenance.

data-sly-resource:

- Purpose: data-sly-resource is an HTL (HTML Template Language) block statement, evaluated on the server, that includes and renders other resources or components within your HTL templates.

- Usage:

  - You use data-sly-resource when you want to include and render other AEM components or resources within your HTL templates.

- Example:

<sly data-sly-resource="${resource.path @ resourceType='wknd/components/image'}"></sly>

Our focus will be on harnessing the power of the ResourceWrapper class from the Apache Sling API as a crucial tool for this purpose.

Understanding ResourceWrapper

The ResourceWrapper acts as a protective layer for any Resource, automatically forwarding all method calls to the enclosed resource by default.

To put it simply, in order to render an adaptive image in our custom component:

- We need to integrate a fileUpload field.

- Wrap this component’s resource using ResourceWrapper. The resource type associated with the image component will be added to the Wrapper.

- As a result, this newly enveloped resource can be effortlessly presented through the Image component.

It’s a straightforward process!
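The idea behind the wrapper can be illustrated without any Sling dependency. The sketch below uses a simplified stand-in interface, SimpleResource, which is hypothetical and much smaller than the real org.apache.sling.api.resource.Resource. The resource type techrevel/components/feature is also an assumption made for the example.

```java
import java.util.HashMap;
import java.util.Map;

/** Simplified, hypothetical stand-in for a Sling Resource (not the real API). */
interface SimpleResource {
    String getPath();
    String getResourceType();
    Map<String, Object> getProperties();
}

/** Forwards every call to the wrapped resource, as a plain wrapper does by default. */
class SimpleResourceWrapper implements SimpleResource {
    private final SimpleResource wrapped;

    SimpleResourceWrapper(SimpleResource wrapped) {
        this.wrapped = wrapped;
    }

    @Override public String getPath() { return wrapped.getPath(); }
    @Override public String getResourceType() { return wrapped.getResourceType(); }
    @Override public Map<String, Object> getProperties() { return wrapped.getProperties(); }
}

/** Overrides only the resource type; path and all other properties stay untouched. */
class ImageTypeOverrideWrapper extends SimpleResourceWrapper {
    private final String resourceType;
    private final Map<String, Object> properties;

    ImageTypeOverrideWrapper(SimpleResource wrapped, String resourceType) {
        super(wrapped);
        if (resourceType == null || resourceType.isEmpty()) {
            throw new IllegalArgumentException("resourceType must not be null or empty");
        }
        this.resourceType = resourceType;
        // Copy the properties so the wrapped resource itself is never modified
        this.properties = new HashMap<>(wrapped.getProperties());
        this.properties.put("sling:resourceType", resourceType);
    }

    @Override public String getResourceType() { return resourceType; }
    @Override public Map<String, Object> getProperties() { return properties; }
}

final class WrapperDemo {
    /** A sample component resource with an uploaded image reference. */
    static SimpleResource featureResource() {
        return new SimpleResource() {
            @Override public String getPath() { return "/content/site/page/jcr:content/feature"; }
            @Override public String getResourceType() { return "techrevel/components/feature"; }
            @Override public Map<String, Object> getProperties() {
                return Map.of("fileReference",
                        "/content/dam/core-components-examples/library/sample-assets/mountain-range.jpg");
            }
        };
    }
}
```

Wrapping WrapperDemo.featureResource() with the type techrevel/components/image yields an object that still points at the same path and image reference but reports the Image resource type, which is what lets the Image component render it.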

Now let's get into the details.

Step 1: Create an Image Resource Wrapper

The first step is to create an Image Resource Wrapper. This wrapper will be responsible for encapsulating the Image Resource and appending the Image ResourceType to the corresponding value map. This wrapped instance can then be used by Sightly to render the resource using the ResourceType. Please keep in mind that the URLs generated by Sightly depend on the policy of the Image component used.

Link to complete class: ImageResourceWrapper

public class ImageResourceWrapper extends ResourceWrapper {

    private ValueMap valueMap;
    private String resourceType;

    // Constructor to wrap a Resource and set a custom resource type
    public ImageResourceWrapper(@NotNull Resource resource, String resourceType) {
        super(resource);
        if (StringUtils.isEmpty(resourceType)) {
            // Validate that a resource type is provided
            throw new IllegalArgumentException("The " + ImageResourceWrapper.class.getName() + " needs to override the resource type of " +
                    "the wrapped resource, but the resourceType argument was null or empty.");
        }
        this.resourceType = resourceType;
        // Create a ValueMapDecorator to manipulate the ValueMap of the wrapped resource
        valueMap = new ValueMapDecorator(new HashMap<>(resource.getValueMap()));
    }

    ....
}

Step 2: Create an Abstract Class for Reusability

Link to Complete class: AbstractImageDelegatingModel

public abstract class AbstractImageDelegatingModel extends AbstractComponentImpl {

    /**
     * Component property name that indicates which Image Component will perform the image rendering for composed components.
     * When rendering images, the composed components that provide this property will be able to retrieve the content policy defined for the
     * Image Component's resource type.
     */
    public static final String IMAGE_DELEGATE = "imageDelegate";

    private static final Logger LOGGER = LoggerFactory.getLogger(AbstractImageDelegatingModel.class);

    // Resource to be wrapped by the ImageResourceWrapper
    private Resource toBeWrappedResource;

    // Resource that will handle image rendering
    private Resource imageResource;

    /**
     * Sets the resource to be wrapped by the ImageResourceWrapper.
     *
     * @param toBeWrappedResource The resource to be wrapped.
     */
    protected void setImageResource(@NotNull Resource toBeWrappedResource) {
        this.toBeWrappedResource = toBeWrappedResource;
    }

    /**
     * Retrieves the resource responsible for image rendering. If not set, it creates an ImageResourceWrapper based on the
     * configured imageDelegate property.
     *
     * @return The image resource.
     */
    @JsonIgnore
    public Resource getImageResource() {
        if (imageResource == null && component != null) {
            String delegateResourceType = component.getProperties().get(IMAGE_DELEGATE, String.class);
            if (StringUtils.isEmpty(delegateResourceType)) {
                LOGGER.error("In order for image rendering delegation to work correctly, you need to set up the imageDelegate property on" +
                        " the {} component; its value has to point to the resource type of an image component.", component.getPath());
            } else {
                imageResource = new ImageResourceWrapper(toBeWrappedResource, delegateResourceType);
            }
        }
        return imageResource;
    }

    /**
     * Checks if the component has an image.
     *
     * The component has an image if the '{@value DownloadResource#PN_REFERENCE}' property is set and the value
     * resolves to a resource, or if the '{@value DownloadResource#NN_FILE}' child resource exists.
     *
     * @return True if the component has an image, false if it does not.
     */
    protected boolean hasImage() {
        return Optional.ofNullable(this.resource.getValueMap().get(DownloadResource.PN_REFERENCE, String.class))
                .map(request.getResourceResolver()::getResource)
                .orElseGet(() -> request.getResource().getChild(DownloadResource.NN_FILE)) != null;
    }

    /**
     * Initializes the image resource if the component has an image.
     */
    protected void initImage() {
        if (this.hasImage()) {
            this.setImageResource(request.getResource());
        }
    }
}

Step 3: Create a Model for Sightly Rendering

Link to Complete class: FeatureImpl

Link to Complete interface: Feature

@Model(adaptables = SlingHttpServletRequest.class, adapters = Feature.class, defaultInjectionStrategy = DefaultInjectionStrategy.OPTIONAL)
public class FeatureImpl extends AbstractImageDelegatingModel implements Feature {

    // Injecting the 'jcr:title' property from the ValueMap
    @ValueMapValue(name = "jcr:title")
    private String title;

    /**
     * Post-construct method to initialize the image resource if the component has an image.
     */
    @PostConstruct
    private void init() {
        initImage();
    }

    public String getTitle() {
        return title;
    }
}

Step 4: Sightly Code for Rendering

<sly data-sly-template.image="${@ feature}">
    <div class="cmp-feature__image" data-sly-test="${feature.imageResource}" data-sly-resource="${feature.imageResource @ wcmmode='disabled'}"></div>
</sly>

These steps provide a structured approach to implement adaptive images for custom components using the WCM Core Image component in Adobe Experience Manager. Customize the Model and Sightly code as needed to suit your specific project requirements and image rendering policies.

Step 5: Configure Image Resource Type

In this step, you will configure which Image Resource Type the component delegates its image rendering to. This involves setting the imageDelegate property to a specific value, such as techrevel/components/image, on the component definition (the cq:Component node). This definition is crucial as it specifies which Image Resource Type, and therefore which content policy, will be used for rendering.

Link to the component in ui.apps: Feature

<?xml version="1.0" encoding="UTF-8"?>
<jcr:root xmlns:jcr="http://www.jcp.org/jcr/1.0" xmlns:cq="http://www.day.com/jcr/cq/1.0" xmlns:sling="http://sling.apache.org/jcr/sling/1.0"
    jcr:primaryType="cq:Component"
    jcr:title="Feature"
    componentGroup="Techrevel AEM Site - Content"
    sling:resourceSuperType="core/wcm/components/image/v3/image"
    imageDelegate="techrevel/components/image"/>

Step 6: Add Image Upload Field to the Component’s Dialog

Link to the complete code of component’s dialog

<file
    granite:class="cmp-image__editor-file-upload"
    jcr:primaryType="nt:unstructured"
    sling:resourceType="cq/gui/components/authoring/dialog/fileupload"
    class="cq-droptarget"
    enableNextGenDynamicMedia="{Boolean}true"
    fileNameParameter="./fileName"
    fileReferenceParameter="./fileReference"
    mimeTypes="[image/gif,image/jpeg,image/png,image/tiff,image/svg+xml]"
    name="./file"/>

Step 7: Verify Component Availability

Ensure the component is available for use in your templates so that it can be added to pages. Additionally, it’s crucial to verify that you have appropriately configured the policy of the Image component. The policy of the Image component plays a pivotal role in generating the adaptive image’s URL, ensuring it aligns with your project’s requirements for image optimization and delivery.

At this point, with the knowledge and steps outlined in this guide, you should be fully equipped to configure images within your custom component.

When you inspect the URL of the image, it should now appear in a format optimized for adaptive rendering, ensuring that it seamlessly adapts to various device contexts and screen sizes.

This level of image optimization not only enhances performance but also significantly contributes to an improved user experience.

Aanchal Sikka


Interesting article. Will this load an image based on the resolution? For example, I have divided my page into 4 columns and I want to add an image to each column, so each image slot is small. I have an image with 2K resolution that is 15 MB in size, but the page design needs just 300x300. Will this approach load only a 300x300 image instead of the 2K resolution image?


I don't think it will do that. It will choose the image size based on your viewport and DPR, not on the width of the container in which the image is used.

For example, if your screen is 400px x 700px with a DPR of 2 and you have divided the screen into 4 columns of 100px each, then each column only needs an image 100px x 2 = 200px wide, which would be sufficient for good quality. But the browser doesn't know this, since we control the size using CSS, so it uses the viewport width instead: 400px x 2 = 800px. The image nearest to 800px wide will therefore be used for each column.


AEM Tips & Tricks

Hello Everyone

Excited & thrilled to embark on this journey of learning and growth with all of you.

Following this week's theme for Tuesday Tech Bytes.

- The implementation doesn't support the target type.

- An adapter factory handling the conversion isn't active, possibly due to missing service references.

- Internal conditions within AEM fail.

- Required services are simply unavailable.

try {
    MyModel model = modelFactory.createModel(object, MyModel.class);
} catch (Exception e) {
    // Display an error message explaining why the model couldn't be instantiated.
    // The exception contains valuable information.
    // MissingElementsException - When no injector could provide required values with the given types
    // InvalidAdaptableException - If the given class can't be instantiated from the adaptable (a different adaptable than expected)
    // ModelClassException - If model instantiation failed due to missing annotations, reflection issues, lack of a valid constructor, unregistered model as an adapter factory, or issues with post-construct methods
    // PostConstructException - In case the post-construct method itself throws an exception
    // ValidationException - If validation couldn't be performed for some reason (e.g., no validation information available)
    // InvalidModelException - If the model type couldn't be validated through model validation
}

@Model(adaptables = Resource.class)
public class FootballArticleModel {

    @Inject
    private String title;

    @Inject
    private Date publicationDate;

    // Getter methods for title and publicationDate
}

@Model(adaptables = Resource.class)
public class BasketballArticleModel {

    @Inject
    private String title;

    @Inject
    private Date publicationDate;

    // Getter methods for title and publicationDate
}

// Common Sports Article Interface
public interface SportsArticle {
    String getTitle();
    Date getPublicationDate();
}

// Sling Model for Football Article
@Model(adaptables = Resource.class)
public class FootballArticleModel implements SportsArticle {

    @Inject
    private String title;

    @Inject
    private Date publicationDate;

    // Implementing methods from the SportsArticle interface
    public String getTitle() {
        return title;
    }

    public Date getPublicationDate() {
        return publicationDate;
    }
}

- If you need to update the common properties' behavior (e.g., `getTitle`) for all sports articles, you can do so in one place (the interface) instead of modifying multiple Sling Models.

- When working with instances of these models in your AEM components, you can adapt them to the `SportsArticle` interface, simplifying your code and promoting consistency across various sports article types.

- This approach promotes code reusability, maintainability, and flexibility in your AEM project, making it easier to handle different types of sports-related content with shared properties.
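As a rough illustration of why the shared interface helps, the sketch below uses plain Java classes as stand-ins for the Sling Models (no `@Model` annotations or injection, so it runs without AEM). The `ArticleListing` helper and the constructor-based classes are hypothetical and exist only for this example.

```java
import java.util.Comparator;
import java.util.Date;
import java.util.List;
import java.util.stream.Collectors;

/** Shared contract, as in the post. */
interface SportsArticle {
    String getTitle();
    Date getPublicationDate();
}

/** Plain stand-in for a football Sling Model (hypothetical, no injection). */
final class FootballArticle implements SportsArticle {
    private final String title;
    private final Date publicationDate;

    FootballArticle(String title, Date publicationDate) {
        this.title = title;
        this.publicationDate = publicationDate;
    }

    @Override public String getTitle() { return title; }
    @Override public Date getPublicationDate() { return publicationDate; }
}

/** Plain stand-in for a basketball Sling Model (hypothetical, no injection). */
final class BasketballArticle implements SportsArticle {
    private final String title;
    private final Date publicationDate;

    BasketballArticle(String title, Date publicationDate) {
        this.title = title;
        this.publicationDate = publicationDate;
    }

    @Override public String getTitle() { return title; }
    @Override public Date getPublicationDate() { return publicationDate; }
}

/** Consumer code that only knows about the interface, never the concrete types. */
final class ArticleListing {
    static List<String> titlesNewestFirst(List<SportsArticle> articles) {
        Comparator<SportsArticle> newestFirst =
                Comparator.comparing(SportsArticle::getPublicationDate).reversed();
        return articles.stream()
                .sorted(newestFirst)
                .map(SportsArticle::getTitle)
                .collect(Collectors.toList());
    }
}
```

Because `ArticleListing` depends only on `SportsArticle`, adding another sport later would not require touching the listing code.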

- Enhanced Access Control: Servlets bound to specific paths often lack the flexibility to be effectively access-controlled using the default JCR repository Access Control Lists (ACLs). On the other hand, resource-type-bound servlets can be seamlessly integrated into your access control strategy, providing a more secure environment.

- Suffix Handling: Path-bound servlets are, by design, limited to a single path. In contrast, resource type-based mappings open the door to handling various resource suffixes elegantly. This versatility allows you to serve diverse content and functionalities without the need for multiple servlet registrations.

- Avoid Unexpected Consequences: When a path-bound servlet becomes inactive (e.g., due to a missing or non-started bundle), it can lead to unintended consequences, like POST requests creating nodes at unexpected paths. This can introduce unexpected complexities and issues in your application. Resource type mappings provide better control and predictability.

- Developer-Friendly Transparency: For developers working with the repository, path-bound servlet mappings may not be readily apparent. In contrast, resource type mappings provide a more transparent and intuitive way of understanding how servlets are associated with specific resources. This transparency simplifies development and troubleshooting.

@Component(service = MyEventTrigger.class)
public class MyEventTrigger {

    @Reference
    private JobManager jobManager;

    public void triggerAsyncEvent() {
        // Create a job description for your asynchronous task
        String jobTopic = "my/async/job/topic";

        // Optional properties that are passed along to the job consumer
        Map<String, Object> jobProperties = new HashMap<>();

        // Add the job to the queue for asynchronous processing
        jobManager.addJob(jobTopic, jobProperties);
    }
}

@Component(
    service = JobConsumer.class,
    property = {
        JobConsumer.PROPERTY_TOPICS + "=my/async/job/topic"
    }
)
public class MyJobConsumer implements JobConsumer {

    private final Logger logger = LoggerFactory.getLogger(getClass());

    @Override
    public JobResult process(Job job) { // The Job is passed as an argument; its properties are available here.
        try {
            logger.info("Processing asynchronous job...");
            // Your business logic for processing the job goes here
            return JobConsumer.JobResult.OK;
        } catch (Exception e) {
            logger.error("Error processing the job: {}", e.getMessage(), e);
            return JobConsumer.JobResult.FAILED;
        }
    }
}

import org.apache.sling.api.SlingHttpServletRequest;
import org.apache.sling.api.wrappers.SlingHttpServletRequestWrapper;
import org.osgi.service.component.annotations.Component;

import javax.servlet.Filter;
import javax.servlet.FilterChain;
import javax.servlet.FilterConfig;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import java.io.IOException;

@Component(
    service = Filter.class,
    property = {
        "sling.filter.scope=request",
        "sling.filter.pattern=/content/mywebsite/en.*" // Define the URL pattern for your pages
    }
)
public class CustomSuffixRequestFilter implements Filter {

    @Override
    public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain)
            throws IOException, ServletException {
        if (!(request instanceof SlingHttpServletRequest)) {
            // Not a Sling request: pass it on unchanged
            chain.doFilter(request, response);
            return;
        }
        SlingHttpServletRequest slingRequest = (SlingHttpServletRequest) request;

        // Get the original request URL and add a custom suffix to it
        final String modifiedURL = slingRequest.getRequestURI() + ".customsuffix";

        // Wrap the request so that downstream code sees the modified URL
        SlingHttpServletRequest modifiedRequest = new SlingHttpServletRequestWrapper(slingRequest) {
            @Override
            public String getRequestURI() {
                return modifiedURL;
            }
        };

        // Continue the request chain with the modified request
        chain.doFilter(modifiedRequest, response);
    }

    @Override
    public void init(FilterConfig filterConfig) throws ServletException {
        // Initialization code if needed
    }

    @Override
    public void destroy() {
        // Cleanup code if needed
    }
}

- Authentication of requests

- As well as authentication of all the servlet requests coming into a server

- Checking the resource type, path, and requests coming from a particular page, etc.

It can act as an extra layer of security or an additional layer of functionality before a request reaches a servlet.

@Target({ElementType.FIELD})
@Retention(RetentionPolicy.RUNTIME)
// Declaration for custom injector.
@InjectAnnotation
// Identifier in the Injector class itself
@Source("custom-header")
public @interface InheritedValueMapValue {
    String property() default "";
    InjectionStrategy injectionStrategy() default InjectionStrategy.DEFAULT;
}

// Implement a custom injector for request headers by implementing the `org.apache.sling.models.spi.Injector` interface.
@Component(property = Constants.SERVICE_RANKING + ":Integer=" + Integer.MAX_VALUE, service = Injector.class)
public class CustomHeaderInjector implements Injector {

    @Override
    public String getName() {
        return "custom-header"; // identifier used again
    }

    @Override
    public Object getValue(Object adaptable, String fieldName, Type type, AnnotatedElement element,
            DisposalCallbackRegistry callbackRegistry) {
        if (adaptable instanceof SlingHttpServletRequest) {
            SlingHttpServletRequest request = (SlingHttpServletRequest) adaptable;
            String customHeaderValue = request.getHeader("X-Custom-Header");
            if (customHeaderValue != null) {
                return customHeaderValue;
            }
        }
        return null;
    }
}

Use the @Inject annotation to seamlessly inject the custom header value into your component.

@Model(adaptables = SlingHttpServletRequest.class)
public class CustomHeaderComponent {

    @Inject
    private String customHeaderValue; // This will be automatically populated by the custom injector

    // Your component logic here, using customHeaderValue
}

public class CustomHeaderComponent {

    @Inject
    private String customHeaderValue;

    public String getCustomHeaderValue() {
        return customHeaderValue;
    }

    // Other methods to work with the custom header value
}

Anmol Bhardwaj


AEM Tips & Tricks

- Discover Errors with Ease, Navigate Nodes in a Click

- Effortless Error Detection: You can find errors in your Sling Models without leaving the page. The error section of developer mode shows the entire stack trace.

- Point-and-Click Node Navigation: You can easily navigate to your component node, or even its scripts, in CRX through the hyperlink present in developer mode.

- Policies Applied to Component: With a click of a button, you can check what policies are applied to a particular component.

- Component Details: You can check out your component details through the hyperlink present in developer mode. It gives clear and detailed information about your component (including policies, live usage & documentation).

Author Perspective:

- Live Usage / Component Report: You can also get all the instances and references where the selected component is being used. This is really helpful for developers, BAs, and authors.

- Component Documentation & Authoring Guide: The documentation tab in the component details points to the component documentation, which can be customised to add an authoring guide for the component and so help authors use it better.

@ExtendWith({MockitoExtension.class, AemContextExtension.class})
class MyComponentTest {

    private final AemContext context = new AemContext();

    @Mock
    private ExternalDataService externalDataService;

    @BeforeEach
    void setUp() {
        // Set up AEM context with necessary resources and sling models
        context.create().resource("/content/my-site");
        context.addModelsForClasses(MyContentModel.class);
        context.registerService(ExternalDataService.class, externalDataService);
    }

    @Test
    void testMyComponentWithData() {
        // Mock behavior of the external service
        when(externalDataService.getData("/content/my-site")).thenReturn("Mocked Data");

        // Create an instance of your component
        MyComponent component = context.request().adaptTo(MyComponent.class);

        // Test component logic when data is available
        String result = component.renderContent();

        // Verify interactions and assertions
        verify(externalDataService).getData("/content/my-site");
        assertEquals("Expected Result: Mocked Data", result);
    }

    @Test
    void testMyComponentWithoutData() {
        // Mock behavior of the external service when data is not available
        when(externalDataService.getData("/content/my-site")).thenReturn(null);

        // Create an instance of your component
        MyComponent component = context.request().adaptTo(MyComponent.class);

        // Test component logic when data is not available
        String result = component.renderContent();

        // Verify interactions and assertions
        verify(externalDataService).getData("/content/my-site");
        assertEquals("Fallback Result: No Data Available", result);
    }
}

| Framework | Purpose and Use Cases | When to Use | When to Avoid |
|---|---|---|---|
| JUnit 5 | Standard unit testing framework for writing test cases and assertions. | Writing unit tests for Java classes and components. | Not suitable for simulating AEM-specific environments or mocking AEM services. |
| Mockito | Mocking dependencies and simulating behavior. | Mocking external dependencies (e.g., services, databases); verifying interactions between code and mocks. | Not designed for simulating AEM-like environments or AEM-specific behaviors; cannot create AEM resources or mock AEM-specific functionalities. |
| Apache Sling Mocks | Simulating AEM-like behavior and Sling-specific functionality. | Simulating AEM-like environment for testing AEM components and services; resource creation and management. | Not suitable for pure unit testing of Java classes or external dependencies; overkill for basic unit tests without AEM-specific behavior. |
| AEM Mocks Test Framework | Comprehensive AEM unit testing with Sling models. | Testing AEM components, services, and behaviors in an AEM-like environment; simulating AEM-specific behaviors and resources. | May introduce complexity for simple unit tests of Java classes or external dependencies; overhead if you don't need to simulate AEM behaviors or use Sling models. |

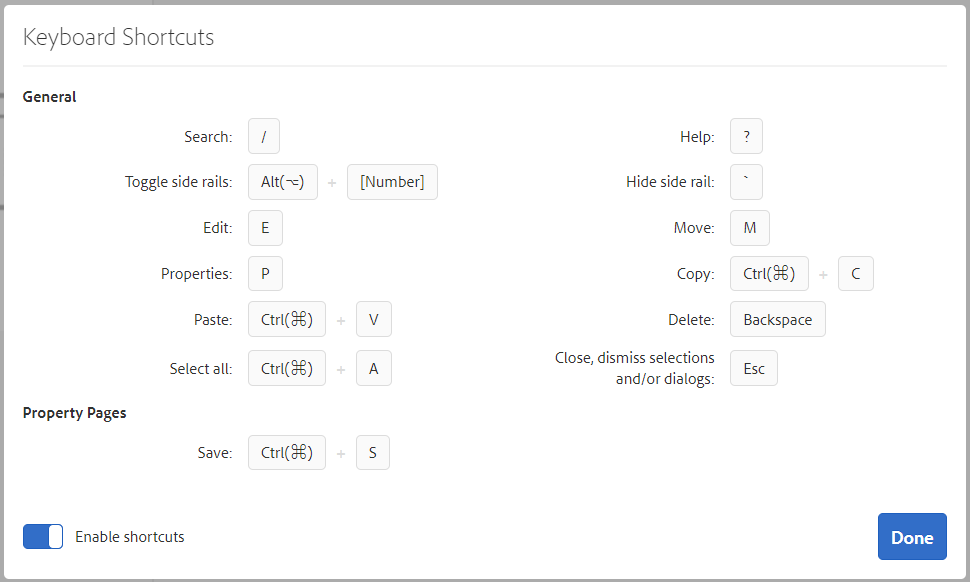

| Shortcut | Description |
|---|---|
| Ctrl-Shift-m | Toggle between Preview and the selected mode |

- Sites > Tools > Operations > Health Reports through Navigation

<dependency>
    <groupId>org.apache.felix</groupId>
    <artifactId>org.apache.felix.healthcheck.api</artifactId>
    <version>2.0.4</version>
    <scope>provided</scope>
</dependency>
<dependency>
    <groupId>org.apache.felix</groupId>
    <artifactId>org.apache.felix.healthcheck.annotation</artifactId>
    <version>2.0.0</version>
    <scope>provided</scope>
</dependency>

/*

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing,

* software distributed under the License is distributed on an

* "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

* KIND, either express or implied. See the License for the

* specific language governing permissions and limitations under the License.

*/

package org.apache.felix.hc.generalchecks;

import static org.apache.felix.hc.api.FormattingResultLog.bytesHumanReadable;

import java.io.File;

import java.util.Arrays;

import org.apache.felix.hc.annotation.HealthCheckService;

import org.apache.felix.hc.api.FormattingResultLog;

import org.apache.felix.hc.api.HealthCheck;

import org.apache.felix.hc.api.Result;

import org.apache.felix.hc.api.ResultLog;

import org.osgi.service.component.annotations.Activate;

import org.osgi.service.component.annotations.Component;

import org.osgi.service.component.annotations.ConfigurationPolicy;

import org.osgi.service.metatype.annotations.AttributeDefinition;

import org.osgi.service.metatype.annotations.Designate;

import org.osgi.service.metatype.annotations.ObjectClassDefinition;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

@HealthCheckService(name = DiskSpaceCheck.HC_NAME)

@Component(configurationPolicy = ConfigurationPolicy.REQUIRE, immediate = true)

@Designate(ocd = DiskSpaceCheck.Config.class, factory = true)

public class DiskSpaceCheck implements HealthCheck {

private static final Logger LOG = LoggerFactory.getLogger(DiskSpaceCheck.class);

public static final String HC_NAME = "Disk Space";

public static final String HC_LABEL = "Health Check: " + HC_NAME;

@ObjectClassDefinition(name = HC_LABEL, description = "Checks the configured path(s) against the given thresholds")

public @interface Config {

@AttributeDefinition(name = "Name", description = "Name of this health check")

String hc_name() default HC_NAME;

@AttributeDefinition(name = "Tags", description = "List of tags for this health check, used to select subsets of health checks for execution e.g. by a composite health check.")

String[] hc_tags() default {};

@AttributeDefinition(name = "Disk used threshold for WARN", description = "in percent, if disk usage is over this limit the result is WARN")

long diskUsedThresholdWarn() default 90;

@AttributeDefinition(name = "Disk used threshold for CRITICAL", description = "in percent, if disk usage is over this limit the result is CRITICAL")

long diskUsedThresholdCritical() default 97;

@AttributeDefinition(name = "Paths to check for disk usage", description = "Paths that is checked for free space according the configured thresholds")

String[] diskPaths() default { "." };

@AttributeDefinition

String webconsole_configurationFactory_nameHint() default "{hc.name}: {diskPaths} used>{diskUsedThresholdWarn}% -> WARN used>{diskUsedThresholdCritical}% -> CRITICAL";

}

private long diskUsedThresholdWarn;

private long diskUsedThresholdCritical;

private String[] diskPaths;

@Activate

protected void activate(final Config config) {

diskUsedThresholdWarn = config.diskUsedThresholdWarn();

diskUsedThresholdCritical = config.diskUsedThresholdCritical();

diskPaths = config.diskPaths();

LOG.debug("Activated disk usage HC for path(s) {} diskUsedThresholdWarn={}% diskUsedThresholdCritical={}%", Arrays.asList(diskPaths),

diskUsedThresholdWarn, diskUsedThresholdCritical);

}

@Override

public Result execute() {

FormattingResultLog log = new FormattingResultLog();

for (String diskPath : diskPaths) {

File diskPathFile = new File(diskPath);

if (!diskPathFile.exists()) {

log.warn("Directory '{}' does not exist", diskPathFile);

continue;

} else if (!diskPathFile.isDirectory()) {

log.warn("Directory '{}' is not a directory", diskPathFile);

continue;

}

double total = diskPathFile.getTotalSpace();

double free = diskPathFile.getUsableSpace();

double usedPercentage = (total - free) / total * 100d;

String totalStr = bytesHumanReadable(total);

String freeStr = bytesHumanReadable(free);

String msg = String.format("Disk Usage %s: %.1f%% of %s used / %s free", diskPathFile.getAbsolutePath(),

usedPercentage,

totalStr, freeStr);

Result.Status status = usedPercentage > this.diskUsedThresholdCritical ? Result.Status.CRITICAL

: usedPercentage > this.diskUsedThresholdWarn ? Result.Status.WARN

: Result.Status.OK;

log.add(new ResultLog.Entry(status, msg));

}

return new Result(log);

}

}
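The threshold comparison inside execute() can be tried outside an OSGi container. The following is a minimal sketch, not part of the Felix health check: the class name DiskStatusSketch and its local Status enum are assumptions that stand in for Result.Status so the block compiles on its own.

```java
/** Stand-alone sketch of the threshold logic used in DiskSpaceCheck.execute(). */
final class DiskStatusSketch {

    /** Local stand-in for org.apache.felix.hc.api.Result.Status (assumption for this sketch). */
    enum Status { OK, WARN, CRITICAL }

    /** Returns the used share of a volume in percent, given total and free bytes. */
    static double usedPercentage(double totalBytes, double freeBytes) {
        return (totalBytes - freeBytes) / totalBytes * 100d;
    }

    /** Maps a usage percentage to a status with the same strict ">" comparisons as the health check. */
    static Status classify(double usedPercentage, long warnThreshold, long criticalThreshold) {
        if (usedPercentage > criticalThreshold) {
            return Status.CRITICAL;
        }
        if (usedPercentage > warnThreshold) {
            return Status.WARN;
        }
        return Status.OK;
    }

    public static void main(String[] args) {
        // Thresholds 90 and 97 are the defaults from the Config annotation above.
        System.out.println(classify(usedPercentage(200d, 50d), 90, 97));
    }
}
```

Because the comparisons are strict, a volume that is exactly at the WARN threshold still reports OK.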

Anmol Bhardwaj


Basic Guidelines: Content Fragment Models and GraphQL Queries for AEM Headless Implementation

Unlocking the potential of headless content delivery in Adobe Experience Manager (AEM) is a journey that begins with a solid foundation in Content Fragment Models (CFM) and GraphQL queries. In this blog, we’ll embark on this journey and explore the best practices and guidelines for designing CFMs and crafting GraphQL queries that empower your AEM headless implementation.

Guidelines for Content Fragment Models

- Use Organism, Molecule, and Atom (OMA) model for structuring Content Fragment Models. It provides a systematic way to organize and model content for greater flexibility and reusability.

- Organism: Organisms represent high-level content entities or content types. Each Organism corresponds to a specific type of content in your system, such as articles, products, or landing pages. Organisms have their own Content Fragment Models, defining the structure and properties of that content type. Example:

- Organism: “Article”

- Content Fragment Model: “Article Content Fragment Model”

- Molecule: Molecules are reusable content components that make up Organisms. They represent smaller, self-contained pieces of content that are combined to create Organisms. Molecules have their own Content Fragment Models to define their structure. Example:

- Molecule: “Author Block” (includes author name, bio, and profile picture)

- Content Fragment Model: “Author Block Content Fragment Model”

- Atom: Atoms are the smallest content elements or data types. They represent individual pieces of content that are used within Molecules and Organisms. Example:

- Atom: “Text” (represents a single text field) in CFM


- Relationships: Identify relationships between CFMs that reflect the relationships between different types of content on your pages. Also, GraphQL’s strength lies in its ability to navigate relationships efficiently. Ensure that your CFMs and GraphQL schema capture these relationships accurately. Use GraphQL’s nested queries to request related data when needed. For example, an “Author” CFM might have a relationship with an “Article” CFM to indicate authorship.

- Page Components Correspondence: Identify the components or sections within your web pages. Each of these components should have a corresponding CFM. For example, if your pages consist of article content, author details, and related articles, create CFMs for “Article”, “Author” and “Related Articles” to match these page components.

- Hierarchy and Nesting: Consider the hierarchy of content within pages. Some pages may have nested content structures, such as sections within articles or tabs within product descriptions. Create CFMs that allow for nesting of content fragments, ensuring you can represent these hierarchies accurately.

- Manage the number of content fragment models effectively: When numerous content fragments share the same model, GraphQL list queries can become resource-intensive. This is because all fragments linked to a shared model are loaded into memory, consuming time and memory resources. Filtering can only be applied after loading the complete result set into memory, which may lead to performance issues, even with small result sets. The key is to control the number of content fragment models to minimize resource consumption and enhance query performance.

- Multifield in Content Fragment Models: Adobe’s out-of-the-box (OOTB) offerings include multifields for fundamental data types such as text, content reference, and fragment reference. However, in cases where more intricate composite multifields are required, each set should be established as an individual content fragment. Subsequently, these content fragments can be associated with a parent fragment. GraphQL queries can then retrieve data from these nested content fragments. For an example, refer to link

- Content hierarchy for GraphQL optimization: Establishing a path-based configuration for content fragments is essential to enhance the performance of GraphQL queries. This approach enables queries to efficiently navigate through folder and content fragment hierarchies, thereby retrieving information from smaller data sets.

- Dedicated tenant/config folders: In the scenario of large organizations encompassing multiple business units, each with its unique content fragment models, it’s advisable to strategize the creation of content fragment models within dedicated /conf folders. These /conf folders can subsequently be customized for specific /content/dam folders. The “Allowed Content Fragment Models” property can be leveraged to restrict the usage of specific types of CFMs within a folder.

- Field Naming: Opt for transparent and uniform field names across both CFMs and GraphQL types. Select names that provide a clear indication of the field’s function, simplifying comprehension for both developers and content authors when navigating the content structure.

- Comments: Incorporate detailed descriptions for every field found in CFMs and GraphQL types. These comments should offer valuable context and elucidate the purpose of each field, aiding developers and content authors in comprehending how each property is intended to be utilized and its significance in the overall structure.

- Documentation: Ensure the presence of thorough documentation for both CFMs and GraphQL schemas. This documentation should encompass the field’s purpose, the expected values it should contain, and instructions on its utilization. Additionally, provide clear guidelines regarding the appropriate circumstances and methods for using specific fields to maintain uniformity. Any data relationships or dependencies between fields should also be documented to offer guidance to developers and content authors.

- Contemplate the option of integrating CFMs into your codebase, limiting editing access to specific administrators if necessary. This precautionary measure helps mitigate the risk of unintended modifications by unauthorized users, safeguarding your content structure from inadvertent alterations.

- In the context of AEM Sites, it is advisable to prioritize the utilization of QueryBuilder and the Content Fragment API for rendering results. This approach enables your Sling models to effectively process and transform the raw content for the user interface.

- Consider employing Experience Fragments for content that marketers frequently edit, as they provide the convenience of a WYSIWYG (What You See Is What You Get) editor.

Guidelines for GraphQL queries

The following are general guidelines for creating GraphQL queries. For syntax-based suggestions, please refer to the links in the References section.

- Query Complexity: Consider the complexity of GraphQL queries that content authors and developers will need to create. Ensure that the schema allows for efficient querying of content while avoiding overly complex queries that could impact performance.

- Pagination: Implement offset- or cursor-based pagination mechanisms within your GraphQL schema. This ensures that queries return manageable amounts of data. Prefer cursor-based pagination when dealing with extensive datasets, as it prevents premature processing.

# Offset-based pagination

query {

articleList(offset: 5, limit: 5) {

items {

authorFragment {

lastName

firstName

}

}

}

}

# Cursor-based pagination

query {

adventurePaginated(first: 5, after: "ODg1MmMyMmEtZTAzMy00MTNjLThiMzMtZGQyMzY5ZTNjN2M1") {

edges {

cursor

node {

title

}

}

pageInfo {

endCursor

hasNextPage

}

}

}
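On the client side, cursor-based pagination usually turns into a loop that keeps requesting pages until hasNextPage is false. The following is a rough sketch of that loop only. The transport is left abstract, and CursorPagingSketch, Page, fetchAll and fakeEndpoint are hypothetical names introduced for illustration, with an in-memory list standing in for the GraphQL endpoint.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

/** Sketch of a client-side loop over cursor-based pages; no real GraphQL call is made. */
final class CursorPagingSketch {

    /** One page of results, mirroring the edges/pageInfo shape of the paginated query. */
    static final class Page {
        final List<String> titles;
        final String endCursor;
        final boolean hasNextPage;

        Page(List<String> titles, String endCursor, boolean hasNextPage) {
            this.titles = titles;
            this.endCursor = endCursor;
            this.hasNextPage = hasNextPage;
        }
    }

    /**
     * Collects all titles by requesting pages of pageSize items until hasNextPage is false.
     * fetchPage is a hypothetical callback (first, after) -> Page; after is null for the first page.
     */
    static List<String> fetchAll(BiFunction<Integer, String, Page> fetchPage, int pageSize) {
        List<String> all = new ArrayList<>();
        String cursor = null;
        Page page;
        do {
            page = fetchPage.apply(pageSize, cursor);
            all.addAll(page.titles);
            cursor = page.endCursor;
        } while (page.hasNextPage);
        return all;
    }

    /** In-memory stand-in for the endpoint: the cursor is simply the index of the last returned item. */
    static Page fakeEndpoint(List<String> data, int first, String after) {
        int start = after == null ? 0 : Integer.parseInt(after) + 1;
        int end = Math.min(start + first, data.size());
        List<String> slice = new ArrayList<>(data.subList(start, end));
        String endCursor = end > start ? String.valueOf(end - 1) : after;
        return new Page(slice, endCursor, end < data.size());
    }

    public static void main(String[] args) {
        List<String> data = List.of("a", "b", "c", "d", "e", "f", "g");
        System.out.println(fetchAll((first, after) -> fakeEndpoint(data, first, after), 3));
    }
}
```

In a real client the callback would execute the paginated query with first and after as arguments; the cursor should be treated as an opaque string returned by the server.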

- Security and Access Control: Implement security measures to control who can access which content from GraphQL queries. Ensure that sensitive data is protected and that only authorized users can execute certain queries or mutations.

- Query only the data you need, where you need it: In GraphQL, clients can specify exactly which fields of a particular type they want to retrieve, eliminating over-fetching and under-fetching of data. This approach optimizes performance, reduces server load, enhances security, and ensures efficient data retrieval, making GraphQL a powerful choice for modern application development.

- Consider utilizing persisted queries as they offer optimization for network communication and query execution. Rather than transmitting the entire query text in every request, you can send a unique persisted-label that corresponds to a pre-stored query on the server. This approach takes advantage of server-side caching, allowing you to make GET requests using the persisted-label of the query, which enhances performance and reduces data transfer.

- Sort on top level fields: Sorting can be optimized when it involves top-level fields exclusively. When sorting criteria include fields located within nested fragments, it necessitates loading all fragments associated with the top-level model into memory, which negatively impacts performance. It’s important to note that even sorting on top-level fields may have a minor impact on performance. To optimize GraphQL queries, use the AND operator to combine filter expressions on top-level and nested fragment fields.

- Hybrid filtering: Explore the option of implementing hybrid filtering in GraphQL, which combines JCR filtering with AEM filtering. Hybrid filtering initially applies a JCR filter as a query constraint before the result set is loaded into memory for AEM filtering. This approach helps minimize the size of the result set loaded into memory, as the JCR filter efficiently eliminates unnecessary results beforehand. The JCR filter currently has a few limitations: it does not support, for example, case-insensitive comparisons, null checks, or "contains not" expressions.

- Use dynamic filters: Dynamic filters in GraphQL offer flexibility and performance benefits compared to variables. They allow you to construct and apply filters dynamically during runtime, tailoring queries to specific conditions without the need to define multiple query variations with variables. More details in video (time 6:29)

# Query with variables

query getArticleBySlug($slug: String!) {

articleList(

filter: {slug: {_expressions: [{value: $slug}]}}

) {

items {

_path

title

slug

}

}

}

Query variables:

{"slug": "alaskan-adventures"}

# Query with dynamic filter

query getArticleBySlug($filter: ArticleModelFilter!) {

articleList(filter: $filter) {

items {

_path

title

slug

}

}

}

Query variables:

{

"filter": {

"slug": {

"_expressions": [{"value": "alaskan-adventures"}]

}

}

}

- Avoid filtering on multiline text fields

- Avoid filtering on virtual fields

- Content hierarchy for GraphQL optimization: Implement a filter on the _path field of the top-level fragment to achieve this optimization.

- Enable GraphQL pre-caching and configure the Dispatcher/CDN cache for persisted queries

- If Dynamic Media is enabled, employ web-optimized image delivery.
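To make the persisted-query guideline more concrete, here is a tentative sketch of how a client might build the GET URL for a persisted query. It assumes the /graphql/execute.json/<configuration>/<query name> URL shape with variables appended as ;name=value and percent-encoded, which should be verified against the documentation for your AEM version. The host, the configuration name "my-project" and the query name "article-by-slug" are made up for illustration.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: builds the GET URL for a persisted query; URL shape and names are assumptions. */
final class PersistedQueryUrlSketch {

    /** Percent-encodes text; URLEncoder writes spaces as "+", so those are rewritten to "%20". */
    static String encode(String raw) {
        return URLEncoder.encode(raw, StandardCharsets.UTF_8).replace("+", "%20");
    }

    /** Appends each variable as ;name=value and encodes the whole variable suffix. */
    static String buildUrl(String host, String configName, String queryName, Map<String, String> variables) {
        StringBuilder suffix = new StringBuilder();
        for (Map.Entry<String, String> variable : variables.entrySet()) {
            suffix.append(';').append(variable.getKey()).append('=').append(variable.getValue());
        }
        return host + "/graphql/execute.json/" + configName + "/" + queryName + encode(suffix.toString());
    }

    public static void main(String[] args) {
        Map<String, String> variables = new LinkedHashMap<>();
        variables.put("slug", "alaskan-adventures");
        System.out.println(buildUrl("https://publish.example.com", "my-project", "article-by-slug", variables));
    }
}
```

Because the request is a plain GET with a stable URL per variable combination, the Dispatcher and CDN can cache the response like any other resource.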

Aanchal Sikka


Every bit in one place. Time for me to try everything you explained so nicely in an organized manner 😊👌👌👍


AEM Performance Optimization: Best Practices for Speed and Scalability

- Some facts:

- Creating 1 JCRNodeResource: 15-20ns (admin user); 30-40ns (checks ACL etc, nested setup)

- Accessing JCRResource takes a similar amount of time.

- Creating ResourceResolver: 1 ms

- Reduce repo access

- Only open resource resolver where necessary

- Try to reduce JCR functions and instead use Sling functions for high-level operations.

- Don't try to resolve a resource multiple times.

- Example: Don't convert node to resource then add path (like "/jcr:content") and then convert to node again.

- Remove usage of @Optional and @Inject, as they check all injectors (in order) until one succeeds.

- TRACE on org.apache.sling.jcr.AccessLogger.operation: logs stack trace when creating JCRNodeResource

- TRACE on org.apache.sling.jcr.AccessLogger.statistics: logs number of created JCRNodeResource via each resource resolver.

/cached {

/glob "*"

/type "allow"

}

/news {

/glob "/content/news/*"

/type "deny"

}

<flush>

<rules>

<rule>

<glob>/content/news/*</glob>

<invalidate>true</invalidate>

</rule>

</rules>

</flush>

<!-- Example AJAX request for dynamic content -->

<script>

$.ajax({

url: "/get-pricing",

method: "GET",

success: function(data) {

// Update pricing on the page

}

});

</script>

<picture>

<source srcset="/path/to/image-large.jpg" media="(min-width: 1024px)">

<source srcset="/path/to/image-medium.jpg" media="(min-width: 768px)">

<img src="/path/to/image-small.jpg" alt="Description">

</picture>

<img srcset="/path/to/image-small.jpg 320w,

/path/to/image-medium.jpg 768w,

/path/to/image-large.jpg 1024w"

sizes="(max-width: 320px) 280px,

(max-width: 768px) 680px,

940px"

src="/path/to/image-small.jpg"

alt="Description">

<?xml version="1.0" encoding="UTF-8"?>

<index oak:indexDefinition="{Name}CustomIndex" xmlns:oak="http://jackrabbit.apache.org/oak/query/1.0">

<indexRules>

<include>

<pattern>/content/myapp/.*</pattern>

</include>

<include>

<pattern>/content/dam/myassets/.*</pattern>

</include>

</indexRules>

</index>

<!-- Example code to define a custom rendition profile -->

<jcr:content

jcr:primaryType="nt:unstructured"

sling:resourceType="dam/cfm/components/renditionprofile">

<jcr:title>Web Renditions</jcr:title>

<renditionDefinitions>

<thumbnail

jcr:primaryType="nt:unstructured"

sling:resourceType="dam/cfm/components/renditiondefinition"

width="{Long}150"

height="{Long}150"

format="jpeg"/>

</renditionDefinitions>

</jcr:content>

// assume that resource is an instance of some subclass of AbstractResource

ModelClass object1 = resource.adaptTo(ModelClass.class); // creates new instance of ModelClass

ModelClass object2 = resource.adaptTo(ModelClass.class); // SlingAdaptable returns the cached instance

assert object1 == object2;

Sling Models can also cache an adaptation result when cache = true is set on the @Model annotation. When cache = true is specified, the adaptation result is cached regardless of how the adaptation is done:

@Model(adaptables = SlingHttpServletRequest.class, cache = true)

public class ModelClass {}

...

// assume that request is some SlingHttpServletRequest object

ModelClass object1 = request.adaptTo(ModelClass.class); // creates new instance of ModelClass

ModelClass object2 = modelFactory.createModel(request, ModelClass.class); // Sling Models returns the cached instance

assert object1 == object2;

// Example scheduled job with CRON expression

@Scheduled(resourceType = "myproject/scheduled-job", cronExpression = "0 0 3 * * ?")

public class MyScheduledJob implements Runnable {

// ...

}

// Scheduled job to generate daily traffic report

@Scheduled(cronExpression = "0 0 3 * * ?")

public class TrafficReportJob {

public void execute() {

// Generate and send the report

}

}

List<User> users = new ArrayList<>(); // ... (populate the list)

List<User> filteredUsers = users.stream()

.filter(user -> user.getAge() >= 18)

.collect(Collectors.toList());

npm install uglify-js -g

uglifyjs input.js -o output.min.js

npm install cssnano -g

cssnano input.css output.min.css

// webpack.config.js

const UglifyJsPlugin = require('uglifyjs-webpack-plugin');

module.exports = {

// ...

optimization: {

minimizer: [new UglifyJsPlugin()],

},

};

<!-- Async loading -->

<script src="third-party-script.js" async></script>

<!-- Defer loading -->

<script src="third-party-script.js" defer></script>

<script src="lazy-third-party-script.js" loading="lazy"></script>


@Anmol_Bhardwaj Kudos! 👏🏻

Very well written and organized article for best practices.

One thing surprised me 11K requests fact, is it really 11K requests for a WKND page? 😀


Hi @iamnjain ,

Thanks.

One thing surprised me 11K requests fact, is it really 11K requests for a WKND page?

Yeah, that too for the landing page.

If you want to check how many requests your page is making to the repository you can enable :

TRACE on org.apache.sling.jcr.AccessLogger.operation & org.apache.sling.jcr.AccessLogger.

Note: The size of the log file will grow very quickly, so make sure to turn it off when done.


mvn clean install -PautoInstallSinglePackage

npm i --save lodash.get@^4.4.2 lodash.set@^4.3.2

npm i --save apollo-cache-persist@^0.1.1

npm i --save redux-thunk@~2.3.0

npm i --save @adobe/apollo-link-mutation-queue@~1.1.0

npm i --save @magento/peregrine@~12.5.0

npm i --save @adobe/aem-core-cif-react-components --force

npm i --save-dev @magento/babel-preset-peregrine@~1.2.1

npm i --save @adobe/aem-core-cif-experience-platform-connector --force

mvn clean install -PautoInstallSinglePackage


Building Robust AEM Integrations

In the current digital environment, the integration of third-party applications with Adobe Experience Manager (AEM) is pivotal for building resilient digital experiences. This blog serves as a guide, highlighting crucial elements to bear in mind when undertaking AEM integrations. It is accompanied by practical examples from real-world AEM implementations, offering valuable insights. Without further ado, let’s dive in:

Generic API Framework for integrations

When it comes to integrating AEM with various APIs, having a structured approach can significantly enhance efficiency, consistency, and reliability. One powerful strategy for achieving these goals is to create a generic API framework. This framework serves as the backbone of your AEM integrations, offering a host of benefits, including reusability, consistency, streamlined maintenance, and scalability.

Why Create a Generic API Framework for AEM Integrations?

- Reusability: A core advantage of a generic API framework is its reusability. Rather than starting from scratch with each new integration, you can leverage the framework as a proven template. This approach saves development time and effort, promoting efficiency.

- Consistency: A standardized framework ensures that all your AEM integrations adhere to the same conventions and best practices. This consistency simplifies development, troubleshooting, and maintenance, making your AEM applications more robust and easier to manage.

- Maintenance and Updates: With a generic API framework in place, updates and improvements can be applied to the framework itself. This benefits all integrated services simultaneously, reducing the need to address issues individually across multiple integrations. This leads to more efficient maintenance and enhanced performance.

- Scalability: As your AEM application expands and demands more integrations, the generic framework can easily accommodate new services and endpoints. You won’t need to start from scratch or adapt to different integration methodologies each time you add a new component. The framework can seamlessly scale to meet your growing integration needs.

Key Components of an Integration Framework:

1. Best Practices:

The framework should encompass industry best practices, ensuring that integrations are built to a high standard from the start. These best practices might include data validation, error handling, and performance optimization techniques.

2. Retry Mechanisms:

Introduce a mechanism for retries within the framework. It’s not uncommon for API calls to experience temporary failures due to network disruptions or service unavailability. The incorporation of automatic retry mechanisms can significantly bolster the reliability of your integrations. This can be accomplished by leveraging tools such as Sling Jobs.

For instance, consider an AEM project that integrates with a payment gateway. To address temporary network issues during payment transaction processing, you can implement a retry logic. If a payment encounters a failure, the system can automatically attempt the transaction multiple times before notifying the user of an error.

For guidance on implementing Sling Jobs with built-in retry mechanisms, please refer to the resource Enhancing Efficiency and Reliability by Sling jobs
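Independent of Sling Jobs, the retry idea itself can be sketched in plain Java. The block below is only an illustration under stated assumptions: RetrySketch and callWithRetry are hypothetical names, the delay doubles after every failed attempt, and in an AEM project the Sling Jobs retry configuration would normally take this role.

```java
import java.util.concurrent.Callable;

/** Sketch of a retry helper with exponential backoff; not a Sling Jobs replacement. */
final class RetrySketch {

    /**
     * Runs the call up to maxAttempts times. After each failure except the last,
     * it sleeps for the current delay and then doubles that delay.
     * The last exception is rethrown if every attempt fails.
     */
    static <T> T callWithRetry(Callable<T> call, int maxAttempts, long initialDelayMillis) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        long delay = initialDelayMillis;
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(delay);
                    delay *= 2;
                }
            }
        }
        throw last;
    }

    public static void main(String[] args) throws Exception {
        int[] attempts = {0};
        // Simulated payment call that fails twice before succeeding.
        String result = callWithRetry(() -> {
            if (++attempts[0] < 3) {
                throw new java.io.IOException("temporary network issue");
            }
            return "payment accepted";
        }, 5, 10L);
        System.out.println(result + " after " + attempts[0] + " attempts");
    }
}
```

Retries are only safe for operations that are idempotent or protected by an idempotency key; otherwise a payment could be submitted twice.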

3. Circuit Breaker Pattern:

The Circuit Breaker pattern is a design principle employed for managing network and service failures within distributed systems. In AEM, you can apply the Circuit Breaker pattern to enhance the robustness and stability of your applications when interfacing with external services.

It caters to several specific needs:

- Trigger a circuit interruption if the failure rate exceeds 10% within a minute.

- After the circuit breaks, the system should periodically verify the recovery of the external service API through a background process.

- Ensure that users are shielded from experiencing sluggish response times.

- Provide a user-friendly message in the event of any service disruptions.

Visit Latency and fault tolerance in Adobe AEM using HystriX for details
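To illustrate the requirements listed above, here is a hand-rolled sketch of a circuit breaker. It is not Hystrix and not the implementation from the linked article. The 10% failure rate and one-minute window come from the list; the class name CircuitBreakerSketch, the minimumCalls and coolDownMillis parameters, and the injected clock are assumptions. As a simplification, recovery is probed by a single trial call after the cool-down rather than by a background process.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Minimal circuit breaker sketch; synchronized for simplicity, which serializes backend calls. */
final class CircuitBreakerSketch {
    private final double failureRateThreshold; // e.g. 0.10 for 10%
    private final int minimumCalls;            // calls needed before the rate is evaluated (assumption)
    private final long windowMillis;           // e.g. 60_000 for one minute
    private final long coolDownMillis;         // how long the circuit stays open (assumption)
    private final LongSupplier clock;          // injected so the sketch can be tested without waiting
    private final Deque<long[]> outcomes = new ArrayDeque<>(); // entries: {timestamp, 1 if failed else 0}
    private long openedAt = -1L;               // -1 means the circuit is closed

    CircuitBreakerSketch(double failureRateThreshold, int minimumCalls, long windowMillis,
                         long coolDownMillis, LongSupplier clock) {
        this.failureRateThreshold = failureRateThreshold;
        this.minimumCalls = minimumCalls;
        this.windowMillis = windowMillis;
        this.coolDownMillis = coolDownMillis;
        this.clock = clock;
    }

    /** True while calls are being short-circuited to the fallback. */
    synchronized boolean isOpen() {
        return openedAt >= 0 && clock.getAsLong() - openedAt < coolDownMillis;
    }

    /** Calls the backend unless the circuit is open; returns the fallback on failure or when open. */
    synchronized <T> T call(Supplier<T> backend, T fallback) {
        long now = clock.getAsLong();
        boolean tripped = openedAt >= 0;
        if (tripped && now - openedAt < coolDownMillis) {
            return fallback; // fail fast, the backend is not contacted
        }
        try {
            T result = backend.get();
            if (tripped) { // trial call succeeded: close the circuit and start a fresh window
                openedAt = -1L;
                outcomes.clear();
            }
            record(now, false);
            return result;
        } catch (RuntimeException e) {
            record(now, true);
            if (tripped || failureRate() > failureRateThreshold) {
                openedAt = now; // open (or re-open) the circuit
            }
            return fallback;
        }
    }

    /** Stores the outcome and drops entries that fell out of the sliding window. */
    private void record(long now, boolean failed) {
        outcomes.addLast(new long[] {now, failed ? 1L : 0L});
        while (!outcomes.isEmpty() && now - outcomes.peekFirst()[0] > windowMillis) {
            outcomes.removeFirst();
        }
    }

    private double failureRate() {
        if (outcomes.size() < minimumCalls) {
            return 0d;
        }
        long failures = outcomes.stream().filter(o -> o[1] == 1L).count();
        return (double) failures / outcomes.size();
    }
}
```

A caller would pass the user-friendly message (or cached content) as the fallback, for example `breaker.call(() -> pricingClient.fetch(), "Pricing is temporarily unavailable")`, where pricingClient is a hypothetical service.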

4. Security Measures:

Incorporate authentication and authorization features into your framework to ensure the security of your data. This may involve integration with identity providers, implementing API keys, setting up OAuth authentication, and utilizing the Cross-Origin Resource Sharing (CORS) mechanism.

For instance, when securing content fetched via GraphQL queries, consider token-based authentication. For further details, please refer to the resource titled Securing content for GraphQL queries via Closed user groups (CUG)
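As a small illustration of token-based authentication on the calling side, the sketch below attaches a bearer token to a GET request using the JDK's java.net.http API. It assumes the token has already been obtained elsewhere; TokenRequestSketch, the example URL and the five-second timeout are hypothetical, and how the receiving system validates the token is outside the scope of the sketch.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

/** Sketch: builds an authenticated GET request; token acquisition is out of scope. */
final class TokenRequestSketch {

    /** Returns a GET request carrying the access token in the Authorization header. */
    static HttpRequest authenticatedGet(String url, String accessToken) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(5))
                .header("Authorization", "Bearer " + accessToken)
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = authenticatedGet("https://publish.example.com/graphql/execute.json/my-project/articles", "example-token");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

Tokens should come from a secret store or an OSGi configuration rather than being hard-coded, and they should never be written to logs.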

5. Logging

Robust logging and effective error handling are fundamental for debugging and monitoring. Comprehensive logging records significant events and errors, which streamlines troubleshooting and maintenance. To achieve this:

- Comprehensive Monitoring and Logging: Implement thorough monitoring and logging for your integration to detect issues, track performance, and simplify debugging. It is advisable to categorize logging into three logical sets.

- AEM Logging: This pertains to logging at the AEM application level.

- Apache HTTPD Web Server/Dispatcher Logging: This encompasses logging related to the web server and Dispatcher on the Publish tier.

- CDN Logging: This feature, although gradually introduced, handles logging at the CDN level.

- Selective Logging: Log only essential information in a format that is easy to comprehend. Properly employ log levels to prevent overloading the system with errors and warnings in a production environment. When detailed logs are necessary, ensure the ability to enable lower log levels like ‘debug’ and subsequently disable them.

Utilize AEM’s built-in logging capabilities to log API requests, responses, and errors. Consider incorporating Splunk, a versatile platform for log and data analysis, to centralize log management, enable real-time monitoring, conduct advanced search and analysis, visualize data, and correlate events. Splunk’s scalability, integration capabilities, customization options, and active user community make it an invaluable tool for streamlining log management, gaining insights, and enhancing security, particularly in the context of operations and compliance.

6. Payload Flexibility:

Payload flexibility in the context of building a framework for integrations refers to the framework’s capacity to effectively manage varying types and structures of data payloads. A data payload comprises the factual information or content transmitted between systems, and it may exhibit notable differences in format and arrangement.

These distinctions in structure can be illustrated with two JSON examples sourced from the same data source but intended for different end systems:

Example 1: JSON Payload for System A

{

"orderID": "12345",

"customerName": "John Doe",

"totalAmount": 100.00,

"shippingAddress": "123 Main Street"

}

Example 2: JSON Payload for System B

{

"transactionID": "54321",

"product": "Widget",

"quantity": 5,

"unitPrice": 20.00,

"customerID": "Cust123"

}

Both examples originate from the same data source but require distinct sets of information for different target systems. Payload flexibility allows the framework to adapt seamlessly, enabling efficient integration with various endpoints that necessitate dissimilar data structures.
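One way a framework might achieve this is to register one mapper per target system and shape the payload at send time. The sketch below is an assumption-laden illustration: PayloadMappingSketch, the mapper registry, and the field names of the source record (orderId, transactionId, customerId and so on) are hypothetical, and plain maps stand in for a JSON library.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

/** Sketch: one source record, one mapper per target system. */
final class PayloadMappingSketch {

    /** Registry of target system name to mapper (names are hypothetical). */
    static final Map<String, Function<Map<String, Object>, Map<String, Object>>> MAPPERS = new LinkedHashMap<>();

    static {
        MAPPERS.put("systemA", PayloadMappingSketch::toSystemA);
        MAPPERS.put("systemB", PayloadMappingSketch::toSystemB);
    }

    /** System A wants an order summary; the total is derived from quantity and unit price. */
    static Map<String, Object> toSystemA(Map<String, Object> order) {
        Map<String, Object> payload = new LinkedHashMap<>();
        payload.put("orderID", order.get("orderId"));
        payload.put("customerName", order.get("customerName"));
        double total = ((Number) order.get("quantity")).intValue() * ((Number) order.get("unitPrice")).doubleValue();
        payload.put("totalAmount", total);
        payload.put("shippingAddress", order.get("shippingAddress"));
        return payload;
    }

    /** System B wants line-item details keyed by transaction and customer ID. */
    static Map<String, Object> toSystemB(Map<String, Object> order) {
        Map<String, Object> payload = new LinkedHashMap<>();
        payload.put("transactionID", order.get("transactionId"));
        payload.put("product", order.get("product"));
        payload.put("quantity", order.get("quantity"));
        payload.put("unitPrice", order.get("unitPrice"));
        payload.put("customerID", order.get("customerId"));
        return payload;
    }

    /** Looks up the mapper for the target system and applies it to the source record. */
    static Map<String, Object> payloadFor(String target, Map<String, Object> order) {
        Function<Map<String, Object>, Map<String, Object>> mapper = MAPPERS.get(target);
        if (mapper == null) {
            throw new IllegalArgumentException("No mapper registered for " + target);
        }
        return mapper.apply(order);
    }
}
```

Adding a third target system then means registering one more mapper, without touching the code that sends the payload.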

7. Data Validation and Transformation:

When integrating with a third-party app in Adobe Experience Manager (AEM), it’s crucial to ensure that data validation and transformation are performed correctly. This process helps maintain data integrity and prevents errors when sending or receiving data. Let’s consider an example where we are integrating with an e-commerce platform. We’ll validate and transform product data to ensure it aligns with the expected format and data types of the platform’s API, thus mitigating data-related issues.

private String transformProductData(String rawData) {

try {

// Parse the raw JSON data

JSONObject productData = new JSONObject(rawData);

// Validate and transform the data

if (isValidProductData(productData)) {

// Extract and transform the necessary fields

String productName = productData.getString("name");

double productPrice = productData.getDouble("price");

String productDescription = productData.getString("description");

// Complex data validation and transformation

productDescription = sanitizeDescription(productDescription);

// Create a new JSON object with the transformed data

JSONObject transformedData = new JSONObject();

transformedData.put("productName", productName);

transformedData.put("productPrice", productPrice);

transformedData.put("productDescription", productDescription);

// Return the transformed data as a JSON string

return transformedData.toString();

} else {

// If the data is not valid, consider it an error

return null;

}

} catch (JSONException e) {

// Handle any JSON parsing errors here

e.printStackTrace();

return null; // Return null to indicate a transformation error

}

}

private boolean isValidProductData(JSONObject productData) {

// Perform more complex validation here

return productData.has("name") &&

productData.has("price") &&

productData.has("description") &&

productData.has("images") &&

productData.getJSONArray("images").length() > 0;

}

private String sanitizeDescription(String description) {

// Implement data sanitization logic here, e.g., remove HTML tags

return description.replaceAll("<[^>]*>", "");

}- We’ve outlined the importance of data validation and transformation when dealing with a third-party e-commerce platform in AEM.

- The code demonstrates how to parse, validate, and transform the product data from the platform.

- It includes validation, such as checking for required fields and for at least one product image.

- Additionally, it features data transformation, such as sanitizing product descriptions. Selecting the main product image would be a similar step, though the code above does not implement it.

8. Client Call Improvements:

A common source of availability and performance problems is custom code that calls third-party systems over HTTP. The risk is greatest when those calls run synchronously within an AEM request: any backend latency adds directly to AEM’s response time, and on AEMaaCS it can lead to service outages if blocking outgoing requests consume the entire thread pool dedicated to handling incoming requests.

- Reuse the HttpClient: Create a single HttpClient instance and reuse it, closing it properly when it is no longer needed, to prevent connection issues and reduce latency.

- Set Short Timeouts: Implement aggressive connection and read timeouts to optimize performance and prevent Jetty thread pool exhaustion.

- Implement a Degraded Mode: Prepare your AEM application to gracefully handle slow or unresponsive backends, preventing application downtime and ensuring a smooth user experience.

For details on improving HTTP client requests, refer to: 3 rules how to use an HttpClient in AEM
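The three rules above can be sketched in plain Java. This is a minimal illustration, not the approach from the linked article: it uses the JDK's `java.net.http.HttpClient` as a stand-in for whatever client the project uses (AEM projects often use Apache HttpClient), and the class name `BackendClient`, the method `fetchOrFallback`, and the timeout values are assumptions chosen for the example.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper; names and timeout values are illustrative only.
final class BackendClient {

    // Rule 1: one shared client, so connections are pooled and reused.
    private static final HttpClient CLIENT = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(2)) // Rule 2: short connect timeout
            .build();

    // Rule 3: degraded mode. Any failure yields the caller-supplied fallback
    // instead of an exception that would break page rendering.
    static String fetchOrFallback(String url, String fallback) {
        try {
            HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                    .timeout(Duration.ofSeconds(3)) // Rule 2: short response timeout
                    .GET()
                    .build();
            HttpResponse<String> response =
                    CLIENT.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200 ? response.body() : fallback;
        } catch (IOException | IllegalArgumentException e) {
            // Timeouts and connection errors are IOExceptions;
            // a malformed or unsupported URL is an IllegalArgumentException.
            return fallback;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            return fallback;
        }
    }
}
```

A caller would pass cached or empty content as the fallback, for example `BackendClient.fetchOrFallback(url, "[]")`, so the component can still render when the backend is slow or down.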

9. Asynchronous Processing:

In AEM projects, synchronous interactions with third-party services can create performance bottlenecks and delays for users. With asynchronous processing, tasks such as retrieving product information run in the background without blocking AEM request threads, so users get quicker responses while the heavy lifting happens behind the scenes. Sling Jobs provide a reliable mechanism for executing such tasks: jobs are persisted and retried on failure, so a temporary backend problem does not cause the work to be lost. For details on how to implement Sling Jobs, refer to the resource: Enhancing Efficiency and Reliability by Sling jobs
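To illustrate the idea of moving slow work off the request thread, here is a minimal sketch using only the JDK. It is not the Sling Jobs API: it swaps in a plain `ExecutorService` and `CompletableFuture`, and therefore lacks the persistence and retry behaviour that Sling Jobs add. The class `AsyncProductSync` and the simulated `slowBackendLookup` are hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical stand-in for a job-based integration; not the Sling Jobs API.
final class AsyncProductSync {

    // Small dedicated pool so backend calls never occupy request threads.
    private final ExecutorService worker = Executors.newFixedThreadPool(2);

    // Returns immediately; the lookup runs on the worker pool.
    CompletableFuture<String> submit(String sku) {
        return CompletableFuture.supplyAsync(() -> slowBackendLookup(sku), worker);
    }

    // Simulates a slow third-party call.
    private String slowBackendLookup(String sku) {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "product:" + sku;
    }

    // Stops accepting new work; already submitted tasks still complete.
    void shutdown() {
        worker.shutdown();
    }
}
```

The caller can respond to the user right after `submit(...)` and consume the result later, for example with `thenAccept(...)` on the returned future.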

10. Avoid Long-Running Sessions in AEM Repository

During integrations such as translation imports, a common issue in the AEM repository is the error Caused by: javax.jcr.InvalidItemStateException: OakState0001. It arises when a long-running session conflicts with concurrent changes happening in the repository (such as translation imports). When a session’s save() operation fails, the session’s transient space (the in-memory area where pending changes are stored) remains polluted, leading to subsequent failures. To mitigate this problem, two strategies can be employed:

1. Avoid long-running sessions by using shorter-lived sessions. This is the preferable and easier approach in most cases, and it also eliminates issues related to shared sessions.

2. Call session.refresh(true) before making changes. This refreshes the session to the HEAD state while keeping pending changes, reducing the likelihood of exceptions. If a RepositoryException occurs, explicitly clean the transient space using session.refresh(false); the pending changes are lost, but subsequent session.save() operations can succeed. This approach is suitable when creating new sessions is not feasible.
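The second strategy can be sketched as follows. To keep the example compilable without the JCR API on the classpath, `MinimalSession` and the nested `RepositoryException` are hypothetical stand-ins that mirror `javax.jcr.Session.refresh(boolean)`, `javax.jcr.Session.save()` and `javax.jcr.RepositoryException`; in a real project the actual JCR types would be used instead.

```java
// Sketch of the refresh-before-write pattern, using stand-in types.
final class SessionSaveSketch {

    /** Hypothetical stand-in for javax.jcr.RepositoryException. */
    static class RepositoryException extends Exception {
        RepositoryException(String message) {
            super(message);
        }
    }

    /** Hypothetical stand-in for the two javax.jcr.Session methods used here. */
    interface MinimalSession {
        void refresh(boolean keepChanges) throws RepositoryException;
        void save() throws RepositoryException;
    }

    /** The repository modification to perform. */
    interface Change {
        void apply(MinimalSession session) throws RepositoryException;
    }

    /** Returns true if the change was saved, false if it had to be discarded. */
    static boolean applyAndSave(MinimalSession session, Change change) {
        try {
            session.refresh(true);   // catch up with HEAD, keep pending changes
            change.apply(session);
            session.save();
            return true;
        } catch (RepositoryException e) {
            try {
                session.refresh(false); // drop transient changes so later saves can succeed
            } catch (RepositoryException ignored) {
                // nothing more can be done with this session
            }
            return false;
        }
    }
}
```

Returning a boolean lets the caller decide whether to retry the change or report the failure; the sketch itself does not retry.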

11. Error Handling and Notifications:

Implement error-handling mechanisms, including sending notifications to relevant stakeholders when critical errors occur. Example: If integration with a payment gateway fails, notify the finance team immediately to address payment processing issues promptly.
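One possible shape for this, as a hedged sketch: wrap the critical call, and on failure notify the responsible team and return a fallback. `CriticalCallGuard` and its `Notifier` interface are hypothetical; in practice the notifier might send an email or post to an alerting system, which is outside the scope of this example.

```java
import java.util.function.Supplier;

// Hypothetical helper; the notification channel is left to the Notifier implementation.
final class CriticalCallGuard {

    /** Hypothetical notification hook (email, chat, paging, ...). */
    interface Notifier {
        void notify(String team, String message);
    }

    // Runs the call; on failure, alerts the given team and returns the fallback.
    static <T> T runOrNotify(String team, String operation,
                             Supplier<T> call, T fallback, Notifier notifier) {
        try {
            return call.get();
        } catch (RuntimeException e) {
            notifier.notify(team, operation + " failed: " + e.getMessage());
            return fallback;
        }
    }
}
```

For the payment example, the call would be the gateway request, the team would be the finance team, and the fallback could be a status that tells the user the payment was not processed.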

12. Plan for Scalability

Scaling your AEM deployment and conducting performance tests are vital steps to ensure your application can handle increased loads and provide a seamless user experience. Here’s a structured plan:

- Define Expectations:

- Identify the expected user base growth and usage patterns.

- Set clear performance objectives, such as response times, throughput, and resource utilization.

- Determine the scalability needs and expected traffic spikes.

- Assess Current Architecture:

- Examine your current AEM architecture, including hardware, software, and configurations. Estimate resource requirements (CPU, memory, storage) based on expected loads and traffic.

- Identify potential bottlenecks and areas for improvement.

- Capacity planning: Consider vertical scaling (upgrading hardware) and horizontal scaling (adding more instances) options.

- Performance Testing:

- Create test scenarios that simulate real-world user interactions.

- Use load testing tools to assess how the system performs under different loads.

- Test for scalability by gradually increasing the number of concurrent users or transactions.

- Monitor performance metrics (response times, error rates, resource utilization) during tests.

- Optimization:

- Fine-tune configurations, caches, and application code.

- Re-run tests to validate improvements.

- Monitoring and Alerts:

- Implement real-time monitoring tools and define key performance indicators (KPIs).

- Set up alerts for abnormal behavior or performance degradation.

Resource: Adobe New Relic One Monitoring Suite

13. Disaster Recovery:

Develop a disaster recovery plan to ensure high availability and data integrity in case of failures.

14. Testing Strategies:

Testing strategies play a critical role in ensuring the robustness and reliability of your applications. Here’s an explanation of various testing strategies specific to AEM:

- Integration Tests focus on examining the interactions between your AEM instance and external systems, such as databases, APIs, or third-party services. The goal is to validate that data is flowing correctly between these systems and that responses are handled as expected.

- Example: You might use integration tests to verify that AEM can successfully connect to an external e-commerce platform, retrieve product information, and display it on your website.

- Resource: For more information on conducting integration tests in AEM, you can refer to this resource

- Unit Tests are focused on testing individual components or functions within your AEM integration. These tests verify the correctness of specific code units, ensuring that they behave as intended.

- Example: You could use unit tests to validate the functionality of custom AEM services or servlets developed for your integration.

- Performance Testing evaluates the ability of your AEM application to handle various loads and traffic levels. It helps in identifying potential performance bottlenecks, ensuring that the application remains responsive and performs well under expected and unexpected loads.

- Penetration Testing assesses the security of your AEM system by simulating potential attacks from malicious actors. This testing identifies vulnerabilities and weaknesses in the AEM deployment that could be exploited by hackers.

15. Testing and Staging Environments:

Create separate development and staging environments to thoroughly test and validate your integration before deploying it to production. Example: Before integrating AEM with a new e-commerce platform, set up a staging environment to simulate real-world scenarios and uncover any issues or conflicts with your current setup.

16. Versioning and Documentation:

Maintain documentation of the third-party API integration, including version numbers and update procedures, to accommodate changes or updates.

Aanchal Sikka

AEM as a Cloud Service Migration Journey

- The Cloud Readiness Analyzer (now known as the Best Practices Analyzer) is an essential initial step before migrating to AEMaaCS. It evaluates various aspects of the existing AEM setup to identify potential obstacles and areas requiring modification or refactoring.

- This comprehensive analysis encompasses the codebase, configurations, integrations, and customizations.

- Its primary goal is to pinpoint components that might not be compatible or optimized for the cloud-native architecture.

- Areas requiring refactoring might include restructuring code to align with cloud-native principles, adjusting configurations for optimal performance, ensuring compatibility with AEMaaCS architecture, and reviewing customizations to adhere to best practices for cloud-based deployment models.

- This assessment aims to enhance scalability, security, and performance by addressing potential obstacles before initiating the migration process.

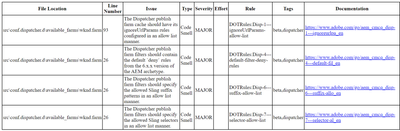

- The Cloud Manager code quality pipeline is a crucial mechanism to assess the existing AEM source code against the modifications and deprecated features in AEMaaCS.

- This pipeline integrates into the development workflow using Adobe's Cloud Manager, analyzing the codebase for compatibility, adherence to coding standards, identification of deprecated functionalities, and detection of potential issues that might impede a successful migration.

- Developers gain insights into areas of the code that require modifications or updates to comply with AEMaaCS standards.

- It enhances code quality, ensures compatibility with the cloud-native environment, and facilitates a smoother transition during the migration process.

- This code quality pipeline provides continuous checks and feedback loops within development environments, empowering teams to address issues early in the development lifecycle.

Anmol Bhardwaj

Tools for Streamlining Development and Maintenance