Robots.txt file in AEM websites | AEM Community Blog Seeding

Robots.txt file in AEM websites by aemtutorial

Abstract

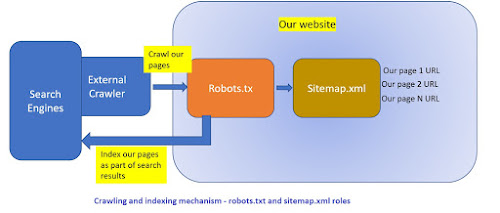

When we think about AEM websites, SEO is one of the major considerations. To ensure that crawlers crawl our website, we need a sitemap.xml and a robots.txt that directs the crawler to the corresponding sitemap.xml. A robots.txt file lives in the root folder of the website. Its role in any website is to act as an entry point and ensure that crawlers access only the relevant items we have defined.

robots.txt in AEM websites

Let us see how we can implement a robots.txt file in our AEM website. There are many ways to do this, but below is one of the easiest. Say we have multiple (multilingual) websites with language roots /en, /fr, /gb, /in. Let us see how we can enable robots.txt in this case.

Add robots.txt in Author

Log in to CRXDE and create a file called 'robots.txt' under the path /content/dam/[sitename]. Ensure the required lines are added to the 'robots.txt' on the AEM Author instance, and then publish the robots.txt.

Add OSGi configurations for URL mapping

Now add the entry below in the OSGi console > configMgr, under 'Apache Sling Resource Resolver Factory'. Add the following mapping in the 'URL Mappings' section:

/content/dam/sitename/robots.txt>/robots.txt$
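As a rough illustration of what the robots.txt from the 'Add robots.txt in Author' step might contain, the Python sketch below assembles a file with one Sitemap line per language root. The language roots come from the example above; the host name www.example.com, the blanket Allow rule, and the /sitemap.xml path under each root are assumptions made for illustration and are not taken from the blog.

```python
# Hypothetical helper (not part of AEM): builds a robots.txt body
# that allows all crawlers and lists one sitemap per language root.
def build_robots_txt(host, language_roots):
    lines = [
        "User-agent: *",  # applies to every crawler
        "Allow: /",       # assumption: nothing is blocked
    ]
    for root in language_roots:
        # Assumption: each language root exposes its own sitemap.xml
        lines.append(f"Sitemap: https://{host}{root}/sitemap.xml")
    return "\n".join(lines) + "\n"


# Language roots from the example above; the host is a placeholder.
robots = build_robots_txt("www.example.com", ["/en", "/fr", "/gb", "/in"])
print(robots)
```

The printed text could then be pasted into the robots.txt file created under /content/dam/[sitename] and adjusted to the real domain and any Disallow rules the site needs.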

Read Full Blog

Robots.txt file in AEM websites

Q&A

Please use this thread to ask the related questions.