This conversation has been locked due to inactivity. Please create a new post.


Hi Team,

We are seeing a datastore growth issue on our test publisher. Initially the size was 111675. We have run garbage collection and offline compaction and removed the debug logger, but the size still keeps increasing day by day and has now reached 198344.

We have followed this document:- https://helpx.adobe.com/in/experience-manager/kb/analyze-unusual-repository-growth.html

We added the repogrowth.log logger and also ran the Disk Usage report, but the report had been running for an hour and we did not get any result from it. Here is a sample of repogrowth.log:

```
15.07.2021 07:05:38.530 *TRACE* [sling-default-2-com.cognifide.actions.core.persist.Resender.5335] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366382] save
15.07.2021 07:05:39.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:05:44.373 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:05:44.440 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366389] refresh
15.07.2021 07:05:44.441 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366389] refresh
15.07.2021 07:05:49.373 *TRACE* [sling-default-4-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:05:54.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:05:59.374 *TRACE* [sling-default-3-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:00.058 *TRACE* [sling-default-2-Registered Service.170361] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366411] save
15.07.2021 07:06:00.058 *TRACE* [sling-default-2-Registered Service.170361] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366415] save
15.07.2021 07:06:01.451 *TRACE* [sling-default-3-health-biz.netcentric.cq.tools.actool.healthcheck.LastRunSuccessHealthCheck] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366423] Adding node [/var/statistics/achistory]
15.07.2021 07:06:04.374 *TRACE* [sling-default-5-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:08.529 *TRACE* [sling-default-1-com.cognifide.actions.core.persist.Resender.5335] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366482] save
15.07.2021 07:06:09.374 *TRACE* [sling-default-3-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:14.374 *TRACE* [sling-default-4-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:14.444 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366496] refresh
15.07.2021 07:06:14.445 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366496] refresh
15.07.2021 07:06:19.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:24.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:29.373 *TRACE* [sling-default-2-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:34.374 *TRACE* [sling-default-5-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
15.07.2021 07:06:38.529 *TRACE* [sling-default-2-com.cognifide.actions.core.persist.Resender.5335] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366548] save
15.07.2021 07:06:39.373 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
```
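To see which services write most often, the repogrowth.log lines can be tallied per thread and operation instead of being read one by one. Below is a minimal Python sketch. The line format is inferred from the sample above, and the `summarize` helper and the stripping of the varying `sling-default-N-` thread-pool prefix are assumptions for illustration, not something the KB article prescribes.

```python
import re
import sys
from collections import Counter

# Assumed line format, inferred from the sample log above:
# <date> <time> *TRACE* [<thread>] org.apache.jackrabbit.oak.jcr.operations.writes [<session>] <operation>
LINE_RE = re.compile(
    r"^(?P<date>\d{2}\.\d{2}\.\d{4}) (?P<time>\S+) \*TRACE\* "
    r"\[(?P<thread>.+?)\] org\.apache\.jackrabbit\.oak\.jcr\.operations\.writes "
    r"\[(?P<session>session-\d+)\] (?P<op>.+)$"
)
POOL_PREFIX = re.compile(r"^sling-default-\d+-")  # pool thread number varies between runs
NODE_PATH = re.compile(r"\[(/[^\]]*)\]")           # e.g. "Adding node [/var/statistics/achistory]"


def summarize(lines):
    """Count operations per (job, operation kind) and the node paths mentioned."""
    ops = Counter()
    paths = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # blank lines and lines in other formats are ignored
        job = POOL_PREFIX.sub("", m["thread"])
        op = m["op"]
        kind = op.split(" [", 1)[0]  # "save", "refresh", "Adding node", ...
        ops[(job, kind)] += 1
        for path in NODE_PATH.findall(op):
            paths[path] += 1
    return ops, paths


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        ops, paths = summarize(f)
    print("Most frequent writers:")
    for (job, kind), n in ops.most_common(20):
        print(f"{n:8d}  {kind:<12}  {job}")
    print("Most frequently written paths:")
    for path, n in paths.most_common(20):
        print(f"{n:8d}  {path}")
```

Run it with the log file as the only argument. `refresh` entries typically do not persist anything themselves, so the `save` and `Adding node` entries are usually the ones worth following up.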


Hi @seemak19887037!

So from your posting I understand that you are experiencing unusual and unexpected repository growth.

Is that correct?

(Although I'm not sure about the figures shared. Is that ~112 GB vs. ~198 GB?)

There are several starting points to understand where this growth comes from:

[...]

Due to this fact, it is recommended to size the disk at least two or three times larger than the initially estimated repository size." (Emphasis in quotation added by me.)
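The rule of thumb quoted above is simple arithmetic. A minimal sketch follows; the helper name and the 100 GB example estimate are hypothetical.

```python
from typing import Tuple


def recommended_disk_range_gb(estimated_repo_gb: float) -> Tuple[float, float]:
    """Return the (two times, three times) disk size for an estimated repository size.

    Applies the "at least two or three times larger" rule of thumb quoted above.
    """
    if estimated_repo_gb < 0:
        raise ValueError("estimated repository size must be non-negative")
    return 2 * estimated_repo_gb, 3 * estimated_repo_gb


if __name__ == "__main__":
    low, high = recommended_disk_range_gb(100)  # hypothetical 100 GB estimate
    print(f"Plan for {low:.0f}-{high:.0f} GB of disk")
```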

Hope that helps!


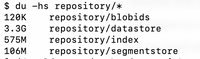

I ran the Disk Usage report again, and this time it completed successfully. Sharing the result with you.

Hi @seemak19887037!

So your overall repository size is nearly 66 GB.

How does that compare to the size of your repository folder on the file system (du -hs crx-quickstart/repository)?
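If a shell is not at hand, a rough equivalent of `du -hs` can be computed in Python. This is a sketch under stated assumptions: it sums apparent file sizes, which can differ somewhat from the allocated blocks that `du` reports, and the helper names are hypothetical. The file-system figure can also be larger than the report because it includes data the report does not count, such as indexes or content not yet reclaimed by garbage collection.

```python
import os
import sys


def dir_size_bytes(root: str) -> int:
    """Sum the apparent sizes of regular files under root (roughly `du -s --apparent-size`)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # do not count symlink targets
            try:
                total += os.path.getsize(path)
            except OSError:
                pass  # file removed meanwhile (e.g. by cleanup) or unreadable
    return total


def human(n: float) -> str:
    """Format a byte count with binary units, similar to `du -h`."""
    for unit in ("B", "K", "M", "G", "T"):
        if n < 1024 or unit == "T":
            return f"{n:.1f}{unit}"
        n /= 1024


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "crx-quickstart/repository"
    print(human(dir_size_bytes(root)), root)
```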

The majority of space is consumed inside the /etc tree.

Could you please drill down into that area to explore where exactly the space is consumed? You can simply click on "etc" in the first cell of the table or directly add the path as a query parameter (/etc/reports/diskusage.html?path=/etc).
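Drilling down only changes the `path` query parameter, so the URLs for each level can be generated directly. A small sketch; the host `http://localhost:4503` (the default AEM publish port) is an assumption, and both helper names are hypothetical.

```python
from typing import List
from urllib.parse import quote


def diskusage_url(host: str, path: str = "/") -> str:
    """Disk Usage report URL for one repository path (hypothetical helper)."""
    return f"{host.rstrip('/')}/etc/reports/diskusage.html?path={quote(path, safe='/')}"


def drilldown_urls(host: str, path: str) -> List[str]:
    """URLs for every level from the root down to `path`, e.g. /, /etc, /etc/packages."""
    parts = [p for p in path.split("/") if p]
    return [diskusage_url(host, "/" + "/".join(parts[:i])) for i in range(len(parts) + 1)]


if __name__ == "__main__":
    for url in drilldown_urls("http://localhost:4503", "/etc/packages"):  # assumed publish host
        print(url)
```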

I assume that there is a lot of space consumed by packages (/etc/packages) but there may be other candidates to check.

On my local instance, /etc is also the biggest part, with about 80% of the total. For me, it's all packages (99%), but that may be different on your end. If it's the same for you, check which package groups hold the biggest part (/etc/reports/diskusage.html?path=/etc/packages).
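Once individual package sizes are known (for example, read off the report for /etc/packages), ranking the groups is a small aggregation. A sketch with hypothetical input; it assumes package paths of the form `/etc/packages/<group>/<package>.zip`, keeps nested groups such as `adobe/cq` as one group, and the `size_by_group` name is illustrative only.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

PREFIX = "/etc/packages/"


def size_by_group(packages: Iterable[Tuple[str, int]]) -> List[Tuple[str, int, float]]:
    """Return (group, total_bytes, percent) rows, largest group first."""
    totals: Dict[str, int] = defaultdict(int)
    for path, size in packages:
        if not path.startswith(PREFIX):
            continue  # ignore anything outside /etc/packages
        rel = path[len(PREFIX):]
        group = rel.rsplit("/", 1)[0] if "/" in rel else "(no group)"
        totals[group] += size
    grand = sum(totals.values())
    rows = [(g, s, 100.0 * s / grand if grand else 0.0) for g, s in totals.items()]
    rows.sort(key=lambda r: r[1], reverse=True)
    return rows


if __name__ == "__main__":
    # Hypothetical sizes in bytes, for illustration only.
    for group, size, pct in size_by_group([
        ("/etc/packages/my_packages/content-backup.zip", 600),
        ("/etc/packages/adobe/cq/hotfix.zip", 200),
    ]):
        print(f"{pct:5.1f}%  {size:>12}  {group}")
```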

Hope that helps!


I drilled down into /etc and found that 99% of the disk usage is in /etc/packages. Drilling down into /etc/packages, a single package alone takes 33% of the disk space. The repository takes up 276G of space.

Here are screenshots of all the results:


Hi @seemak19887037!

There are two things to look at:

Hope that helps!