Question

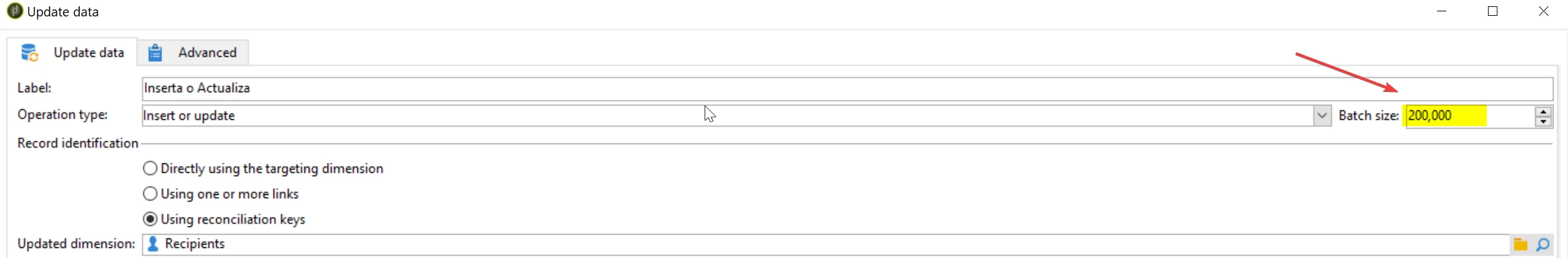

Correct "batch size"?

Is there a good batch size for an update activity?

Some of our workflows take 5 to 8 hours to ingest/update data in our schema.

I'm thinking of going from 10,000 to 200,000. I've tested with 80,000 and 100,000, and everything seems fine.
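To put the change in perspective, here is a rough sketch of the arithmetic. The batch size only changes how many batches a run is split into, while each batch becomes proportionally larger (20 times more rows per batch at 200,000 than at 10,000). The total row count below is a hypothetical figure, since the actual volume per run isn't stated, and `batch_count` is an illustrative helper, not part of any workflow tool.

```python
import math

# Hypothetical total row count for one workflow run (assumption, not from the post).
TOTAL_ROWS = 10_000_000


def batch_count(total_rows: int, batch_size: int) -> int:
    """Return how many batches are needed to process total_rows at batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    # The last batch may be partial, so round up.
    return math.ceil(total_rows / batch_size)


if __name__ == "__main__":
    # Compare the current setting, the tested values, and the proposed one.
    for size in (10_000, 80_000, 100_000, 200_000):
        print(f"batch size {size:>7,}: {batch_count(TOTAL_ROWS, size):>5,} batches")
```

With the hypothetical 10 million rows, this gives 1,000 batches at 10,000 and 50 batches at 200,000: far fewer round trips, but each one handles much more data at once.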

Is there anything I should be aware of with such a large increase?