Query on Splitting Large Data Exports into Batches

Hi all,

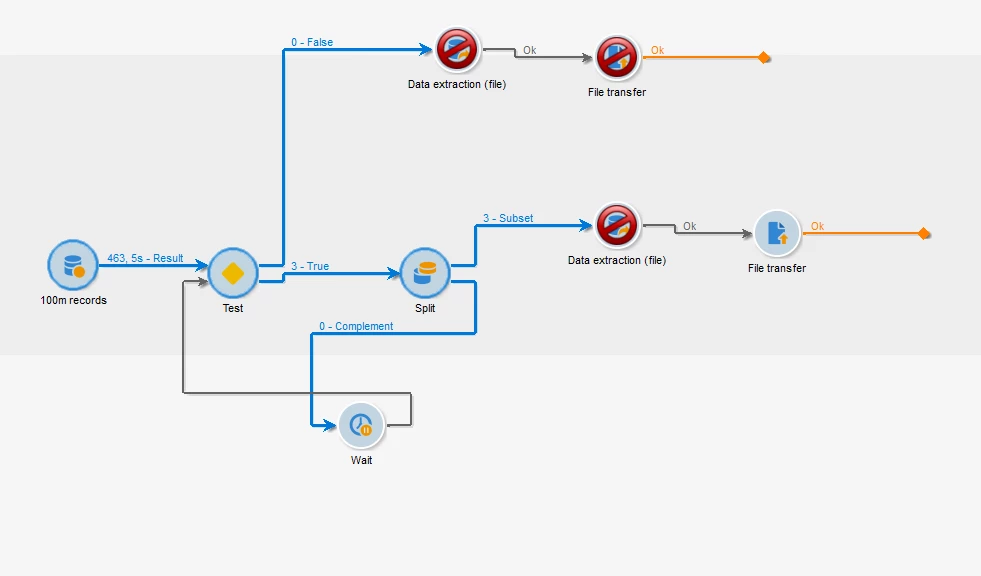

I have a question regarding my requirements. I have implemented an ETL technical workflow that exports data in CSV format to our downstream applications.

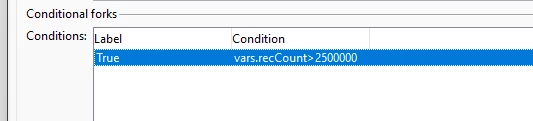

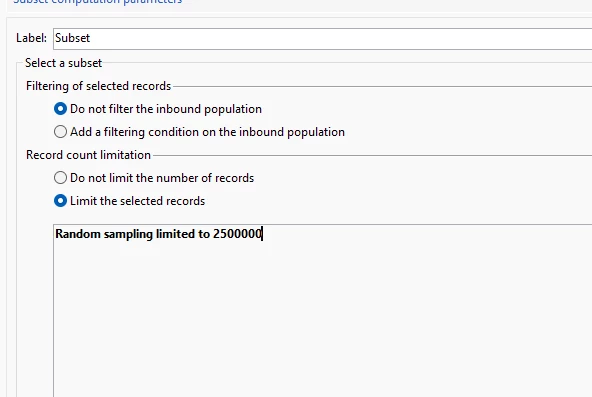

My requirement is to split the exported response data into multiple batch files. For instance, we sometimes target more than 10 million individuals in an hour. When the broadLogData is exported, all 10 million responses go into a single file, which takes a long time to generate and produces a very large file. What I would like instead is that whenever the export exceeds 2.5 million records, it is split into batch files of at most 2.5 million records each, so a 10-million-record export would produce four files.
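To make the expected behaviour concrete, here is a minimal standalone Python sketch of the split I have in mind. It is not workflow code from the platform, only an illustration of the logic: the function name `split_csv`, the `_partN` file naming, and the assumption that the export has a single header row are all hypothetical.

```python
import csv
from pathlib import Path


def split_csv(source, out_dir, batch_size=2_500_000):
    """Split a CSV into files of at most `batch_size` data rows each.

    Assumes the first line of `source` is a header row, which is
    repeated at the top of every batch file. Returns the list of
    batch file paths in the order they were written.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    stem = Path(source).stem
    written = []

    with open(source, newline="", encoding="utf-8") as src:
        reader = csv.reader(src)
        header = next(reader, None)
        if header is None:
            return written  # empty input: nothing to split

        out = None
        writer = None
        for index, row in enumerate(reader):
            # Start a new batch file every `batch_size` data rows.
            if index % batch_size == 0:
                if out is not None:
                    out.close()
                part = index // batch_size + 1
                path = out_dir / f"{stem}_part{part}.csv"  # hypothetical naming
                out = open(path, "w", newline="", encoding="utf-8")
                writer = csv.writer(out)
                writer.writerow(header)
                written.append(path)
            writer.writerow(row)

        if out is not None:
            out.close()

    return written
```

With the default `batch_size`, a 10-million-row export would come out as four files, and an export of 2.5 million rows or fewer would stay as a single file. The rows are streamed one at a time, so the full export never has to fit in memory.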

Could you help me understand how to achieve this, either with custom code or with an out-of-the-box option, if one exists?

Thank you.