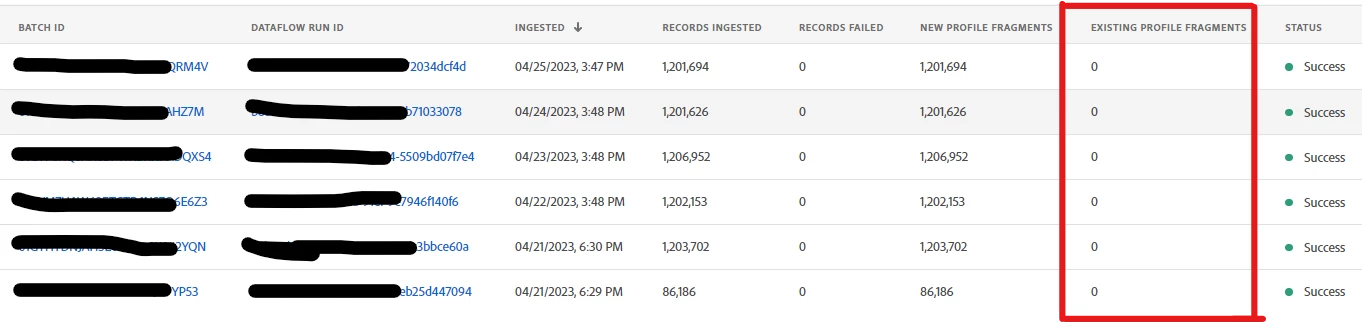

Incremental load data discrepancy between New Profile Fragments and Existing Profile Fragments

Hi team,

I recently ran a batch data ingestion with incremental load enabled. The incremental load runs daily, and for each batch ID the records are ingested into the dataset. However, all records show up only under New Profile Fragments as new records, and zero records show up under Existing Profile Fragments as existing or modified records. (This is specific to the XDM-ExperienceEvent class dataset.)

According to the source, the daily count of new and updated records is low, but AEP shows a different count. What could be the reason?

All mappings are correct.

Screenshots are attached for your reference.

Regards,

Sandip Surse