Prevent robots.txt file from being downloaded

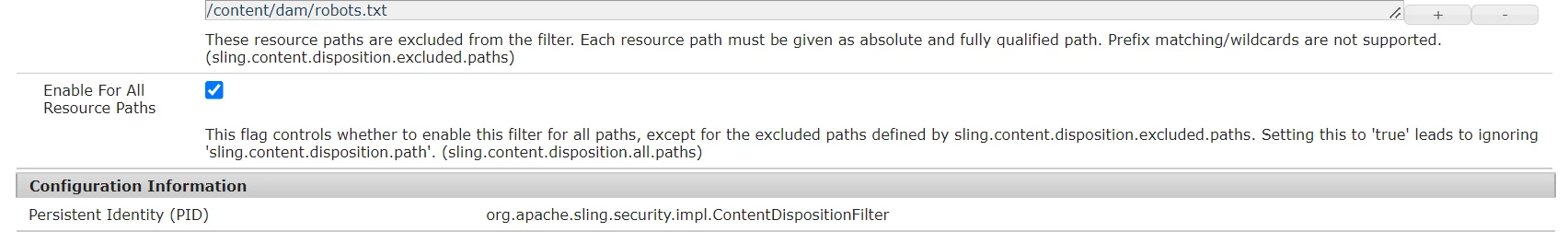

My robots.txt file is stored under /content/dam, and I have added rewrite rules for it in my dispatcher. I have also added the robots.txt path to the excluded paths in the Content Disposition configuration. Yet the robots.txt file still gets downloaded instead of being displayed in the browser.
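For context, a rewrite rule of this kind might look like the sketch below. This is only an illustration, not my exact rule: it assumes the file lives at /content/dam/site/robots.txt, that mod_rewrite is enabled, and that the rule sits in the virtual host context.

```apache
# Hypothetical sketch: serve the DAM-hosted file at the site root.
RewriteEngine On
# PT passes the rewritten path on so the dispatcher handler can serve it.
RewriteRule ^/robots\.txt$ /content/dam/site/robots.txt [PT,L]
```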

In the Content Disposition config, if I add the entire path up to robots.txt, the file fails to load in the browser. If I add /content/dam*:text/plain, it works.

But that entry affects every text file under /content/dam, not just robots.txt. So I made this change in the dispatcher rewrite rules file instead:

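    # Anchor the pattern and escape the dot so only this exact path matches.
    <LocationMatch "^/content/dam/site/robots\.txt$">
        # Serve the file as plain text.
        ForceType text/plain
        # Requires mod_headers; tells the browser to display, not download.
        Header set Content-Disposition inline
    </LocationMatch>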