Hello @rakesh_h2, there are multiple ways to keep your non-prod domains from being crawled and indexed by search engines, mainly Google.

1. Disallow everything in robots.txt. This is a must-do for non-prod environments!

2. Use `noindex`, `nofollow` robots meta tags in pages. This is not a practical solution in your case, because you want to restrict the whole domain rather than a few pages. This approach is usually used to keep specific pages on live domains out of the index.

3. Don't provide a sitemap.xml. This is also not a very practical solution, because we usually have business logic and dispatcher configurations in place to generate and deliver sitemap.xml.

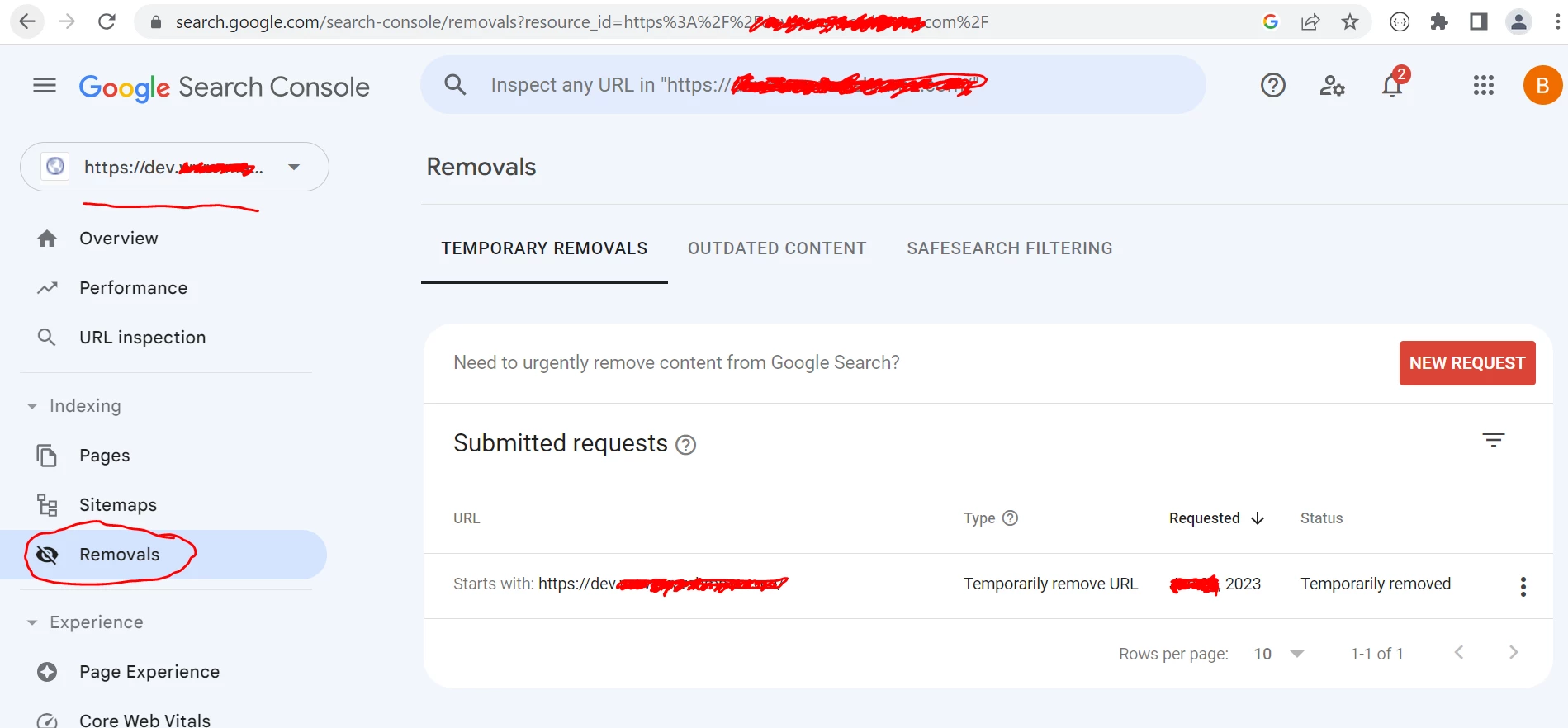

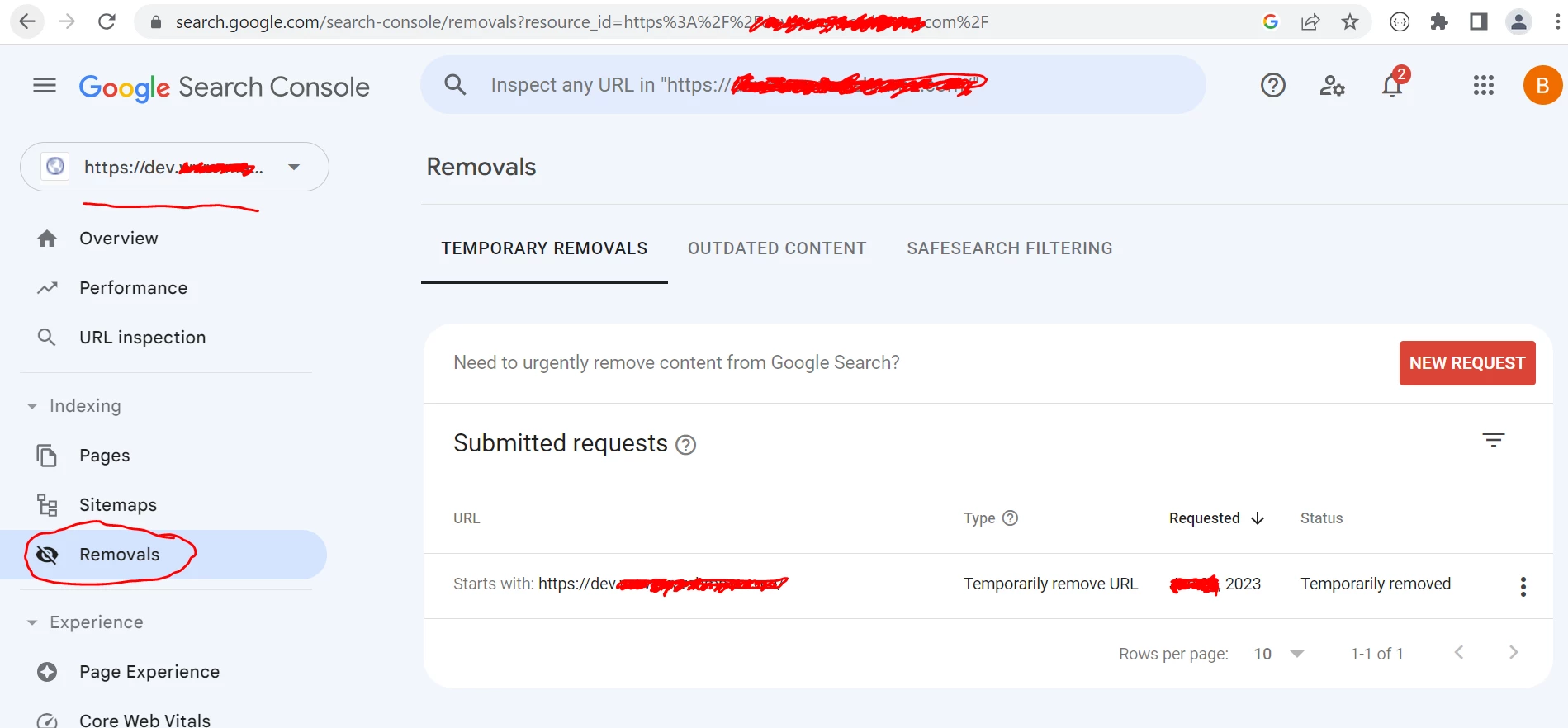

4. Use Google Search Console. This might turn out to be your best bet. Register on the console (it is free of cost) and create a property for your non-prod domains, then verify them. Once your domains are verified, submit a removal request. This removes your non-prod domains from Google Search results, so they will no longer appear there (a removal made this way is temporary, about six months, which is why the robots.txt rule below still matters). Once they stop appearing in Google Search, you can remove the site verification code (or keep it if you want to; it's up to you).
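For point 2, a page opts out of indexing with a tag such as `<meta name="robots" content="noindex, nofollow">` in its `<head>`. If you do use it on specific pages, a quick check like the one below can confirm which directives a rendered page actually carries. This is a minimal sketch using Python's standard `html.parser`; the helper names `robots_directives` and `is_noindex` are hypothetical, and it only inspects the robots meta tag in the HTML, nothing else.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # "noindex, nofollow" -> {"noindex", "nofollow"}
            for directive in attr_map.get("content", "").split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html: str) -> set[str]:
    """Hypothetical helper: return all robots meta directives in a page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def is_noindex(html: str) -> bool:
    """Hypothetical helper: True if the page asks crawlers not to index it."""
    directives = robots_directives(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
print(is_noindex(page))  # True
```

This also shows why the meta tag approach does not scale to a whole domain: every page has to carry the tag, whereas robots.txt is a single file per host.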

Now your robots.txt with

```
User-agent: *
Disallow: /
```

will work perfectly fine. Make sure this is in place after the removal; otherwise the domains will get re-indexed and start appearing in Google Search again.
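Before relying on the rule, you can sanity-check it locally. The sketch below uses Python's standard `urllib.robotparser` to confirm that the two-line robots.txt above blocks every path for a crawler user agent. The URLs are hypothetical placeholders for a non-prod domain, and Python's parser only approximates how Googlebot reads robots.txt, so treat this as a quick check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The non-prod robots.txt: block every crawler from every path.
NON_PROD_ROBOTS = """\
User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_fully_blocked(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> bool:
    """True if the given user agent may fetch none of the URLs."""
    parser = build_parser(robots_txt)
    return not any(parser.can_fetch(agent, url) for url in urls)


# Hypothetical non-prod URLs, for illustration only.
urls = [
    "https://dev.example.com/",
    "https://dev.example.com/content/site/en.html",
    "https://dev.example.com/sitemap.xml",
]
print(is_fully_blocked(NON_PROD_ROBOTS, urls))  # True
```

Note that this only verifies crawling is disallowed; it says nothing about URLs Google has already indexed, which is what the Search Console removal handles.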

Thanks

- Bilal