Hi @kayzee,

It is quite challenging to handle such scenarios directly within Workspace.

Recently, I encountered a similar issue in my application, though in my case pageviews were being inflated by a different bug rather than by duplicate calls alone.

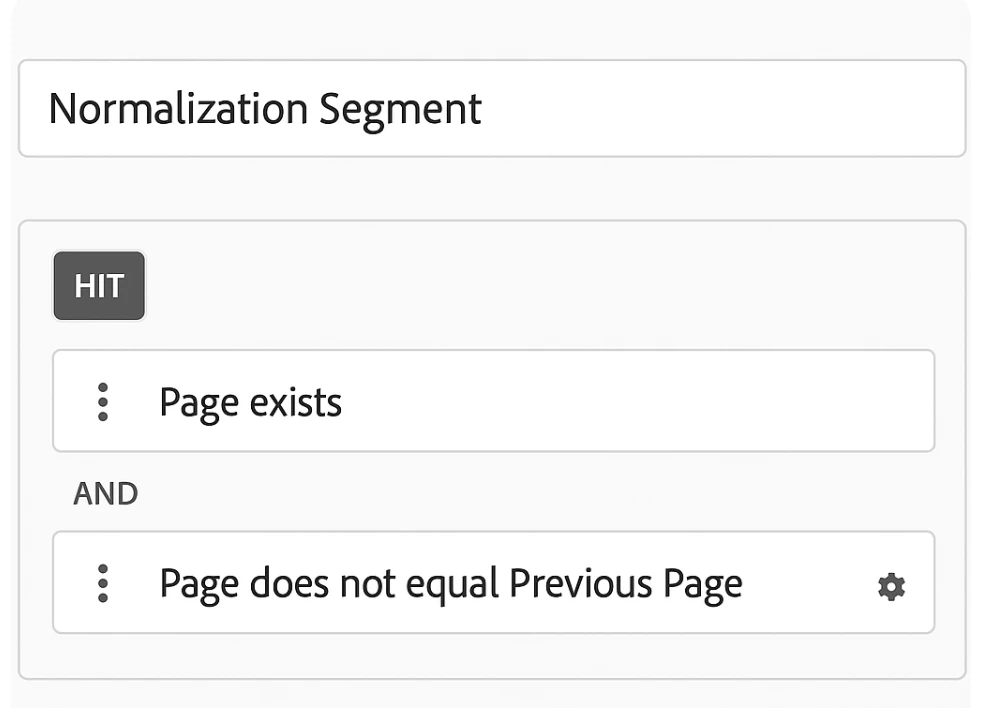

Duplicate pageview calls can be normalised in Workspace, but only through workarounds that rest on assumptions. For the result to be reliable, we need to be 100% certain that the duplicate pageviews occurred consistently for all users, on known page(s), within a known date range. Only then is it reasonably safe to divide the pageviews for the affected page(s) by 2.

In essence, the approach I used was similar to this SQL-style logic:

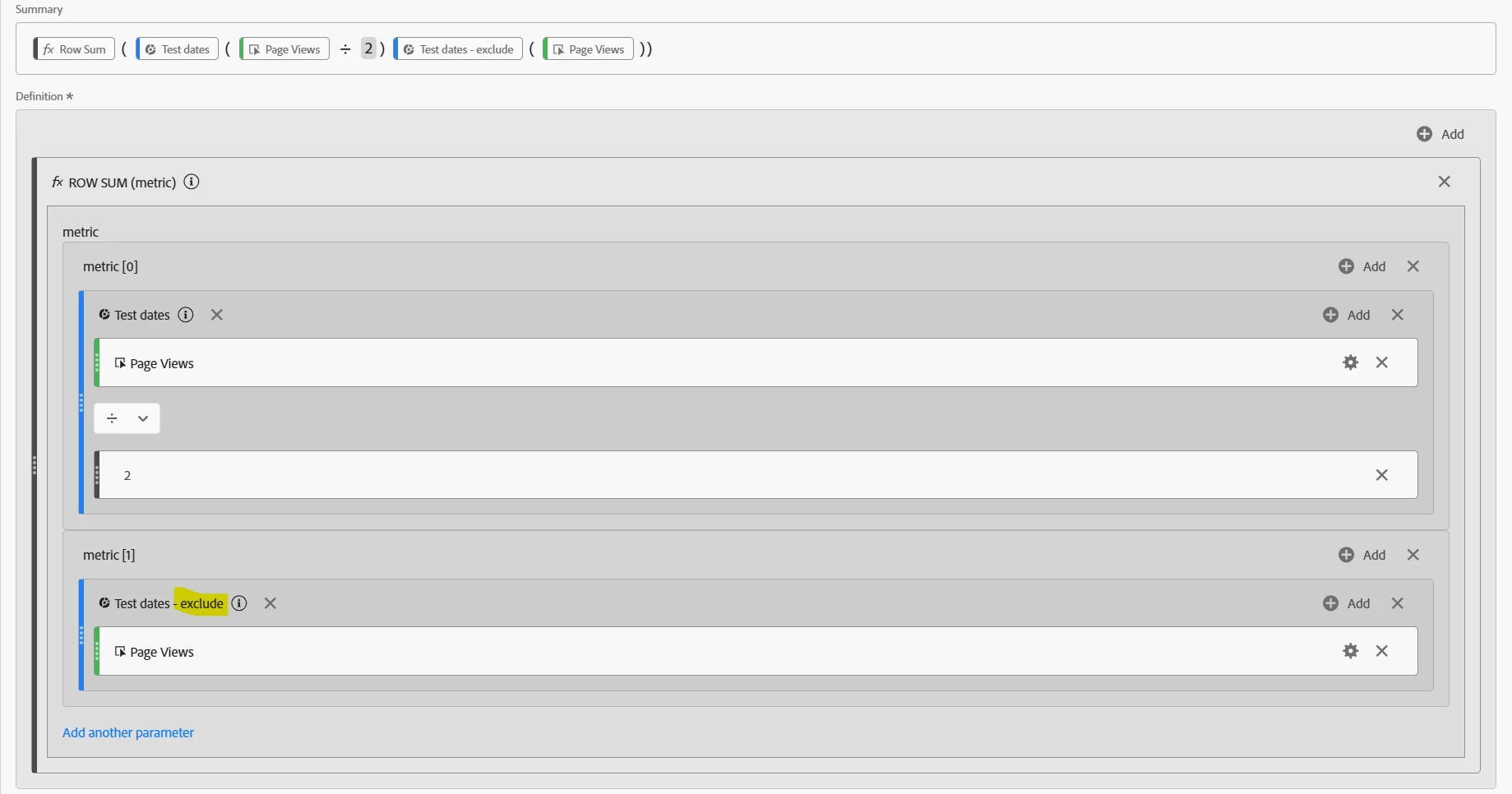

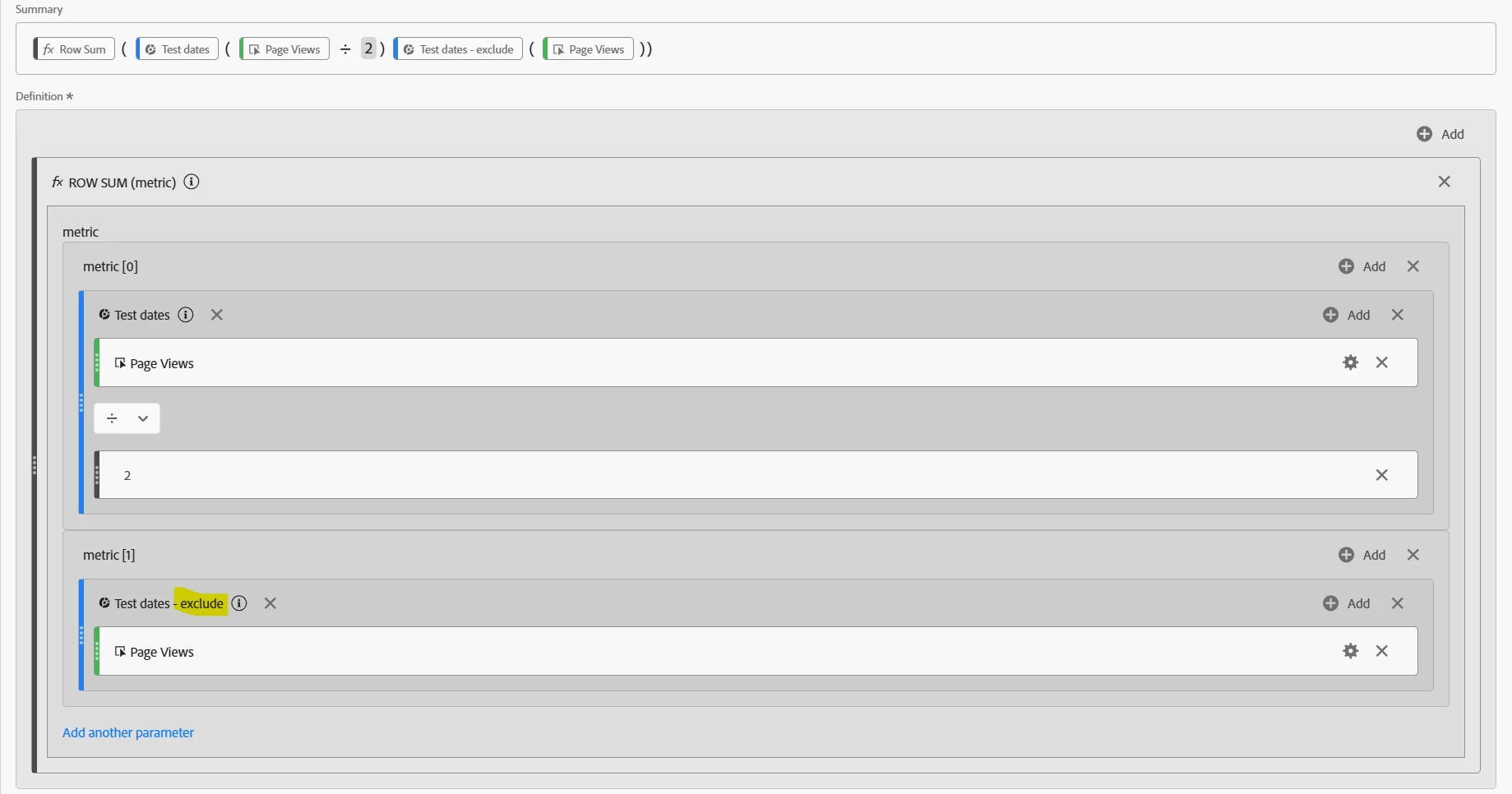

`ROW_SUM(ADJ_PAGEVIEW_1, ADJ_PAGEVIEW_2)`

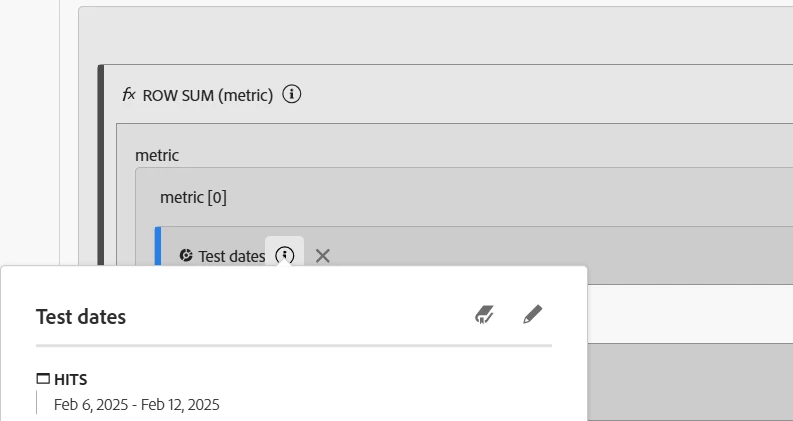

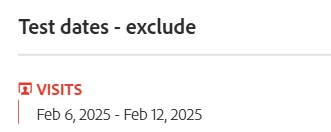

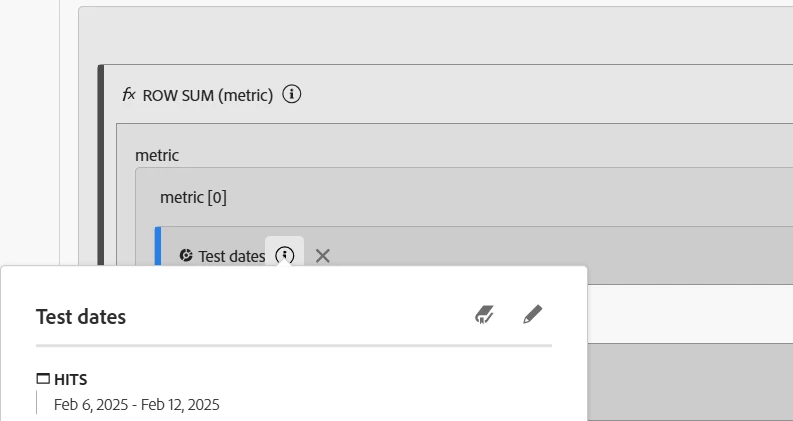

`ADJ_PAGEVIEW_1`: Pageviews on the affected page(s) during the impacted time period, divided by 2 to account for the duplication.

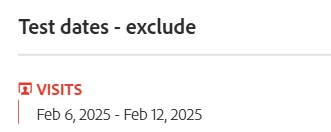

`ADJ_PAGEVIEW_2`: Actual pageview count, excluding the affected page(s) during the impacted time period.
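To make the logic easier to follow outside Workspace, here is a minimal Python sketch of the same adjustment. It assumes a hypothetical daily export of `(day, page, pageviews)` rows; `AFFECTED_PAGES`, `IMPACT_START`, `IMPACT_END` and `DUPLICATION_FACTOR` are illustrative names, not Workspace settings, and the sample rows are made up. Rows inside the affected page/date window play the role of `ADJ_PAGEVIEW_1`, all other rows play `ADJ_PAGEVIEW_2`, and adding the two per day roughly mirrors the `ROW_SUM` step.

```python
from collections import defaultdict
from datetime import date

# Hypothetical configuration: which pages were affected, and when.
AFFECTED_PAGES = {"checkout", "product-detail"}
IMPACT_START = date(2024, 3, 1)
IMPACT_END = date(2024, 3, 10)
# Assumes uniform duplication: every real view was recorded exactly twice.
DUPLICATION_FACTOR = 2


def is_affected(day: date, page: str) -> bool:
    """True if the row falls inside the impacted page/date window."""
    return page in AFFECTED_PAGES and IMPACT_START <= day <= IMPACT_END


def adjusted_pageviews(rows):
    """Return {day: adjusted pageviews} from (day, page, pageviews) rows.

    adj_1 stands in for ADJ_PAGEVIEW_1: affected rows divided by the factor.
    adj_2 stands in for ADJ_PAGEVIEW_2: all other rows, counted as-is.
    The per-day total adj_1 + adj_2 stands in for ROW_SUM(...).
    """
    adj_1 = defaultdict(float)
    adj_2 = defaultdict(float)
    for day, page, views in rows:
        if is_affected(day, page):
            adj_1[day] += views / DUPLICATION_FACTOR
        else:
            adj_2[day] += views
    days = sorted(set(adj_1) | set(adj_2))
    return {day: adj_1[day] + adj_2[day] for day in days}


# Made-up sample data for illustration only.
rows = [
    (date(2024, 2, 29), "checkout", 100),  # before the impact window
    (date(2024, 3, 1), "checkout", 240),   # inside the window: halved to 120
    (date(2024, 3, 1), "home", 500),       # unaffected page, counted as-is
    (date(2024, 3, 11), "checkout", 110),  # after the impact window
]
print(adjusted_pageviews(rows))
# → {datetime.date(2024, 2, 29): 100.0, datetime.date(2024, 3, 1): 620.0, datetime.date(2024, 3, 11): 110.0}
```

Splitting the data into two mutually exclusive buckets before summing is what keeps the trend line continuous: pages outside the window are never halved, so only the inflated slice is corrected.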

By combining segments with calculated metrics in this way, I was able to approximate a more accurate pageview trend.

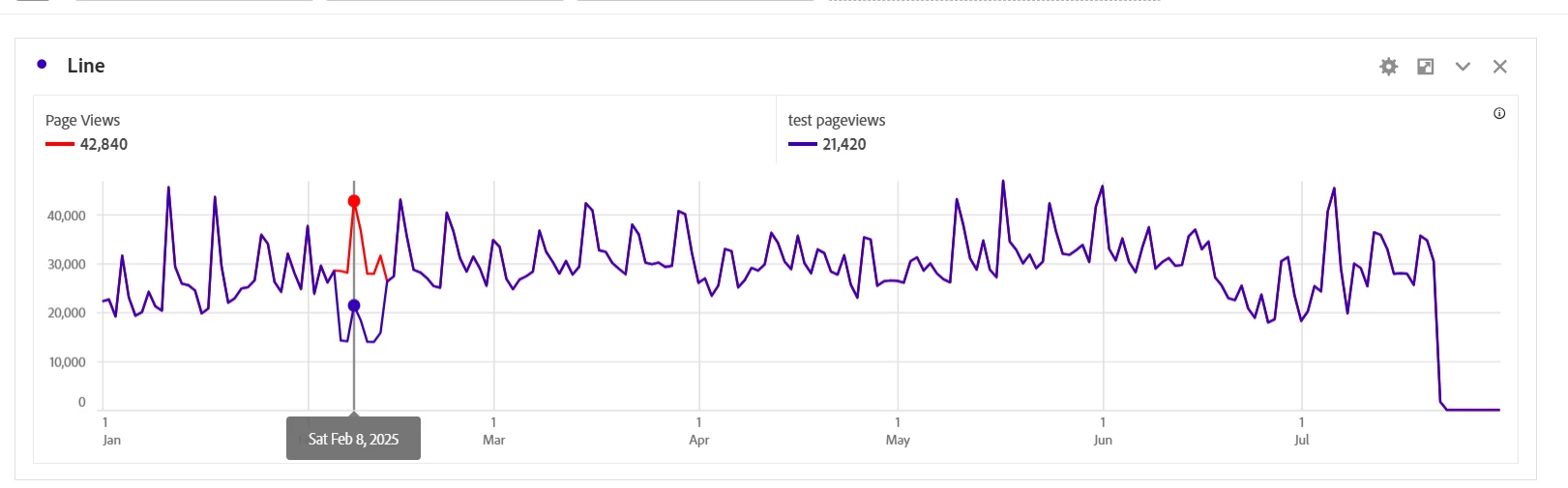

Example:

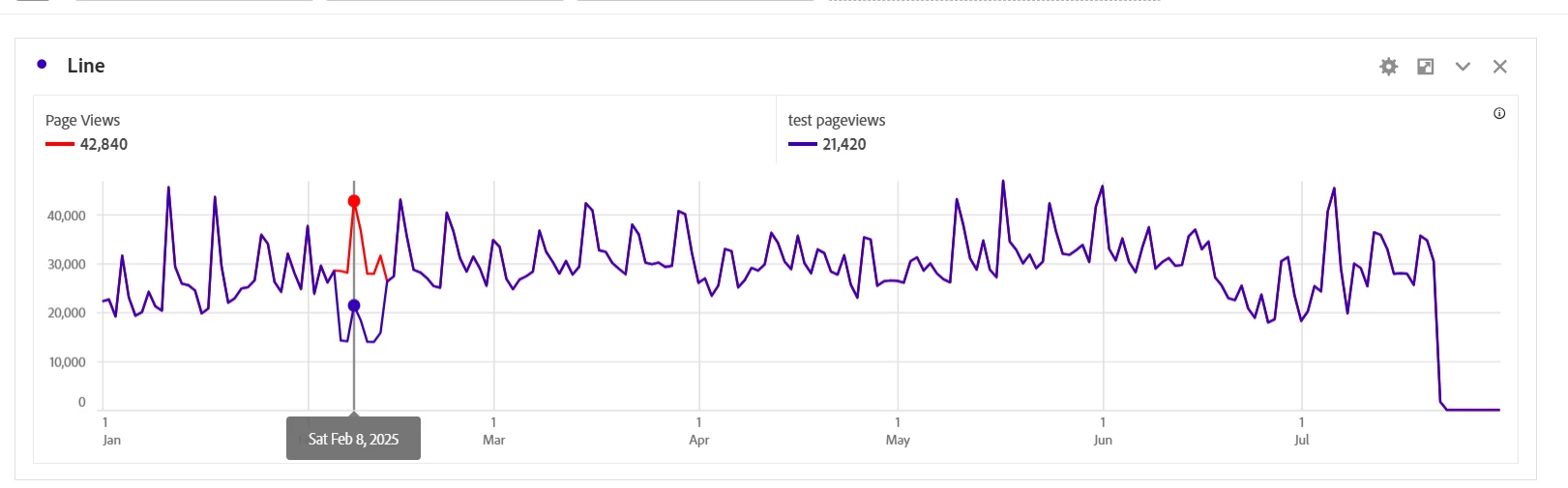

I tested the approach by applying a factor of 0.5 to the affected pageviews (i.e., halving them), and it appears to work as expected in my case.

This workaround is useful for trend analysis and reporting only when the duplicate behavior is uniform and predictable. If the duplication varies (say, some users send each pageview twice while others send it three or more times), this approach will not yield accurate numbers.
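A quick back-of-the-envelope check shows why. The Python sketch below uses made-up numbers, assuming two hypothetical user groups that each generate 100 real pageviews but with different duplication factors. Halving the recorded total no longer recovers the true count; a per-group correction would only work if each group's factor were known, which is typically not the case.

```python
# Made-up illustration: 100 real pageviews from each of two user groups.
real_views = {"group_a": 100, "group_b": 100}
# Assumed duplication: group_a records every view twice, group_b three times.
duplication = {"group_a": 2, "group_b": 3}

recorded = {g: real_views[g] * duplication[g] for g in real_views}
recorded_total = sum(recorded.values())  # 200 + 300 = 500

true_total = sum(real_views.values())    # 200
halved_total = recorded_total / 2        # 250.0, i.e. 25% too high

# Exact correction needs each group's own factor, which is rarely known.
corrected_total = sum(recorded[g] / duplication[g] for g in recorded)

print(true_total, halved_total, corrected_total)  # → 200 250.0 200.0
```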


I learned about the `ROW_SUM` function from the recently published Calculated Metric Playbook by @mandygeorge.

Thanks,

Nitesh