Question

Data warehouse extract ERROR

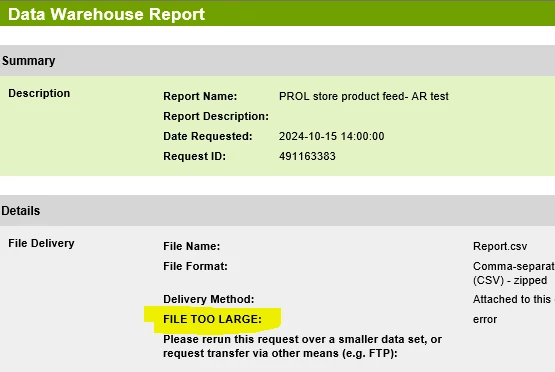

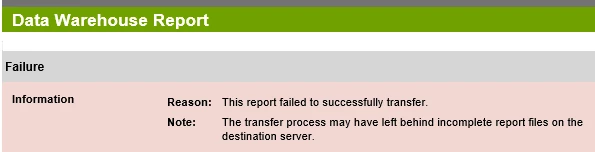

I'm re-running an existing job whose destination is a GCP bucket, but it fails with the status "Error - Failure To Send", and the email notification I received reports the error shown above. Could someone explain what this error means and how we can resolve it?

As an alternative, I tried emailing the report to myself, but the email notification said the file was too large for email ("FILE TOO LARGE"). I'm still wondering what the error above means.