Behind the Curtain of a High-Performance Bidder Service

Introduction

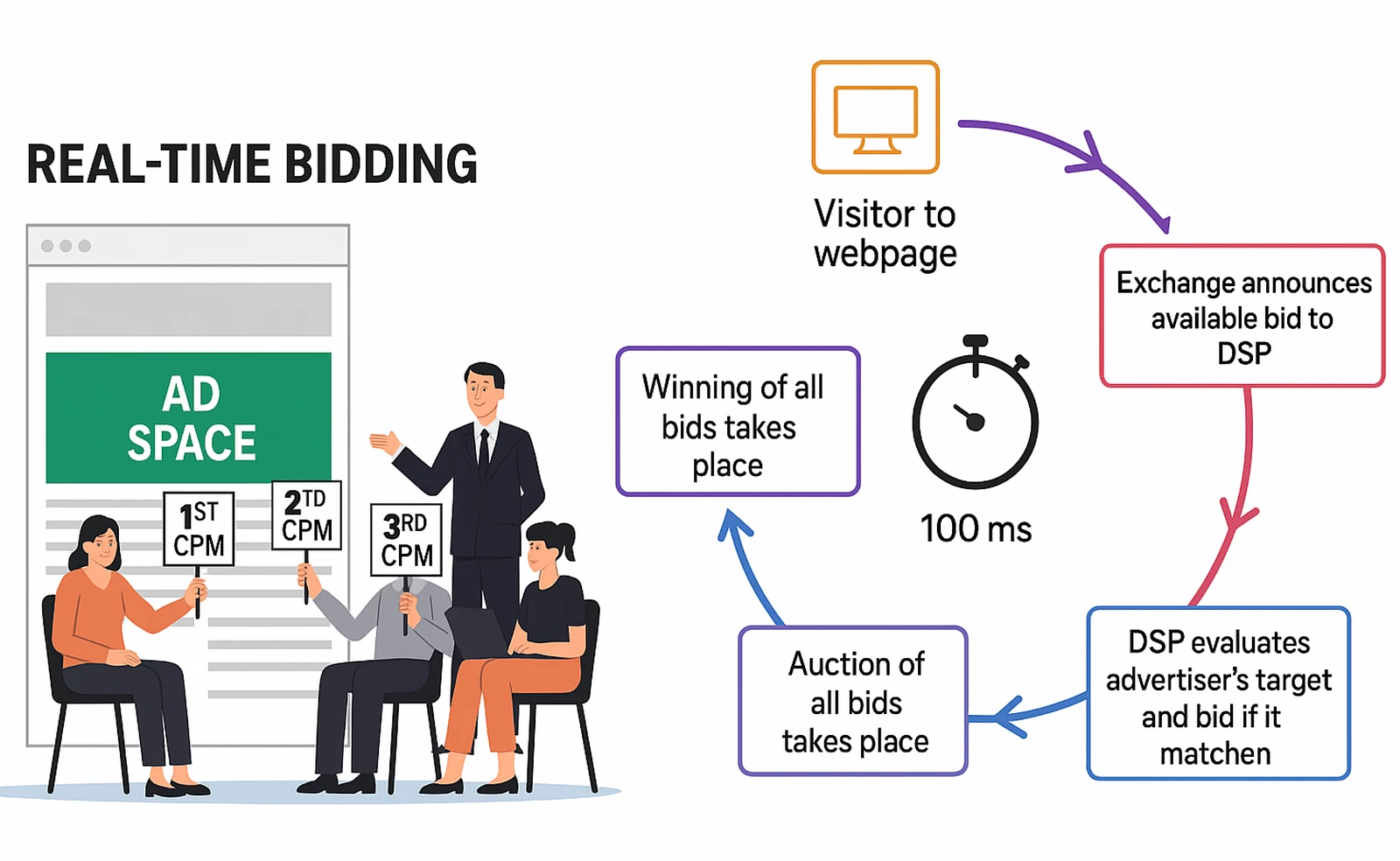

Real-time bidding (RTB) is a fundamental component of Demand Side Platforms (DSPs) within the realm of programmatic advertising. It enables advertisers and publishers (website owners) to purchase and sell ad inventory in real time through digital auctions.

Left image: A publisher page with an ad space (usually referred to as an impression opportunity or ad slot), showing where a Demand Side Platform would bid for an ad slot. More information on Adobe DSP.

Right image: An auction flow that is completed within 100 ms. Whenever a visitor lands on a webpage, publishers work with Supply Side Platforms (SSPs) to auction the available ad slot to various Demand Side Platforms (DSPs, or buyers) through bid requests (usually HTTP requests). DSPs decide whether to bid based on various targeting criteria. The exchange selects the winning ad and delivers it. More information on Adobe SSP partners.

At Adobe, we manage more than 400 billion auctions each day while maintaining a p95 response time of less than 60 milliseconds. We have implemented efficient data structures and developed internal systems to support this scale and these low-latency requirements.

In this blog, we offer a high-level overview of how such a system operates at scale, sharing architectural and performance strategies that help ensure low-latency, high-volume decisioning.

Sample Bid Request

Let us first look at a sample bid request.

```json
{
  "id": "1234567890",
  "imp": [
    {
      "id": "1",
      "banner": {
        "w": 300,
        "h": 250,
        "pos": 1,
        "format": [
          { "w": 300, "h": 250 }
        ]
      },
      "bidfloor": 0.50,
      "bidfloorcur": "USD"
    }
  ],
  "site": {
    "id": "site123",
    "name": "Example Site",
    "domain": "example.com",
    "cat": ["IAB1"],
    "page": "https://example.com/article123"
  },
  "device": {
    "ua": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)...",
    "ip": "123.123.123.123",
    "geo": {
      "country": "USA",
      "region": "CA",
      "city": "San Francisco",
      "lat": 37.7749,
      "lon": -122.4194
    },
    "devicetype": 2,
    "os": "Windows",
    "osv": "10",
    "language": "en"
  },
  "user": {
    "id": "user123",
    "buyeruid": "buyer123"
  },
  "at": 2,
  "tmax": 120,
  "cur": ["USD"]
}
```

Our service processes bid requests similar to the one above. Let us look at some important fields in this request body.

Key Fields Explained:

- id: Unique ID for the bid request.

- imp: Array of impression objects, each representing an ad opportunity. Includes details such as banner size and bid floor.

- site: Information about the website where the ad will appear. Includes the domain, page URL, and IAB category.

- device: Information about the user’s device. Includes the IP address, user agent (ua), OS, location, and more.

- user: Contains user ID information, including the buyer UID used for user syncing.
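As a rough illustration, the sketch below pulls the fields listed above out of a request like the sample into a small typed view that later filtering stages could consume. The names `BidRequestView`, `Impression`, and `parse_bid_request` are hypothetical, the sketch only handles banner impressions, and a production bidder would likely use a faster, schema-driven decoder rather than `json.loads`.

```python
import json
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Impression:
    imp_id: str
    sizes: List[Tuple[int, int]]  # (width, height) pairs the ad slot accepts
    bidfloor: float               # minimum bid, in bidfloorcur
    bidfloorcur: str


@dataclass
class BidRequestView:
    request_id: str
    impressions: List[Impression]
    domain: Optional[str]
    country: Optional[str]
    buyeruid: Optional[str]
    tmax_ms: Optional[int]


def parse_bid_request(raw: str) -> BidRequestView:
    """Extract only the fields the filtering stages need (banner impressions only)."""
    req = json.loads(raw)
    imps = []
    for imp in req.get("imp", []):
        banner = imp.get("banner", {})
        # Prefer the explicit format list; fall back to the top-level w/h.
        formats = banner.get("format") or [{"w": banner.get("w"), "h": banner.get("h")}]
        imps.append(Impression(
            imp_id=imp["id"],
            sizes=[(f["w"], f["h"]) for f in formats],
            bidfloor=imp.get("bidfloor", 0.0),
            bidfloorcur=imp.get("bidfloorcur", "USD"),
        ))
    user = req.get("user", {})
    return BidRequestView(
        request_id=req["id"],
        impressions=imps,
        domain=req.get("site", {}).get("domain"),
        country=req.get("device", {}).get("geo", {}).get("country"),
        buyeruid=user.get("buyeruid"),
        tmax_ms=req.get("tmax"),
    )


# Usage with a trimmed copy of the sample request.
sample = '''{"id": "1234567890",
 "imp": [{"id": "1", "banner": {"w": 300, "h": 250, "format": [{"w": 300, "h": 250}]},
          "bidfloor": 0.50, "bidfloorcur": "USD"}],
 "site": {"domain": "example.com"},
 "device": {"geo": {"country": "USA"}},
 "user": {"buyeruid": "buyer123"},
 "tmax": 120}'''
view = parse_bid_request(sample)
print(view.impressions[0].sizes, view.country)  # [(300, 250)] USA
```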

Scaling to Billions of Auctions

Operating at the scale of billions of auctions per day requires careful orchestration of compute resources, data, and logic. A distributed architecture powered by container orchestration ensures high availability and regional load balancing. Each instance (or pod) of the Bidder service independently processes requests, applying a series of filtering and decision-making steps to identify the most promising ad to bid with — or respond with a “no bid” if no match is found.

Bidder Requirements

Now that we know what type of requests are handled, let us understand the requirements of the service.

- Pick the best ad among all the ads configured on the platform, honouring all associated targeting, goal, pacing, and budget constraints.

- Keep p95 latency below 60 ms.

- Be cost-efficient, making optimal use of compute and memory resources while handling 400 billion requests per day.

Bidder Challenges

Given the above requirements, let us look at the challenges we faced:

- Include the continuously growing number of ads on the platform, configured as part of the campaigns that advertisers run across regions, in the decision-making process.

- Honour a varied set of targeting rules, such as targeting a specific geography, certain user segments, or only certain categories of publishers, fast enough to meet the stringent latency requirements.

- Ensure campaign budgets are spent evenly and efficiently over time, honouring the goals configured for each ad.

Building Bidder Service

Before getting into how these challenges are addressed, let us look at the categories of data the Bidder service needs to decide whether to bid.

Data Categories

- Ads Data: This dataset contains information about all the ads linked to campaigns from multiple advertisers on our DSP, including ad types, sizes, and more. It also includes a wide range of targeting criteria, such as target audience preferences and publisher page categories, along with the goals set by advertisers. It is the foundation on which the filtering stages operate to progressively narrow down the set of ads. This data resides in a database.

- User Profiles: Our system is integrated with multiple Data Management Platforms (DMPs). A dedicated pipeline continually ingests user profile data into a user key-value store, so the store always holds the latest information about user attributes, behaviours, and affiliations. This lets advertisers precisely target user segments provided by the various DMPs.

- Pacing Data: Pacing data determines the maximum number of bids an ad can participate in within a defined time interval, which ensures that each ad's spend is spread evenly over time within its budget. The pacing system continuously generates this data for every ad in the system and stores it in its local DB.

- Other Data:

ML models: We predict the quality of incoming auction requests using ML models. These predictions help decide both whether to bid on an incoming auction request and at what price. ML pipelines continuously refine the models based on the performance of our previous bids, and the resulting models are uploaded, along with their metadata, to central storage (Amazon S3) at regular intervals.

Geolocation data: This binary database file maps the IPv4 and IPv6 addresses present in bid requests to a country, subdivision, city, and postal code. A separate pipeline uploads this file to a central S3 bucket.

AdsTxt data: This data lists the authorized sellers of a publisher's digital inventory. More information is available in the ads.txt specification. A separate system converts this data into a binary format, writes it to a file, and uploads the file to S3.
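To show what the AdsTxt data is used for, here is a minimal sketch of an authorized-seller lookup. It parses the plain-text ads.txt form (records of `<ad system domain>, <seller account ID>, <DIRECT|RESELLER>[, <certification authority ID>]`, with `#` comments and `key=value` variable lines) rather than the internal binary format mentioned above, whose layout is not described. The function names are hypothetical, and where the bidder gets the seller's ad system domain and account ID from is left out, since the sample request does not carry them.

```python
from typing import Dict, Set, Tuple

# Publisher domain -> set of (ad system domain, seller account ID) pairs.
AuthorizedSellers = Dict[str, Set[Tuple[str, str]]]


def parse_ads_txt(publisher: str, text: str, index: AuthorizedSellers) -> None:
    """Add the records from one publisher's ads.txt file to the lookup index."""
    sellers = index.setdefault(publisher.lower(), set())
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or "=" in line.split(",")[0]:
            continue                          # skip blank lines and variables such as contact=...
        fields = [f.strip() for f in line.split(",")]
        if len(fields) < 3:
            continue                          # malformed record
        ad_system, account_id, relationship = fields[0].lower(), fields[1], fields[2].upper()
        if relationship in ("DIRECT", "RESELLER"):
            sellers.add((ad_system, account_id))


def is_authorized(index: AuthorizedSellers, publisher: str,
                  ad_system: str, account_id: str) -> bool:
    """True if the publisher's ads.txt lists this seller account (domains compared case-insensitively)."""
    return (ad_system.lower(), account_id) in index.get(publisher.lower(), set())


index: AuthorizedSellers = {}
parse_ads_txt("example.com", """
# ads.txt for example.com
contact=adops@example.com
sspdomain.com, pub-123, DIRECT, abc123
reseller.com, 456, RESELLER
""", index)
print(is_authorized(index, "example.com", "sspdomain.com", "pub-123"))  # True
print(is_authorized(index, "example.com", "sspdomain.com", "pub-999"))  # False
```

Keeping the parsed records in a hash set makes each check a constant-time lookup, which fits the goal of answering from memory during request processing.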

Warmup: Getting ready to handle requests

The bidder is designed as a high-throughput, low-latency system. To maintain optimal performance, it’s crucial to preload as much relevant data as possible directly into the bidder pods’ memory, reducing reliance on external calls during request processing. With this approach in mind, we built an in-house data delivery system named Telephone.

Telephone: Data Delivery

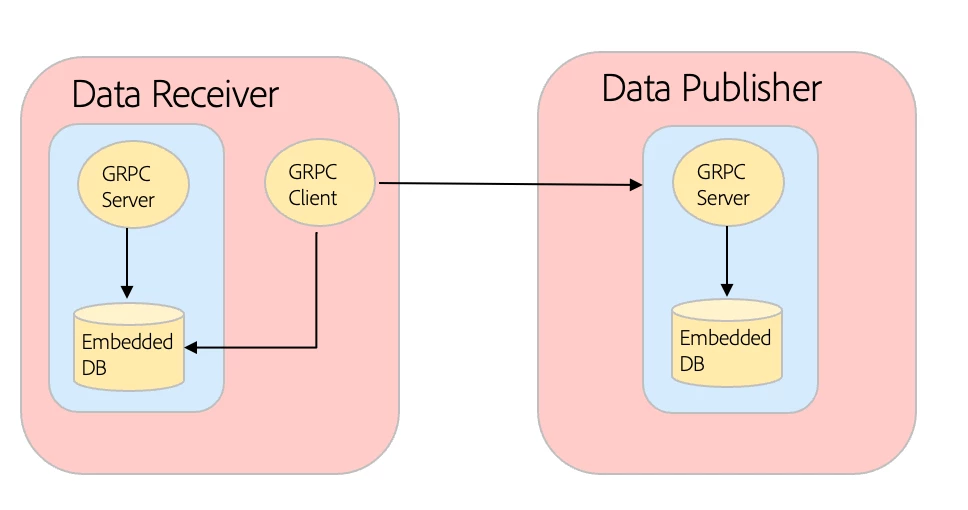

Telephone is built on embedded storage and protocol-based messaging. Services define gRPC interfaces that support fine-grained, timestamp-ordered updates to the data. Data publisher services continuously generate data in the defined format and store it in the embedded store. Client services independently subscribe to the data they are interested in and continuously poll for the latest updates. In effect, Telephone acts as a message queue between data publisher services and the clients that consume their data.
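Telephone's wire format and API are not described here, so the sketch below models only the client-side idea from the paragraph above: apply fine-grained, timestamped updates in order, ignore stale ones, and keep a cursor to send with the next poll. `Update`, `SubscribedDataset`, and the last-write-wins rule are assumptions for illustration, not Telephone's actual interface, and the gRPC polling call itself is omitted.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class Update:
    key: str               # e.g. an ad ID
    timestamp: int         # assigned by the publisher; newer updates have larger values
    value: Optional[dict]  # None marks a deletion


class SubscribedDataset:
    """In-memory copy of one subscribed dataset, kept current by polling (hypothetical)."""

    def __init__(self) -> None:
        self.records: Dict[str, dict] = {}
        self.versions: Dict[str, int] = {}  # latest applied timestamp per key
        self.cursor = 0                     # highest timestamp applied; sent with the next poll

    def apply(self, updates: Iterable[Update]) -> None:
        for u in sorted(updates, key=lambda u: u.timestamp):
            # Skip stale or duplicate updates that arrive out of order.
            if u.timestamp <= self.versions.get(u.key, -1):
                continue
            self.versions[u.key] = u.timestamp
            if u.value is None:
                self.records.pop(u.key, None)
            else:
                self.records[u.key] = u.value
            self.cursor = max(self.cursor, u.timestamp)


ds = SubscribedDataset()
ds.apply([Update("ad-1", 10, {"bid": 1.0}), Update("ad-2", 11, {"bid": 2.0})])
ds.apply([Update("ad-1", 9, {"bid": 0.5}),  # stale: older than version 10
          Update("ad-2", 12, None)])        # deletes ad-2
print(ds.records, ds.cursor)  # {'ad-1': {'bid': 1.0}} 12
```

Tracking a version per key is what makes fine-grained updates safe: a single changed ad can be shipped without resending the whole dataset, and replays or reordering do not roll it back.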

At Adobe, we have also built an in-house service named streamers that sits between the data publisher services and their clients. This service creates multiple copies of the data generated by the publisher services and scales independently, adding copies as consumer services scale, which prevents bottlenecks on the publisher services.

The Bidder service pulls Ads data and Pacing data through the Telephone framework. All of the ads data is ingested before the service starts accepting any requests, and continuous pulls keep it up to date afterward.

The Bidder service also downloads ML models, geolocation data files, and any other files required by IAB standards to support the remaining functionality.

Once all of the above data is loaded into the pod, the pod is marked ready to process bid requests.
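In a container-orchestrated deployment, "marked ready" typically maps to a readiness check that the orchestrator polls before routing traffic to the pod. A minimal sketch, assuming one hypothetical loader callable per dataset (initial Telephone sync, S3 downloads); `WarmupGate` is an illustrative name, not part of the described system.

```python
import threading
from typing import Callable, Dict, Set


class WarmupGate:
    """Reports ready only after every required dataset has loaded once (hypothetical)."""

    def __init__(self, loaders: Dict[str, Callable[[], None]]) -> None:
        self.loaders = loaders
        self.loaded: Set[str] = set()
        self._ready = threading.Event()  # safe to read from request-handling threads

    def warm_up(self) -> None:
        for name, load in self.loaders.items():
            load()                       # a failure propagates and leaves the pod not ready
            self.loaded.add(name)
        self._ready.set()                # reached only if every loader succeeded

    def is_ready(self) -> bool:
        # A readiness endpoint would report success only when this returns True.
        return self._ready.is_set()


gate = WarmupGate({
    "ads": lambda: None,        # hypothetical: initial Ads data sync via Telephone
    "pacing": lambda: None,     # hypothetical: initial Pacing data sync via Telephone
    "ml_models": lambda: None,  # hypothetical: download models and metadata from S3
    "geo_db": lambda: None,     # hypothetical: download the geolocation file from S3
})
print(gate.is_ready())  # False
gate.warm_up()
print(gate.is_ready())  # True
```

Gating readiness this way means a freshly scaled pod never serves a request with a partially loaded ads index, at the cost of a longer startup.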

Bid Request Processing:

When a bid request arrives, Bidder conducts an internal auction in which all the ads configured on the platform compete for the impression opportunity described in the bid request.

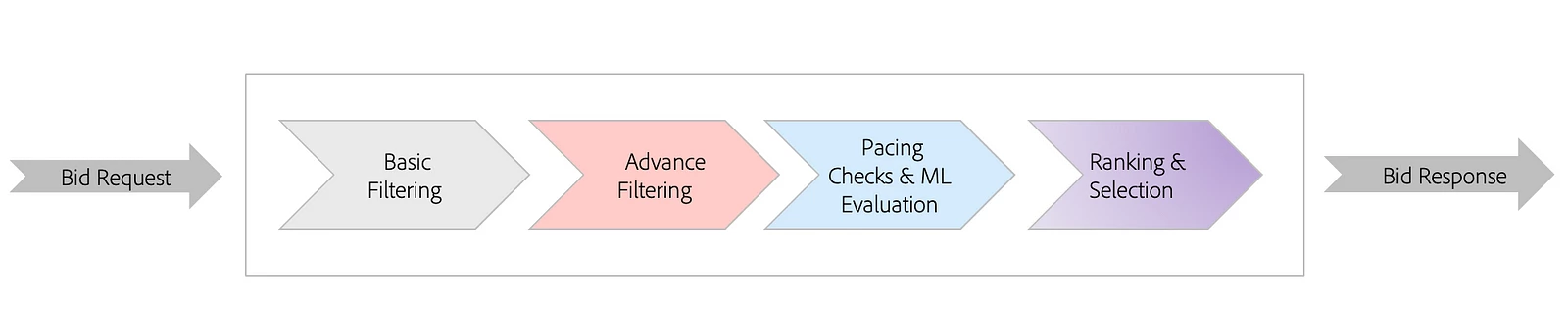

Filtering Pipeline: From Broad to Best Match

The Bidder uses a tiered filtering model, where ads move through increasingly granular stages:

Basic Filtering: The purpose of this stage is to filter out ads that don’t match the attributes of the incoming bid request, based on targeting criteria such as geographical location and ad type. For instance, if a bid request originates from the United States, this stage filters out ads that are not intended for that region: ads from an Australian real estate firm that chooses not to show them to US audiences are removed here.

Given the potentially large volume of ads and targeting dimensions, naively matching each ad against each criterion would be inefficient. To avoid this, we precompute inverted indexes using bitmaps, enabling fast, set-based operations that reduce filtering to near-constant time per request. The indexes are computed by a separate Indexer service, and Bidder pulls the latest indexes through the Telephone framework. When a request arrives, intersecting the relevant bitmaps yields all the ads that conform to the bid request, and these ads move to the next stage of filtering.

For example, when a request targets a specific geography and ad type, we intersect relevant bitmaps to instantly identify ads that match both criteria.
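The bitmap intersection described above can be sketched as follows. Each (dimension, value) pair maps to a bitmap whose bit *i* is set if ad *i* accepts that value, and ads that set no rule on a dimension accept every value of it. Python integers stand in for the bitmaps, and the dimensions and ad records are hypothetical; a production index would more likely use a compressed bitmap structure, which the source does not specify.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

DIMENSIONS = ("geo", "size")  # hypothetical targeting dimensions for this sketch


class BitmapIndex:
    """Inverted index from targeting value to a bitmap of the ads that accept it.

    Bit i of every bitmap refers to ads[i]; Python ints act as arbitrary-length bitsets.
    """

    def __init__(self, ads: List[dict]) -> None:
        self.ads = ads
        self.all_ads = (1 << len(ads)) - 1
        self.postings: Dict[Tuple[str, str], int] = defaultdict(int)
        # Ads that set no rule on a dimension accept every value of it.
        self.untargeted: Dict[str, int] = defaultdict(int)
        for i, ad in enumerate(ads):
            for dim in DIMENSIONS:
                values = ad.get(dim)
                if values is None:
                    self.untargeted[dim] |= 1 << i
                else:
                    for v in values:
                        self.postings[(dim, v)] |= 1 << i

    def match(self, request: Dict[str, str]) -> List[str]:
        """Return IDs of ads whose targeting accepts every indexed dimension in the request."""
        result = self.all_ads
        for dim in DIMENSIONS:
            if dim in request:
                accepts = self.postings.get((dim, request[dim]), 0) | self.untargeted.get(dim, 0)
                result &= accepts  # set intersection as a bitwise AND
        return [ad["id"] for i, ad in enumerate(self.ads) if (result >> i) & 1]


index = BitmapIndex([
    {"id": "ad-us-banner", "geo": ["USA"], "size": ["300x250"]},
    {"id": "ad-au-only", "geo": ["AUS"], "size": ["300x250"]},
    {"id": "ad-any-geo", "size": ["728x90", "300x250"]},
])
print(index.match({"geo": "USA", "size": "300x250"}))  # ['ad-us-banner', 'ad-any-geo']
```

Each intersection is a word-wise AND, so its cost grows with the number of ads divided by the machine word size rather than with the number of individual ad-versus-rule comparisons.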

Advanced Filtering: This layer filters based on user profile information. At this stage, only the ads whose targeting criteria match the bid request attributes remain. The purpose of this stage is to filter out ads further based on ever-changing user behaviour and interests. For example, a company advertising running shoes may want to target audiences who are interested in sports. If the user in a specific bid request doesn’t belong to any sports-related segment, the ad is filtered out and does not advance to the next stage.

We store billions of profiles in a constantly updated, low-latency key-value store. Bidder makes a single external call to this store to fetch the user's segments, after which it performs the further filtering.
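Once the user's segments come back from that single lookup, the remaining filtering can run entirely in memory. A minimal sketch, assuming "any-of" semantics (an ad passes if the user is in at least one of its target segments) and treating ads with no audience targeting as always passing; the function name and segment IDs are hypothetical.

```python
from typing import Dict, FrozenSet, List, Set


def filter_by_segments(candidates: List[str],
                       required_segments: Dict[str, FrozenSet[str]],
                       user_segments: Set[str]) -> List[str]:
    """Keep ads whose audience targeting matches at least one of the user's segments.

    Ads absent from required_segments have no audience targeting and always pass.
    """
    kept = []
    for ad_id in candidates:
        wanted = required_segments.get(ad_id)
        if wanted is None or not wanted.isdisjoint(user_segments):
            kept.append(ad_id)
    return kept


# Hypothetical result of the single key-value lookup for this user.
user_segments = {"dmp-a:sports", "dmp-b:travel"}
print(filter_by_segments(
    ["ad-running-shoes", "ad-cookware", "ad-untargeted"],
    {"ad-running-shoes": frozenset({"dmp-a:sports", "dmp-a:fitness"}),
     "ad-cookware": frozenset({"dmp-b:cooking"})},
    user_segments,
))  # ['ad-running-shoes', 'ad-untargeted']
```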

Pacing Checks & ML Evaluation: The Pacing System controls the number of times a placement can participate in an auction within a fixed time interval. This limit is calculated from the placement’s win rate and its spending target for that interval, which ensures that the placement’s budget is used smoothly and efficiently over time. For example, if a placement has to spend 100 dollars in a day, the system ensures that the spending occurs throughout the day rather than within a single hour. Candidates that pass the pacing checks are then scored using ML models, and all ads that get through this stage are considered good candidates for bidding.
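The exact pacing formula is not given, so the sketch below assumes a simple expected-spend model: if each bid wins with probability `win_rate` and each win costs `cost_per_win`, then the number of bids needed to hit an interval's spend target is `target / (win_rate * cost_per_win)`. The bidder side is modeled as a per-interval counter against that cap. All names, the formula, and the numbers are hypothetical; the sketch also ignores how a cap would be shared across many independent pods.

```python
import math
from typing import Dict


def bids_per_interval(spend_target: float, win_rate: float, cost_per_win: float) -> int:
    """Hypothetical cap: expected spend = bids * win_rate * cost_per_win, solved for bids."""
    if win_rate <= 0 or cost_per_win <= 0:
        return 0
    return math.floor(spend_target / (win_rate * cost_per_win))


class PacingGate:
    """Counts auction participations per placement within the current interval."""

    def __init__(self, caps: Dict[str, int], interval_seconds: int) -> None:
        self.caps = caps  # assumed to be produced by the pacing system
        self.interval_seconds = interval_seconds
        self.window = -1
        self.used: Dict[str, int] = {}

    def try_participate(self, placement_id: str, now_seconds: float) -> bool:
        window = int(now_seconds // self.interval_seconds)
        if window != self.window:  # a new interval starts: reset all counters
            self.window = window
            self.used = {}
        count = self.used.get(placement_id, 0)
        if count >= self.caps.get(placement_id, 0):
            return False           # cap reached for this interval
        self.used[placement_id] = count + 1
        return True


# $100/day spread over 24 hourly intervals, assuming a 20% win rate and $0.002 per win.
cap = bids_per_interval(spend_target=100 / 24, win_rate=0.2, cost_per_win=0.002)
print(cap)  # 10416
pacing = PacingGate({"placement-1": cap}, interval_seconds=3600)
print(pacing.try_participate("placement-1", now_seconds=0.0))  # True
```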

Ranking & Selection: Choose the highest-ranking eligible ad for the auction response.

Each stage progressively narrows the candidate pool while minimizing latency through optimized data structures and smart caching.

Bid Decision: The best-ranked ad is selected for the bid; if no ad survives the pipeline, a “no bid” response is issued.
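The ranking and bid decision steps can be sketched as choosing the highest-scoring candidate whose bid clears the impression's floor, and returning nothing (a “no bid”) otherwise. The `Candidate` fields and the use of a single score for ranking are assumptions; the source does not say how ML scores and prices are combined.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    ad_id: str
    score: float      # hypothetical ranking score, e.g. derived from ML predictions
    bid_price: float  # CPM in the request currency


def select_bid(candidates: List[Candidate], bidfloor: float) -> Optional[Candidate]:
    """Return the highest-scoring candidate that clears the floor, or None for "no bid"."""
    eligible = [c for c in candidates if c.bid_price >= bidfloor]
    if not eligible:
        return None
    return max(eligible, key=lambda c: c.score)


winner = select_bid(
    [Candidate("ad-a", score=0.8, bid_price=0.40),  # below the 0.50 floor
     Candidate("ad-b", score=0.6, bid_price=0.75),
     Candidate("ad-c", score=0.7, bid_price=0.60)],
    bidfloor=0.50,  # matches the sample request's imp.bidfloor
)
print(winner.ad_id if winner else "no bid")  # ad-c
print(select_bid([], bidfloor=0.50))         # None
```

In OpenRTB, a no-bid is commonly signaled with an HTTP 204 No Content response, so a `None` here would typically translate into that status.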

Key Takeaways

- Data Locality Is Critical: Preloading all necessary datasets into memory ensures ultra-low-latency decisioning under high throughput.

- Filtering by Stages Boosts Efficiency: A tiered filtering pipeline — starting broad (geo, ad size) and narrowing down (user segments, pacing, ML) — allows the system to quickly eliminate non-eligible ads and focus compute where it matters.

- Precomputed Indexes Save Time: Bitmap-based inverted indexes enable fast set operations, reducing matching overhead to near-constant time per request.

- Telephone Powers Real-Time Data Delivery: A custom gRPC-based data distribution system ensures services get timely updates without burdening data producers, supporting elasticity and horizontal scaling.

- Pacing and ML Ensure Balanced Delivery: Budget pacing avoids over-delivery, while ML scoring increases the likelihood of winning auctions with the best possible ad.

- Independent Pod Design Ensures Scalability: Each Bidder instance operates autonomously, enabling horizontal scaling while maintaining consistent performance.

Summary

The primary goal of the bidder service is to ensure every eligible ad on the platform has a fair opportunity to compete in auctions, while also pacing campaign budgets evenly and consistently meeting strict SLA requirements.