Datastore issue in AEM test Publisher

Hi Team,

We are facing a datastore issue on our test publisher. Initially its size was 111675; we have run garbage collection and offline compaction and removed the debug logger, but it is still increasing day by day and has now reached 198344.

We have followed this document: https://helpx.adobe.com/in/experience-manager/kb/analyze-unusual-repository-growth.html

We configured a logger writing to repogrowth.log and also ran the Disk Usage report, but it ran for an hour without returning any result. Here is a sample of repogrowth.log:

15.07.2021 07:05:38.530 *TRACE* [sling-default-2-com.cognifide.actions.core.persist.Resender.5335] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366382] save

15.07.2021 07:05:39.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:05:44.373 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:05:44.440 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366389] refresh

15.07.2021 07:05:44.441 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366389] refresh

15.07.2021 07:05:49.373 *TRACE* [sling-default-4-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:05:54.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:05:59.374 *TRACE* [sling-default-3-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:00.058 *TRACE* [sling-default-2-Registered Service.170361] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366411] save

15.07.2021 07:06:00.058 *TRACE* [sling-default-2-Registered Service.170361] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366415] save

15.07.2021 07:06:01.451 *TRACE* [sling-default-3-health-biz.netcentric.cq.tools.actool.healthcheck.LastRunSuccessHealthCheck] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366423] Adding node [/var/statistics/achistory]

15.07.2021 07:06:04.374 *TRACE* [sling-default-5-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:08.529 *TRACE* [sling-default-1-com.cognifide.actions.core.persist.Resender.5335] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366482] save

15.07.2021 07:06:09.374 *TRACE* [sling-default-3-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:14.374 *TRACE* [sling-default-4-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:14.444 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366496] refresh

15.07.2021 07:06:14.445 *TRACE* [discovery.connectors.common.runner.5002ee23-1d23-43cf-a1f5-5fa9079589dc.connectorPinger] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366496] refresh

15.07.2021 07:06:19.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:24.374 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:29.373 *TRACE* [sling-default-2-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:34.374 *TRACE* [sling-default-5-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh

15.07.2021 07:06:38.529 *TRACE* [sling-default-2-com.cognifide.actions.core.persist.Resender.5335] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366548] save

15.07.2021 07:06:39.373 *TRACE* [sling-default-1-com.day.cq.rewriter.linkchecker.impl.LinkInfoStorageImpl.173880] org.apache.jackrabbit.oak.jcr.operations.writes [session-69917828] refresh
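To see which jobs are behind the entries in a log like this, it can help to count operations per thread. Below is a minimal Python sketch, assuming every relevant line has the layout shown in the sample above; the function name `summarize` is hypothetical. It strips the `sling-default-<n>-` pool prefix so that repeated runs of the same job are grouped together. Note that `refresh` entries do not persist anything by themselves; `save` and node additions are the ones that actually write to the repository.

```python
import re
import sys
from collections import Counter

# Matches entries like:
# 15.07.2021 07:05:38.530 *TRACE* [<thread>] org.apache.jackrabbit.oak.jcr.operations.writes [session-78366382] save
LINE_RE = re.compile(
    r"^(?P<ts>\S+ \S+) \*TRACE\* \[(?P<thread>[^\]]+)\] "
    r"org\.apache\.jackrabbit\.oak\.jcr\.operations\.writes "
    r"\[(?P<session>session-\d+)\] (?P<op>.+)$"
)
# Sling pool threads are named "sling-default-<n>-<job>"; dropping the prefix
# groups all runs of the same job regardless of which pool thread ran it.
POOL_PREFIX_RE = re.compile(r"^sling-default-\d+-")


def summarize(lines):
    """Count logged operations per (job, operation) pair."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # blank lines and entries in another format
        job = POOL_PREFIX_RE.sub("", m.group("thread"))
        op = m.group("op").split(" [", 1)[0]  # "Adding node [/path]" -> "Adding node"
        counts[(job, op)] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python <script> <path to repogrowth.log>
    with open(sys.argv[1], encoding="utf-8") as log:
        for (job, op), n in summarize(log).most_common(20):
            print(f"{n:7d}  {op:<12}  {job}")
```

Pass the path of the log file as the only argument (for example crx-quickstart/logs/repogrowth.log; adjust to wherever your logger writes). Jobs with a high `save` or `Adding node` count are the first candidates to investigate.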

Hi @seemak19887037!

So from your posting I understand that you are experiencing unusual and unexpected repository growth.

Is that correct?

(Although I'm not sure about the figures shared. Is that ~112 GB vs. ~198 GB?)

There are several starting points to understand where this growth comes from:

- To determine size and distribution on the file system, you can use operating system tools such as "du -s" on the instance folder(s). This helps tell instance, log, repository and datastore usage apart, so you can see where the unexpected growth is happening and whether you need to take a deeper look inside the repository (see the next recommendation).

- To get a better view of the distribution within the repository, you may want to check AEM's Disk Usage report as outlined in this blog post. You can drill down and check the disk usage distribution on each level of the content hierarchy. Please be aware that the tool performs a full tree iteration of the selected path, which may take some time and can put considerable load on the instance, depending on the size and structure of your repository. This may help to identify the areas causing repository growth. Is it under /etc/packages? Is it /content/dam? Or some other specific location?

- Please be aware that there are regular maintenance jobs that should be running on the AEM instance. While in the long term all of these jobs are meant to improve instance performance and reduce repository size, they may sometimes cause repository growth in the short term, especially when combined with offline compaction (see also the next remark).

Some of the relevant jobs are:

One important remark from this documentation:

"So, when you run online revision cleanup after offline revision cleanup the following happens:

- After the first online revision cleanup run the repository will double in size. This happens because there are now two generations that are kept on disk.

- During the subsequent runs, the repository will temporarily grow while the new generation is created and then stabilize back to the size it had after the first run, as the online revision cleanup process reclaims the previous generation.

[...]

Due to this fact, it is recommended to size the disk at least two or three times larger than the initially estimated repository size." (Emphasis in quotation added by me.)

- Please also check whether there are regular jobs that work with packages or Package Manager. Each package installation creates a snapshot of the affected area of the repository before the actual installation takes place. Cleaning up unused packages (which also cleans up the corresponding snapshots) is often a good way to save disk space.

- You may want to check this blog post on managing repository size growth in AEM.
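If "du" is not at hand (or you want the same breakdown on any platform), the first check can be approximated with a short script. This is a minimal Python sketch with hypothetical function names; unlike "du", it sums apparent file sizes rather than allocated disk blocks, so the numbers may differ somewhat. Pointing it at crx-quickstart and then at crx-quickstart/repository (which typically contains folders such as segmentstore and, with a file data store, datastore) helps separate the usage areas mentioned above.

```python
import os
from pathlib import Path


def tree_size(path: Path) -> int:
    """Sum of apparent file sizes (bytes) below `path`, similar in spirit to `du -s`.

    `du` reports allocated disk blocks, so its numbers can differ slightly
    (block rounding, sparse files, hard links).
    """
    total = 0
    for root, _dirs, files in os.walk(path):  # symlinked directories are not followed
        for name in files:
            try:
                total += os.lstat(os.path.join(root, name)).st_size
            except FileNotFoundError:
                pass  # file removed while scanning (e.g. rotated logs)
    return total


def breakdown(base: Path) -> dict:
    """Size in bytes of each immediate subfolder of `base`, largest first."""
    sizes = {
        p.name: tree_size(p)
        for p in base.iterdir()
        if p.is_dir() and not p.is_symlink()
    }
    return dict(sorted(sizes.items(), key=lambda kv: kv[1], reverse=True))
```

For example, `breakdown(Path("crx-quickstart/repository"))` returns a dict mapping each subfolder name to its size in bytes, with the largest consumer first.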

Hope that helps!

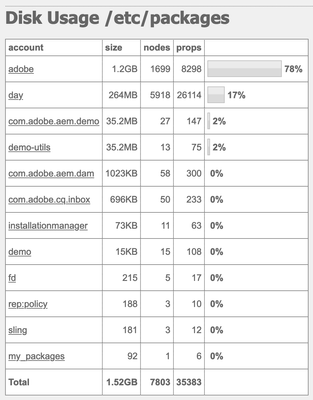

I ran the Disk Usage report again and this time it completed successfully. Sharing the result with you.

Hi @seemak19887037!

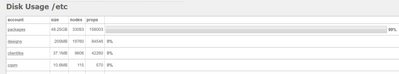

So your overall repository size is nearly 66 GB.

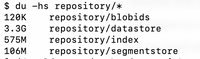

How does that compare to the size of your repository folder on the file system (du -hs crx-quickstart/repository)?

The majority of space is consumed inside the /etc tree.

Could you please drill down into that area to explore where exactly the space is consumed? You can simply click on "etc" in the first cell of the table or directly add the path as a query parameter (/etc/reports/diskusage.html?path=/etc).

I assume that there is a lot of space consumed by packages (/etc/packages) but there may be other candidates to check.

On my local instance, /etc is also the biggest part, at about 80% of the total. For me, it's all packages (99%), but that may be different on your end. If it's the same for you, check which package groups take up the largest share (/etc/reports/diskusage.html?path=/etc/packages).
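The drill-down via the path query parameter can also be generated for every level of a deeper path at once. This is a minimal Python sketch; the function names are hypothetical, and the optional host (for example http://localhost:4503, the usual default publish port) is an assumption to adjust to your setup.

```python
from urllib.parse import quote


def diskusage_url(path: str, host: str = "") -> str:
    """Build the Disk Usage report URL for one repository path."""
    # Keep "/" readable; other special characters (e.g. spaces) are percent-encoded.
    return f"{host}/etc/reports/diskusage.html?path={quote(path, safe='/')}"


def drilldown_urls(path: str, host: str = "") -> list:
    """One report URL per level, from the top segment down to `path`."""
    parts = [p for p in path.strip("/").split("/") if p]
    return [diskusage_url("/" + "/".join(parts[: i + 1]), host) for i in range(len(parts))]
```

For example, `drilldown_urls("/etc/packages")` yields the report URLs for /etc and /etc/packages, matching the two steps described above.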

Hope that helps!

I drilled down into /etc and found that 99% of the disk usage is under /etc/packages. Drilling down into /etc/packages, I found that one package takes 33% of the space. The repository is taking 276 GB on disk.

These are the screenshots of all results:

Hi @seemak19887037!

There are two things to look at:

- You should clean up all "dcep" packages in Package Manager. If you think you might need them later, download and archive them to some external storage first. Afterwards, delete all packages that are not actively used on that instance.

If there are multiple versions of a package installed (see the "Other versions" option of the "More" button when the package is selected), you can start by cleaning up older versions that are no longer used. If there are many deployments (e.g. multiple times a day) and/or your deployment packages are large, this can grow quite quickly (example: 5 deployments per day with a 500 MB deployment package result in 12.5 GB of growth per work week). Cleaning up older, outdated versions of these packages can free up space.

Once you have deleted all packages that are no longer required, run a DataStore garbage collection, perform an offline revision cleanup, and double-check the repository disk usage. Please also make sure that DataStore garbage collection is scheduled to run automatically on a regular basis from the Operations Dashboard. For me, this reduced the size of my instance by ~25%. Please note that the DataStore garbage collection maintenance task does not run out of the box (OOTB); it needs to be actively configured and enabled.

- There is a huge difference between the repository size on your hard disk (276 GB) and the size reported by the Disk Usage report running inside the repository (66 GB). Could you please check the distribution inside the "/repository" folder? While I outlined the reasons for some differences in my initial post, a factor of more than 4x in your case seems somewhat abnormal.
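The two figures in this reply (weekly growth from retained deployment packages, and the gap between on-disk size and the Disk Usage report) can be reproduced with a quick calculation. This is a minimal Python sketch with hypothetical function names, assuming every deployed package version stays in /etc/packages and using decimal units (1 GB = 1000 MB).

```python
def weekly_package_growth_gb(deployments_per_day: int, package_mb: float, workdays: int = 5) -> float:
    """Growth per work week if every deployed package version is retained.

    Assumes decimal units (1 GB = 1000 MB) and ignores installation snapshots.
    """
    return deployments_per_day * package_mb * workdays / 1000


def size_ratio(on_disk_gb: float, reported_gb: float) -> float:
    """How many times larger the on-disk repository is than the Disk Usage report."""
    return on_disk_gb / reported_gb


print(weekly_package_growth_gb(5, 500))  # 12.5
print(round(size_ratio(276, 66), 2))     # 4.18
```

Because the snapshots created during package installation are not counted, actual growth may be higher than this estimate.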

Hope that helps!