Need recommendations to delete duplicate data on publish instances

Hi Team,

Issue: AEM author has around 35k documents, and the publishers have at least 2k documents more than author. Those extra documents are duplicates that need to be deleted.

Below are the options we have. Please verify and comment on these options.

1) Remove all documents from the publishers and copy the whole content from author to the publishers (not using the replication engine, just copying the content from author to the publishers).

If we copy this way through Package Manager, WebDAV, or any other tool, will the author and publish pages stay in sync or not?

2) Remove all documents from the publishers and activate them again from author through the replication engine.

3) The last option is identifying the extra documents and deleting them on the publishers directly. This requires a lot of manual work.

Please suggest the recommended approach for this issue.

Thanks a lot in advance.

Regards,

Chandra


Hi @chandramohanred,

I hope the code snippet below helps.

Save the Excel sheet as a CSV file (one path per line), upload it to DAM, and enter its DAM path in the servlet's input field.

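```java
import com.day.cq.dam.api.Asset;
import com.day.cq.dam.api.Rendition;
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import javax.servlet.ServletException;
import org.apache.commons.lang3.StringUtils;
import org.apache.sling.api.SlingHttpServletRequest;
import org.apache.sling.api.SlingHttpServletResponse;
import org.apache.sling.api.resource.Resource;
import org.apache.sling.api.resource.ResourceResolver;

// These methods belong in a servlet extending SlingAllMethodsServlet (needed for POST),
// registered at /bin/sync/author-publish/content. deletePage(...) is yours to implement.

@Override
protected void doGet(SlingHttpServletRequest request, SlingHttpServletResponse response)
        throws ServletException, IOException {
    // Minimal form that posts the DAM path of the CSV file back to this servlet
    response.setContentType("text/html");
    response.getWriter().write("<form action=\"/bin/sync/author-publish/content\" method=\"POST\">"
            + "<input type=\"text\" name=\"path\"><input type=\"submit\" value=\"submit\"></form>");
}

@Override
protected void doPost(SlingHttpServletRequest request, SlingHttpServletResponse response)
        throws ServletException, IOException {
    response.setContentType("text/html");
    String path = request.getParameter("path");
    if (StringUtils.isEmpty(path)) {
        response.sendError(400, "No path found");
        return;
    }
    // Declared locally; the original snippet used an undeclared field
    ResourceResolver resourceResolver = request.getResourceResolver();
    Resource resource = resourceResolver.getResource(path);
    if (resource == null) {
        response.sendError(400, "No Resource found at the path: " + path);
        return;
    }
    Asset asset = resource.adaptTo(Asset.class);
    if (asset == null) {
        response.sendError(400, "Invalid Asset at path: " + path);
        return;
    }
    // The path list must be uploaded as CSV, not .xlsx
    Rendition rendition = asset.getOriginal();
    if (rendition == null || !"text/csv".equalsIgnoreCase(rendition.getMimeType())) {
        response.sendError(400, "Missing or non-CSV original rendition at path: " + path);
        return;
    }
    // try-with-resources closes the stream even if deletePage throws
    try (BufferedReader br = new BufferedReader(
            new InputStreamReader(rendition.getStream(), StandardCharsets.UTF_8))) {
        String line;
        while ((line = br.readLine()) != null) {
            if (StringUtils.isNotBlank(line)) {
                deletePage(line.trim(), response);
            }
        }
    }
}
```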

You can write the logic to delete the pages/nodes in the `deletePage` method, using the Resource, Page, or Node API.
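Before looping over the CSV lines, it may help to collapse the list: deleting a parent node removes its whole subtree, so a child path listed under an already-deleted folder would otherwise come back as a missing resource. The plain-Java sketch below assumes a hypothetical helper (`DeletionPlanner.topMostPaths`, not an AEM API) that keeps only paths with no listed ancestor. Inside `deletePage` itself you would typically resolve each path and call `ResourceResolver.delete(resource)` followed by `resourceResolver.commit()`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper (not an AEM API): prepares the CSV path list for deletePage.
final class DeletionPlanner {

    private DeletionPlanner() {}

    // Returns the sorted, de-duplicated paths that have no ancestor in the list.
    static List<String> topMostPaths(List<String> rawPaths) {
        Set<String> all = new TreeSet<>();
        for (String raw : rawPaths) {
            String path = raw.trim();
            // Treat "/a/" and "/a" as the same node
            while (path.length() > 1 && path.endsWith("/")) {
                path = path.substring(0, path.length() - 1);
            }
            // Keep absolute paths only; this drops blanks, CSV headers and the root "/"
            if (path.startsWith("/") && path.length() > 1) {
                all.add(path);
            }
        }
        List<String> result = new ArrayList<>();
        for (String path : all) {
            if (!hasListedAncestor(path, all)) {
                result.add(path);
            }
        }
        return result;
    }

    // Walks up the path one segment at a time and checks each ancestor against the set.
    private static boolean hasListedAncestor(String path, Set<String> all) {
        String current = path;
        int slash = current.lastIndexOf('/');
        while (slash > 0) {
            current = current.substring(0, slash);
            if (all.contains(current)) {
                return true;
            }
            slash = current.lastIndexOf('/');
        }
        return false;
    }
}
```

The ancestor walk is used instead of a simpler "starts with the previously kept path" check because lexicographic order can place a sibling such as `/content/dam/a-b` between `/content/dam/a` and `/content/dam/a/b.pdf`.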


Hi @chandramohanred,

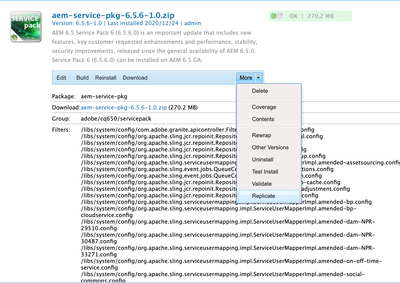

If your documents are in one parent folder, the best way is to delete that folder from the publish instance, then create a package on author with the parent folder path as its filter, build it, and replicate it from the More option in Package Manager. Please consider the downside that the documents will be unavailable in the meantime if you run this directly on production.

If the documents are scattered across multiple folders and hierarchies, you can use Query Builder to get the list of documents from both instances (author and publish):

/libs/cq/search/content/querydebug.html
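The predicates you test in querydebug.html can also be sent to the Query Builder JSON servlet at `/bin/querybuilder.json`, which makes it easy to save both path lists to files. The sketch below only builds the request URL; the root folder `/content/dam` and the default ports (4502 author, 4503 publish) are assumptions to adjust for your setup. `p.limit=-1` asks for all hits, and `p.hits=selective` with `p.properties=jcr:path` trims each hit to its path.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: builds a Query Builder URL listing every dam:Asset path under a root.
final class AssetPathQuery {

    private AssetPathQuery() {}

    static String buildUrl(String host, String rootPath) {
        // LinkedHashMap keeps the predicates in a readable, stable order
        Map<String, String> predicates = new LinkedHashMap<>();
        predicates.put("path", rootPath);          // search below this folder
        predicates.put("type", "dam:Asset");       // asset nodes only
        predicates.put("p.limit", "-1");           // all hits, not just the first page
        predicates.put("p.hits", "selective");     // return only the properties listed next
        predicates.put("p.properties", "jcr:path");
        String query = predicates.entrySet().stream()
                .map(e -> encode(e.getKey()) + "=" + encode(e.getValue()))
                .collect(Collectors.joining("&"));
        return host + "/bin/querybuilder.json?" + query;
    }

    private static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }
}
```

Run it once with the author host and once with the publish host, and save both responses for the comparison step.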

Use any editor or diff tool to compare the two lists and find the roughly 2k extra paths, then dump that list into an Excel sheet.
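If an editor diff is awkward at this size, a set difference does the same job. A minimal sketch, assuming both lists have already been extracted to one path per line (for example with `Files.readAllLines`); the class name `PathDiff` is hypothetical.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper: paths present on publish but missing on author.
final class PathDiff {

    private PathDiff() {}

    // Returns the extra publish paths, trimmed, de-duplicated and sorted.
    static List<String> extraOnPublish(List<String> authorPaths, List<String> publishPaths) {
        Set<String> extra = new TreeSet<>(normalize(publishPaths));
        extra.removeAll(normalize(authorPaths));
        return List.copyOf(extra);
    }

    // Trims whitespace and drops blank lines so formatting noise does not show up as a difference.
    private static Set<String> normalize(List<String> paths) {
        Set<String> out = new TreeSet<>();
        for (String p : paths) {
            String trimmed = p.trim();
            if (!trimmed.isEmpty()) {
                out.add(trimmed);
            }
        }
        return out;
    }
}
```

Sorting the result keeps related paths together, which makes the list easier to review before anything is deleted.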

You can then develop a small utility, such as a mini servlet, that reads that sheet of paths and deletes the nodes programmatically.

This way, you can reuse the utility to restore sync between author and publish whenever needed, just by uploading a new sheet.

Note: The servlet path should be accessible only to administrators.
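Because the deletion runs directly on publish and cannot be undone there, it may be worth validating the uploaded sheet before deleting anything. The sketch below assumes a hypothetical helper (`CsvPathReader`) and an allowed root such as `/content/dam/site` (a placeholder); it takes the first CSV column, strips the byte-order mark and quotes that Excel's CSV export can add, and separates accepted paths from rejected lines so the rejected ones can be reviewed first.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reads the uploaded path list and refuses anything outside one root.
final class CsvPathReader {

    private CsvPathReader() {}

    // Returns first-column paths strictly below allowedRoot; other non-blank cells go to rejected.
    // Assumes paths contain no commas, since each line is split naively on ','.
    static List<String> readAllowedPaths(Reader csv, String allowedRoot, List<String> rejected) {
        String prefix = allowedRoot.endsWith("/") ? allowedRoot : allowedRoot + "/";
        List<String> accepted = new ArrayList<>();
        try (BufferedReader br = new BufferedReader(csv)) {
            String line;
            while ((line = br.readLine()) != null) {
                // Excel's "CSV UTF-8" export starts with a byte-order mark and may quote cells
                String cell = line.replace("\uFEFF", "").split(",", -1)[0].replace("\"", "").trim();
                if (cell.isEmpty()) {
                    continue;
                }
                boolean traversal = cell.contains("/../") || cell.endsWith("/..");
                boolean belowRoot = cell.startsWith(prefix) && cell.length() > prefix.length();
                if (belowRoot && !traversal) {
                    accepted.add(cell);
                } else {
                    rejected.add(cell);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return accepted;
    }
}
```

In the servlet you could wrap the rendition stream in an `InputStreamReader`, pass it here, and write the rejected lines to the response instead of deleting them.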


Thanks, Sanket, for the suggestion. This really helps.

The data/docs are scattered across multiple folders.

Could you please suggest an article or blog that helps with writing a servlet for this?


Sure, I will try this and let you know if I have any doubts.

Thanks a lot for your effort and time.


Maybe it's a "hack" and I'm not sure about performance, but I would write a Groovy script (running on publish) that:

1. Loops through the nodes of type dam:Asset and gets each path.

2. Executes an HTTP request to the author (a HEAD request may be enough) for that particular path with a .json extension (.json so you don't fetch the actual binary stream). Keep in mind that the request must also include the basic auth header.

3. If the status code is 404, deletes the node.

I believe the script itself is straightforward, and you can add a "sleep" between requests to make sure you don't overload the author. Also, use the internal IP of the author to make it faster.
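The post suggests Groovy; the sketch below is a rough Java port of steps 2 and 3 using the JDK's `java.net.http` client, with a sleep between requests. Step 1 (collecting the dam:Asset paths on publish) and the actual deletion are left out, and the class and method names are hypothetical. Only a definite 404 is treated as "missing on author", so an authentication failure (401/403) or a server error never marks a node for deletion.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.ArrayList;
import java.util.Base64;
import java.util.List;

// Hypothetical Java port of steps 2 and 3; not an AEM or Sling API.
final class AuthorExistenceCheck {

    private AuthorExistenceCheck() {}

    // HEAD request for <authorBase><path>.json with a basic auth header. The 5-arg URI
    // constructor percent-encodes characters such as spaces in DAM file names.
    static HttpRequest headRequest(String authorBase, String path, String user, String password) {
        URI base = URI.create(authorBase);
        URI target;
        try {
            target = new URI(base.getScheme(), base.getAuthority(), path + ".json", null, null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Bad path: " + path, e);
        }
        String token = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(target)
                .method("HEAD", HttpRequest.BodyPublishers.noBody())
                .header("Authorization", "Basic " + token)
                .timeout(Duration.ofSeconds(10))
                .build();
    }

    // Only a definite 404 counts as missing; 401/403/5xx are not treated as missing.
    static boolean isMissingOnAuthor(int statusCode) {
        return statusCode == 404;
    }

    // Collects publish paths whose author counterpart returns 404, sleeping between requests.
    static List<String> findOrphans(HttpClient client, String authorBase, List<String> publishPaths,
                                    String user, String password, long sleepMillis)
            throws IOException, InterruptedException {
        List<String> orphans = new ArrayList<>();
        for (String path : publishPaths) {
            HttpResponse<Void> response = client.send(
                    headRequest(authorBase, path, user, password),
                    HttpResponse.BodyHandlers.discarding());
            if (isMissingOnAuthor(response.statusCode())) {
                orphans.add(path);
            }
            Thread.sleep(sleepMillis); // throttle so the author is not flooded
        }
        return orphans;
    }
}
```

Returning the orphan list rather than deleting inside the loop leaves room to review it, or to feed it into the CSV/servlet approach above.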