Speed up big queries


Hi all,

I am looking for the best approach to run the workflow below several times per day. There are two big tables (connected via FDA!). Table AUDIT contains the IDs and timestamps of the records in the CORE table that have been modified. Let's say the workflow starts every 30 minutes, and we need to fetch the data from CORE that has been modified since the workflow's last execution.
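A minimal sketch of this "modified since the last run" pattern follows. It uses Python's built-in sqlite3 as a stand-in for the FDA tables. All names are hypothetical: `audit` with `record_id`/`modified_at` columns, and a `workflow_state` table holding the last-run timestamp. In the workflow, an option storing the date would play the role of that table. The next watermark is taken from the newest timestamp actually read rather than from the clock. That is one common choice, not the only one.

```python
import sqlite3


def fetch_changed_ids(conn: sqlite3.Connection, job: str) -> list[int]:
    """Return AUDIT IDs modified since the stored watermark, then advance it."""
    # Hypothetical state table standing in for an option that stores the last-run date.
    row = conn.execute(
        "SELECT last_run FROM workflow_state WHERE job = ?", (job,)
    ).fetchone()
    last_run = row[0] if row else "1970-01-01 00:00:00"  # first run: take everything

    # Bind the timestamp as a parameter rather than pasting it into the SQL text.
    rows = conn.execute(
        "SELECT record_id, modified_at FROM audit "
        "WHERE modified_at > ? ORDER BY modified_at",
        (last_run,),
    ).fetchall()

    if rows:
        # Next watermark = newest timestamp actually read, not the current clock.
        conn.execute(
            "INSERT OR REPLACE INTO workflow_state (job, last_run) VALUES (?, ?)",
            (job, rows[-1][1]),
        )
        conn.commit()
    return [record_id for record_id, _ in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE audit (record_id INTEGER, modified_at TEXT);
        CREATE TABLE workflow_state (job TEXT PRIMARY KEY, last_run TEXT);
        INSERT INTO audit VALUES (1, '2024-01-01 10:00:00'),
                                 (2, '2024-01-01 10:20:00');
        """
    )
    print(fetch_changed_ids(conn, "core_sync"))  # [1, 2]
    print(fetch_changed_ids(conn, "core_sync"))  # []
```

The timestamps are stored as ISO-style text, so the `>` comparison orders them correctly in this sketch.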

- How can I pass the date of the last execution into the AUDIT query (for example, via an option created to store that date)?

- What about passing the IDs from the AUDIT results to the CORE query? This can certainly be done in a JS file, but is it possible in the GUI? Oracle is behind it, so we can probably expect an error if we pass more than 1000 values in an IN clause (... where ID in (1001 different values) ...).
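Oracle rejects an IN list with more than 1000 expressions (ORA-01795). When the IDs are passed from a script, one common workaround is to split them into batches of at most 1000 and run one query per batch. The sketch below shows that batching in Python for illustration; the same logic would apply in a JS script. It assumes the IDs are already in a list. The `core` table and its `record_id`/`payload` columns are hypothetical. The `:name` placeholders are named bind variables, a style that sqlite3 also accepts.

```python
from typing import Iterator, Sequence

MAX_IN_LIST = 1000  # Oracle allows at most 1000 expressions in one IN list


def chunked(ids: Sequence[int], size: int = MAX_IN_LIST) -> Iterator[Sequence[int]]:
    """Yield consecutive slices of at most `size` IDs."""
    if size < 1:
        raise ValueError("size must be positive")
    for start in range(0, len(ids), size):
        yield ids[start:start + size]


def build_in_queries(
    ids: Sequence[int], size: int = MAX_IN_LIST
) -> list[tuple[str, dict[str, int]]]:
    """Build one parameterised SELECT per batch, using named bind variables."""
    queries = []
    for batch in chunked(ids, size):
        names = [f"id{i}" for i in range(len(batch))]
        placeholders = ", ".join(f":{name}" for name in names)
        # Hypothetical table/columns; values are bound, never concatenated into SQL.
        sql = f"SELECT record_id, payload FROM core WHERE record_id IN ({placeholders})"
        queries.append((sql, dict(zip(names, batch))))
    return queries
```

Each batch is a separate round trip through FDA. For large change sets, joining the two tables in a single query avoids both the limit and the extra queries.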

Is there any better way to optimize fetching data from these tables via FDA in general?

Thanks in advance.

Regards,

Milan


Hi Milan,

You can join them on the ID using the "Add a linked table" option.

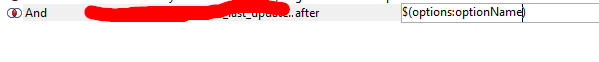

Then, in Edit query, you can pass an option like this.

Thanks,

Saikat
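In plain SQL, the linked-table approach amounts to a single join of AUDIT to CORE on the ID, filtered by the last-run date. No list of IDs is ever built, so the 1000-value IN limit never comes into play. Below is a minimal sketch using Python's sqlite3, with hypothetical table and column names (`audit`, `core`, `record_id`, `modified_at`, `payload`). It illustrates the SQL shape only, not what the workflow's query editor generates.

```python
import sqlite3


def fetch_changed_core_rows(conn: sqlite3.Connection, since: str) -> list[tuple]:
    """Join AUDIT to CORE on the ID; keep CORE rows with an audit entry after `since`."""
    sql = """
        SELECT DISTINCT c.record_id, c.payload
        FROM core AS c
        JOIN audit AS a ON a.record_id = c.record_id
        WHERE a.modified_at > :since   -- the last-run date, bound as a parameter
        ORDER BY c.record_id
    """
    # DISTINCT: one CORE row even if AUDIT logged several changes to it.
    return conn.execute(sql, {"since": since}).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE core (record_id INTEGER, payload TEXT);
        CREATE TABLE audit (record_id INTEGER, modified_at TEXT);
        INSERT INTO core VALUES (1, 'a'), (2, 'b'), (3, 'c');
        INSERT INTO audit VALUES (1, '2024-01-01 09:00:00'),
                                 (1, '2024-01-01 10:15:00'),
                                 (2, '2024-01-01 09:30:00'),
                                 (3, '2024-01-01 10:20:00');
        """
    )
    print(fetch_changed_core_rows(conn, "2024-01-01 10:00:00"))  # [(1, 'a'), (3, 'c')]
```

The filter and the join run in a single statement, so the database can use an index on the audit timestamp and the ID if one exists.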


Hi Saikat,

Works perfectly!

Regards,

Milan
